ByteDance's AI Mathematician Earns Gold Medal-Level Scores

ByteDance's AI Achieves Mathematical Olympiad Success

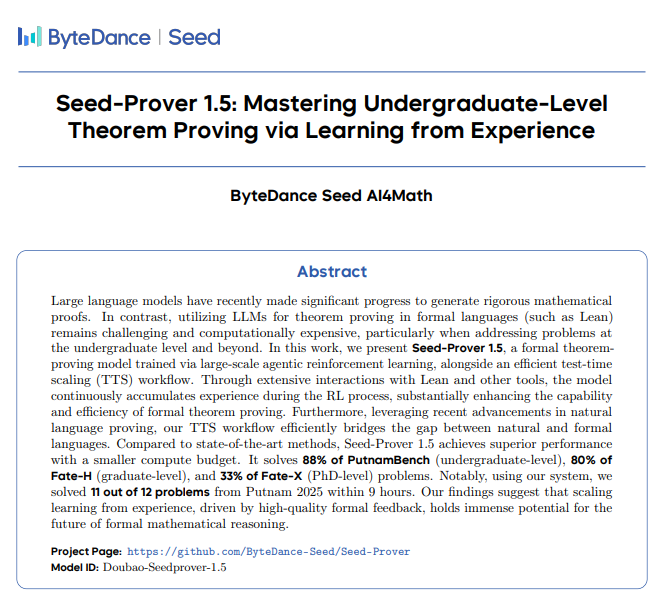

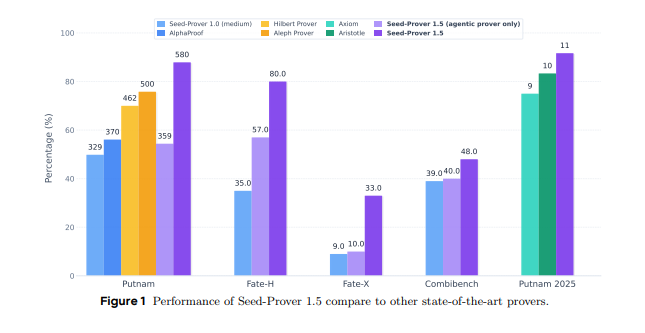

ByteDance's Seed AI team has developed a mathematical reasoning model that is drawing attention in academic circles. Their Seed Prover 1.5 recently solved International Mathematical Olympiad (IMO) problems at gold medal level, a result on par with the competition's top human contestants.

Breaking Down the Achievement

The model solved five of the six problems from IMO 2025 in 16.5 hours, scoring 35 of a possible 42 points (each problem is worth 7), enough for a gold medal among human competitors. This is significant progress over ByteDance's previous model, which needed three days to solve four problems and reached only silver medal standing.

"What makes this particularly exciting," explains Dr. Li Wei, an AI researcher unaffiliated with the project, "is seeing how quickly these models are advancing in complex reasoning tasks that were previously considered uniquely human domains."

The Technology Behind the Breakthrough

The secret sauce? Large-scale reinforcement learning, which lifted Seed Prover 1.5 from solving about half of its practice problems correctly to nearly 90% accuracy. The model didn't stop at the IMO: it also set records on the notoriously difficult Putnam competition for North American university students.

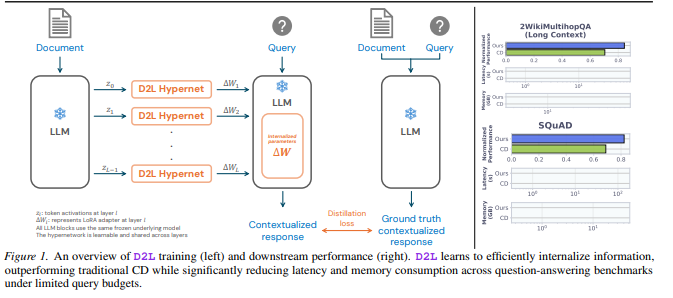

Two key innovations power this mathematical whiz:

- Agentic Prover: Uses formal mathematical languages like Lean to create verifiable proofs - think of it as giving the AI mathematician peer-reviewable work.

- Sketch Model: Mimics human problem-solving by creating informal drafts first, then converting them to formal proofs.

The Sketch Model operates much like a human mathematician working through ideas on scratch paper before writing up the final solution. Trained with reinforcement learning on mixed reward signals, it both improves overall planning and reduces the complexity of each step that must be formalized.
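To give a sense of what "informal draft first, formal proof second" can look like, here is a minimal illustrative sketch in Lean 4. It is not taken from the paper and is not output from Seed Prover; the theorem name `even_add_even` and the toy statement (the sum of two even numbers is even) are chosen purely for illustration. The informal plan appears as comments, and the Lean proof below it follows the same steps so that the proof checker can verify each one.

```lean
-- Informal sketch (the "scratch paper" stage):
--   1. Write a = 2 * m and b = 2 * n.
--   2. Then a + b = 2 * m + 2 * n = 2 * (m + n).
--   3. So k = m + n witnesses that a + b is even.

-- Formal version (the checkable stage), using core Lean 4 only.
-- `even_add_even` is a hypothetical name used for this example.
theorem even_add_even (a b : Nat)
    (ha : ∃ m, a = 2 * m) (hb : ∃ n, b = 2 * n) :
    ∃ k, a + b = 2 * k := by
  -- Step 1: unpack the two witnesses m and n.
  cases ha with
  | intro m hm =>
    cases hb with
    | intro n hn =>
      -- Step 3: offer m + n as the witness;
      -- Step 2: substitute and distribute to close the equation.
      exact ⟨m + n, by rw [hm, hn, Nat.mul_add]⟩
```

Because Lean rejects any step that does not follow, a proof that compiles is verified by construction, which is what makes this kind of output peer-reviewable in the sense described above.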

Practical Applications Beyond Competitions

While competition performance grabs headlines, the real value lies in potential applications:

- Assisting mathematicians with complex proofs

- Verifying mathematical arguments

- Educational tools that demonstrate problem-solving approaches

The team published their findings in a technical paper available on arXiv (https://arxiv.org/pdf/2512.17260), inviting scrutiny from both AI and mathematics communities.

Key Points:

- Gold Medal Performance: Solved five of six IMO 2025 problems for a gold medal-level score (35/42 points)

- Speed Boost: Completed solutions in 16.5 hours vs previous model's three days

- Technical Innovations: Agentic Prover and Sketch Model mimic human reasoning processes

- Broader Implications: Could transform mathematical research and education methodologies