Apple's Xcode Gets Smarter with OpenAI Integration

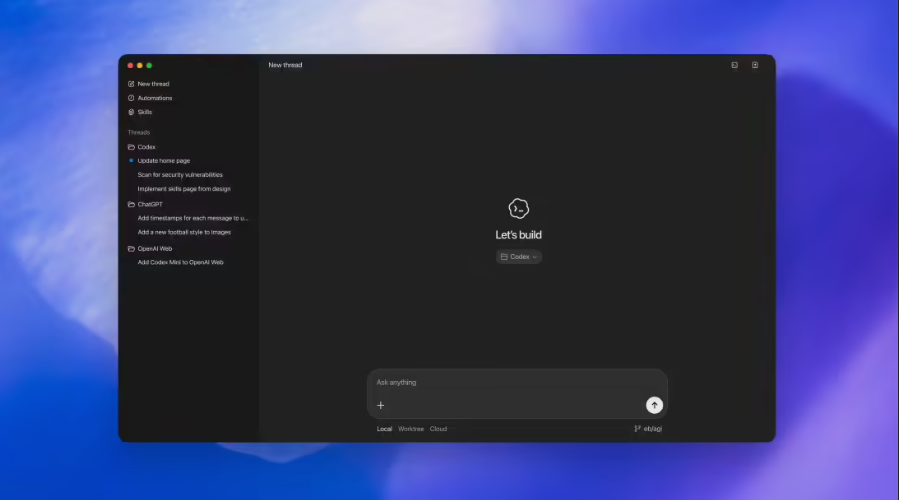

Apple has taken a significant leap forward in developer tools with its latest Xcode update. Version 26.3 introduces native integration with advanced AI models from OpenAI and Anthropic, aiming to change how developers build apps.

From Helper to Full Partner

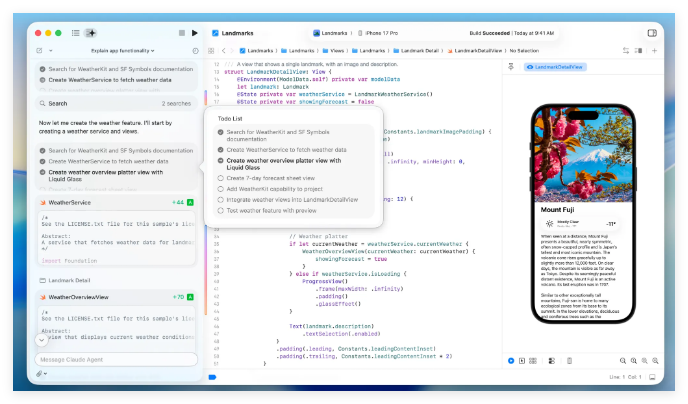

The new "agent-based" approach goes beyond simple code suggestions. Developers can now:

- Get whole-project understanding - AI agents automatically analyze a project's structure, metadata, and API relationships

- Automate complete workflows - Natural language commands like "add Apple-standard framework feature" trigger end-to-end implementation

- Verify changes visually - The system highlights modifications and maintains clear version history

Optimized for Performance

Apple didn't just tack AI features onto Xcode. The company worked closely with OpenAI to optimize:

- Token efficiency for GPT-5.2-Codex models

- Tool call responsiveness

- Project discovery through newly added Model Context Protocol (MCP) support

The result? Smoother operation that feels native rather than bolted on.

Lowering the Coding Barrier

For newcomers, these changes could be revolutionary. Apple's developer relations lead likens the transparent process to "having an expert looking over your shoulder," adding: "You see every change clearly and can roll back anything instantly."

The company will demonstrate these features in a special "Learn by Doing" workshop this Thursday.

The Xcode 26.3 Release Candidate is available now, with a full App Store release coming soon.

Key Points:

- Deep integration of GPT-5-level models from OpenAI, alongside Anthropic's models, in Xcode 26.3

- Supports natural language commands for complex tasks

- Visual change tracking and easy rollback features

- Special optimizations for developer workflows

- Available now as Release Candidate