Apple's AI Design Breakthrough: Small Model Outshines GPT-5

How Apple Taught AI to Design Like Human Designers

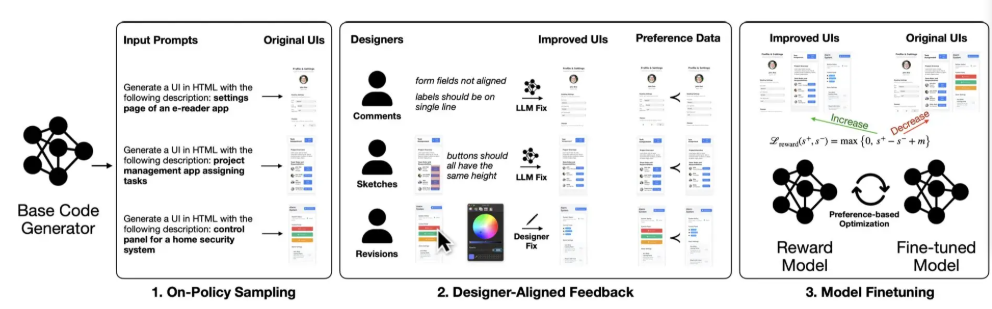

For years, AI-generated interfaces have suffered from what designers call "functional but ugly" syndrome. Apple's latest research reveals why and, more importantly, how its researchers fixed it.

The tech giant found that traditional AI training methods miss crucial nuances. "Scoring systems are too blunt," explains Dr. Elena Torres, lead researcher on the project. "They can't capture why one layout feels right while another falls flat."

The Designer Touch

The breakthrough came when Apple brought human experts back into the loop. Its team worked with 21 senior designers, who provided:

- 1,460 detailed improvement logs

- Hand-drawn sketches showing ideal layouts

- Direct modification suggestions for AI outputs

"We weren't just saying 'make it better,'" notes designer Marcus Chen. "We showed exactly how, with pencil marks demonstrating spacing relationships, visual hierarchy, and that elusive quality we call 'balance.'"

Surprising Results

The findings challenged conventional wisdom:

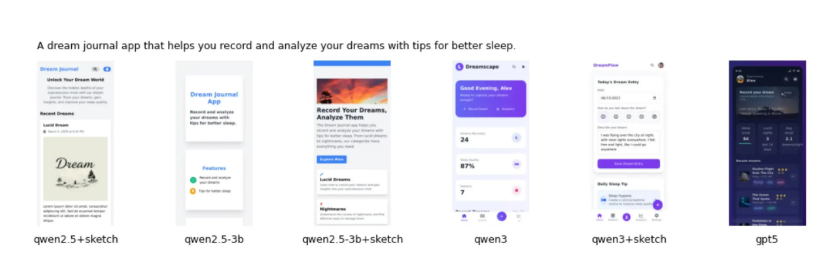

- Small models can outperform giants: Qwen3-Coder, a relatively compact model, surpassed GPT-5 at interface design after being trained with the designers' feedback

- Visual feedback trumps text: Agreement between evaluations jumped from 49% with text descriptions to 76% with sketches

- Efficient learning: Just 181 sketch samples produced significant improvements
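The article does not say exactly how "agreement" was measured. One common way to express such a figure is simple percent agreement: the share of items on which two evaluators reach the same judgment. The minimal sketch below assumes that definition, with each item being a hypothetical pairwise choice ("A" or "B") between two generated layouts; the function name and the toy judgments are invented for illustration and are not Apple's evaluation code or data.

```python
from typing import Sequence


def percent_agreement(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Return the share (0-100) of items on which two raters made the same call.

    Assumption: each item is a pairwise comparison labeled "A" or "B",
    meaning which of two generated layouts the rater preferred.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must judge the same items")
    if not rater_a:
        raise ValueError("need at least one item")
    # Count positions where both raters picked the same layout.
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


# Invented toy judgments, not data from the study.
rater_1 = ["A", "B", "A", "B"]
rater_2 = ["A", "A", "B", "B"]
print(percent_agreement(rater_1, rater_2))  # 50.0
```

Under this reading, a jump from 49% to 76% means evaluators agreed on roughly three comparisons in four instead of about one in two. Chance-corrected measures such as Cohen's kappa are a stricter alternative.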

The implications extend beyond Apple products. The same approach could change how AI is trained for any creative task where subjective judgment matters.

Key Points:

- 🎨 Quality over size: Smaller, well-trained models can beat larger generic ones at specialized tasks

- ✏️ Show, don't tell: Visual feedback proves far more effective than text instructions for design training

- ⚡ Rapid improvement: The method achieves dramatic results with surprisingly few samples