Ant Lingbo's New AI Model Brings Virtual Worlds to Life

Ant Lingbo Breaks New Ground With Interactive World Model

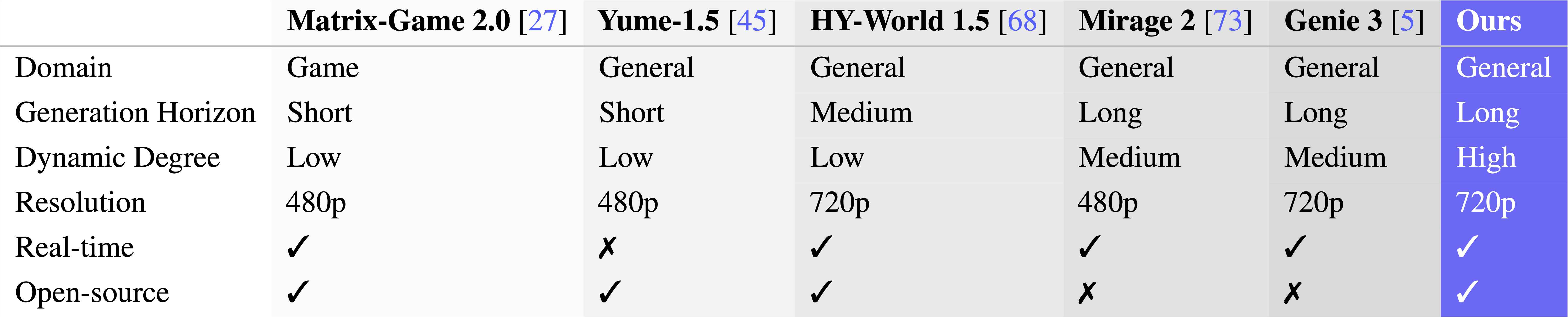

In a move that could reshape how virtual environments are built, Ant Lingbo Technology has released LingBot-World as open-source software. The AI model generates lifelike digital spaces in which objects keep their form even during extended interactions, addressing one of the most persistent problems in virtual world design.

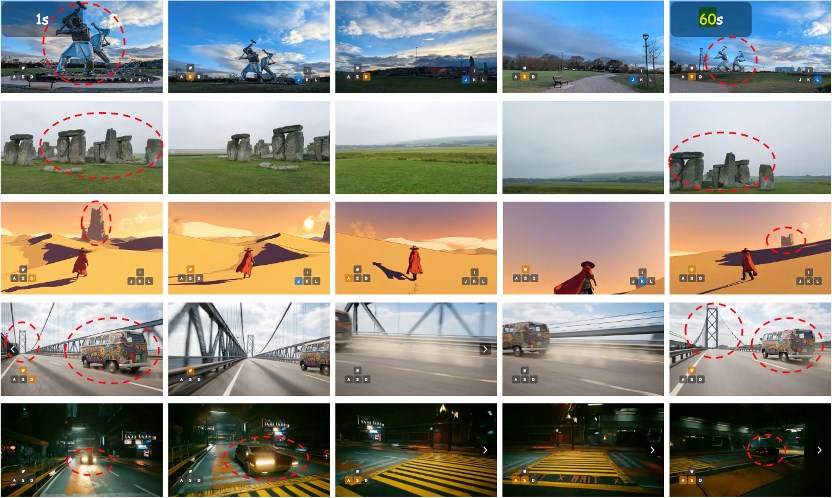

(Caption: LingBot-World sets new standards for scene duration, dynamism and resolution)

Solving the Disappearing Act Problem

Ever noticed how objects in some virtual worlds gradually warp or vanish? Developers call this "long-term drift," and LingBot-World tackles it directly. Using a multi-stage training process, the model keeps environments stable for up to 10 minutes, a major step forward for complex, long-running simulations.

"Imagine training a self-driving car AI where street signs melt away after two minutes," explains Dr. Wei Zhang, lead researcher on the project. "With our model, every detail stays crisp through extended sessions."

(Caption: Even after 60 seconds away, objects retain their structure when the camera returns)

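One simple way to put a number on drift is to capture the view before the camera turns away and compare it with the view once the camera returns. The sketch below is purely illustrative and is not LingBot-World's own evaluation method. The function names and the 0.05 tolerance are hypothetical, frames are assumed to be small grayscale grids with pixel values between 0 and 1, and the camera is assumed to return to exactly the same position.

```python
from typing import Sequence

# A frame is modeled as a grid of grayscale pixel values in [0, 1].
# This representation and the default tolerance are hypothetical choices.
Frame = Sequence[Sequence[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two same-sized frames."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have the same shape")
    diffs = [abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    if not diffs:
        raise ValueError("frames must not be empty")
    return sum(diffs) / len(diffs)


def revisit_is_consistent(before: Frame, after: Frame, tolerance: float = 0.05) -> bool:
    """True if the view seen on return stays within `tolerance` of the original view."""
    return mean_abs_diff(before, after) <= tolerance


if __name__ == "__main__":
    original = [[0.2, 0.4], [0.6, 0.8]]
    stable_return = [[0.21, 0.39], [0.6, 0.8]]  # only minor noise on return
    drifted_return = [[0.0, 0.0], [0.0, 0.0]]   # scene content has "melted" away
    print(revisit_is_consistent(original, stable_return))   # True
    print(revisit_is_consistent(original, drifted_return))  # False
```

A raw pixel comparison is deliberately crude: it also penalizes legitimate changes such as shifting weather or lighting, so a real evaluation would likely need a more robust image-similarity measure.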
Instant Response Meets Creative Control

The model responds quickly to commands, generating video at about 16 frames per second while keeping total latency under one second. Users can:

- Navigate scenes using keyboard/mouse controls

- Alter weather conditions through simple text prompts

- Trigger specific events while maintaining environmental consistency
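To put those figures in perspective, the short calculation below uses only the numbers quoted in this article. The function names are hypothetical, and the 10-minute frame count assumes, purely for illustration, that the 16 fps rate holds for an entire stable session.

```python
# Back-of-envelope arithmetic using only the figures quoted in the article.
# Function names are hypothetical; sustaining 16 fps for a full 10-minute
# session is an illustrative assumption, not a reported result.

FPS = 16                # approximate generation rate reported for LingBot-World
LATENCY_BUDGET_S = 1.0  # reported upper bound on total delay, in seconds
SESSION_MINUTES = 10    # reported length of a stable session


def frame_interval_ms(fps: float) -> float:
    """Milliseconds available to produce each frame at the given rate."""
    return 1000.0 / fps


def frames_in_window(fps: float, seconds: float) -> int:
    """Number of frames generated during a window of `seconds` at `fps`."""
    return round(fps * seconds)


if __name__ == "__main__":
    print(f"Per-frame budget: {frame_interval_ms(FPS)} ms")
    print(f"Frames generated within one second: {frames_in_window(FPS, LATENCY_BUDGET_S)}")
    print(f"Frames in a {SESSION_MINUTES}-minute session: "
          f"{frames_in_window(FPS, SESSION_MINUTES * 60)}")
```

Running it prints a per-frame budget of 62.5 ms, 16 frames per second of latency budget, and 9600 frames over ten minutes, which shows how many consecutive frames the model must keep consistent.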

(Caption: Vehicles keep their shape perfectly despite camera movements)

Training Tomorrow's AI Today

The technology's most promising use is in training other AI systems. "Real-world testing for robotics and autonomous systems is expensive and sometimes dangerous," notes Zhang. "Our model creates safe, cost-effective digital proving grounds."

The system learns from two data streams:

- Carefully filtered internet videos covering diverse scenarios

- Game-engine recordings that capture clean visuals without on-screen interface elements

Combining the two sources teaches the model how actions change an environment, knowledge that is essential for building practical machine intelligence.

(Caption: Architectural structures remain intact over time)

Open Access Accelerates Innovation

By making LingBot-World freely available, Ant Lingbo hopes to spur development across multiple industries. The model weights and inference code are now publicly available to researchers and developers.

The release marks another step in Ant's AGI roadmap, which aims to connect digital models with real-world physical applications.