AI's Surprising Struggle: Why Six-Year-Olds Outsmart Top Models

When Kids Outperform AI: The Visual Reasoning Gap

Artificial intelligence may dominate chessboards and math competitions, but there's one area where preschoolers still reign supreme: visual reasoning. A surprising new study from institutions including UniPat AI and Alibaba shows that top-tier AI models barely outperform toddlers in basic visual tasks.

The BabyVision Wake-Up Call

The research team created BabyVision, a visual reasoning test that exposes fundamental limitations in how AI perceives the world. While human children effortlessly spot differences or solve spatial puzzles, even Gemini 3 Pro Preview - currently leading the field - struggles with tasks most six-year-olds find simple.

Lost in Translation

The core issue? Current large models remain fundamentally "language animals." When processing images, they first convert visuals into text descriptions before attempting reasoning. This indirect approach works for broad concepts but fails miserably with subtle visual details like slight curve variations or complex spatial relationships.

Four Ways AI Gets Visuals Wrong

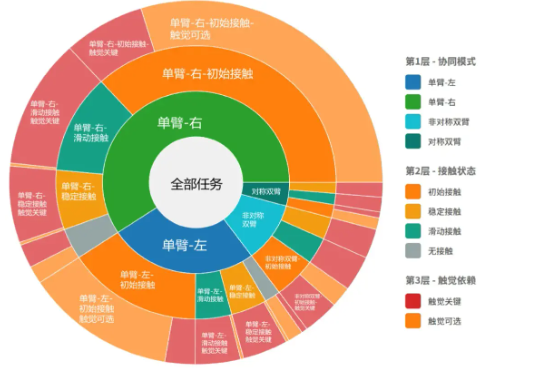

The study categorizes AI's visual shortcomings into four critical areas:

- The Missing Details Dilemma: Pixel-level differences often escape AI notice, leading to wrong answers in shape-matching tasks

- Maze Runners Gone Wrong: Like distracted children, models lose track of paths at intersections during trajectory tracking

- Spatial Imagination Gap: Text descriptions can't accurately represent 3D space, causing frequent projection errors

- Pattern Blindness: Instead of understanding evolving patterns, models rigidly count attributes without grasping deeper logic

Implications for Embodied Intelligence

These findings throw cold water on ambitious plans for embodied AI assistants. If machines can't match a child's understanding of their physical environment, how can we trust them to navigate our world safely?

The research suggests two potential solutions:

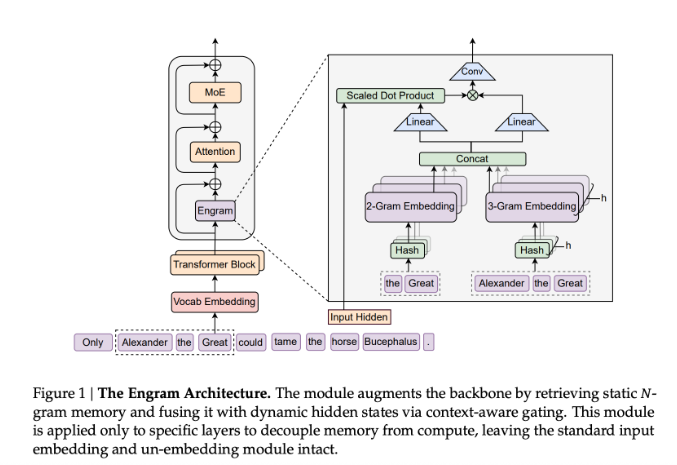

- Reinforcement learning approaches (RLVR) that incorporate explicit intermediate reasoning steps

- True multimodal systems capable of "visual calculation" within pixel space itself - similar to Sora 2's approach - rather than relying on language translations

The study serves as a humbling reminder: the path to artificial general intelligence might not lie in solving harder math problems, but in mastering the simple puzzles children enjoy.