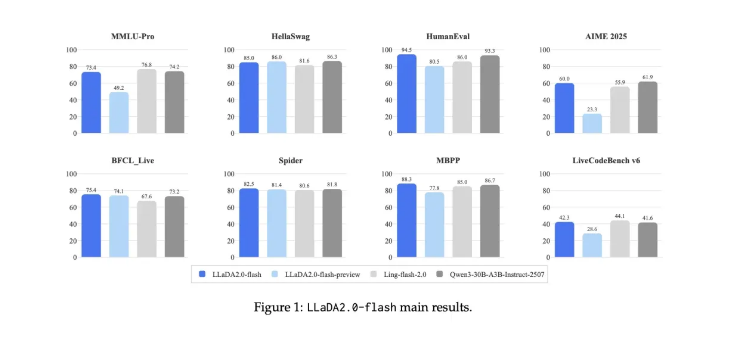

Ant Group's LLaDA2.0: A 100B-Parameter Leap in AI Language Models

Ant Group Breaks New Ground with Open-Source LLaDA2.0

Ant Group's Technology Research Institute has released LLaDA2.0, which it describes as the industry's first 100-billion-parameter discrete diffusion language model (dLLM). The release is positioned as more than an incremental update: it challenges assumptions about how far diffusion models for language can be scaled.

What Makes LLaDA2.0 Special?

The model comes in two sizes: a compact 16B version (mini) and a heavyweight 100B version (flash). The larger model is reported to perform particularly well on demanding tasks such as code generation and instruction execution.

"We've cracked the code on scaling diffusion models," explains an Ant Group spokesperson. "Our Warmup-Stable-Decay (WSD) pre-training strategy allows LLaDA2.0 to build on existing autoregressive model knowledge rather than starting from scratch - saving both time and resources."

Speed That Turns Heads

Here's where things get exciting for developers:

- Lightning-fast processing at 535 tokens per second

- 2.1x faster than comparable autoregressive models

- Speedup attributed to KV cache reuse and block-level parallel decoding
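For readers unfamiliar with block-level parallel decoding, the general idea can be illustrated with a toy sketch. This is not Ant Group's implementation: the `predict` callback, the confidence threshold, and the toy scorer are all hypothetical stand-ins for a real model, and KV cache reuse is not modeled at all. The sketch only shows why committing several confident tokens per step can take fewer steps than decoding one token at a time.

```python
from typing import Callable, List, Optional, Tuple

MASK = None  # placeholder for a position that has not been decoded yet


def decode_blockwise(
    length: int,
    block_size: int,
    predict: Callable[[List[Optional[int]], int], Tuple[int, float]],
    threshold: float = 0.9,
) -> Tuple[List[Optional[int]], int]:
    """Fill `length` masked positions block by block.

    `predict(seq, pos)` is a hypothetical stand-in for a model call that
    returns (token, confidence) for one masked position.
    Returns the decoded sequence and the number of decoding steps used.
    """
    seq: List[Optional[int]] = [MASK] * length
    steps = 0
    for start in range(0, length, block_size):
        block = range(start, min(start + block_size, length))
        while any(seq[i] is MASK for i in block):
            # Score every still-masked position in the current block.
            guesses = {i: predict(seq, i) for i in block if seq[i] is MASK}
            # Commit all sufficiently confident positions in the same step.
            accepted = [i for i, (_, conf) in guesses.items() if conf >= threshold]
            # Always commit at least the single best guess so the loop progresses.
            if not accepted:
                accepted = [max(guesses, key=lambda i: guesses[i][1])]
            for i in accepted:
                seq[i] = guesses[i][0]
            steps += 1
    return seq, steps


def toy_predict(seq: List[Optional[int]], pos: int) -> Tuple[int, float]:
    # Toy scorer (assumption): even positions are "easy", odd positions are not.
    return pos, (0.95 if pos % 2 == 0 else 0.5)


tokens, steps = decode_blockwise(length=8, block_size=4, predict=toy_predict)
print(tokens, steps)
```

In this toy run, eight positions are filled in six steps rather than eight, because the two confident positions in each block are committed together. How much a real model gains depends on how often its predictions clear the threshold.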

The team didn't stop there. During post-training, they further optimized performance with complementary masking and Confidence-Aware Parallel (CAP) training.
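The article does not define complementary masking, so the following is a sketch under one plausible reading: each training sequence is turned into two views whose mask patterns are exact complements, so every token is a prediction target in exactly one of them. The function names, the `[MASK]` string, and the 0.5 mask ratio are illustrative assumptions, not details from the LLaDA2.0 release.

```python
import random
from typing import List, Tuple


def complementary_masks(
    length: int, mask_ratio: float, seed: int = 0
) -> Tuple[List[bool], List[bool]]:
    """Return a random mask and its exact complement (True = masked)."""
    rng = random.Random(seed)
    mask = [rng.random() < mask_ratio for _ in range(length)]
    complement = [not m for m in mask]
    return mask, complement


def apply_mask(tokens: List[str], mask: List[bool], mask_token: str = "[MASK]") -> List[str]:
    """Replace masked positions with a placeholder token."""
    return [mask_token if m else t for t, m in zip(tokens, mask)]


tokens = ["def", "add", "(", "a", ",", "b", ")", ":"]
mask_a, mask_b = complementary_masks(len(tokens), mask_ratio=0.5)
view_a = apply_mask(tokens, mask_a)
view_b = apply_mask(tokens, mask_b)
print(view_a)
print(view_b)
```

Under this reading, the appeal is data efficiency: a single random mask leaves some tokens unsupervised in a given pass, while the pair of views covers every token once.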

Real-World Performance That Delivers

Early tests show LLaDA2.0 excels where it matters most:

- Code generation with superior structural planning

- Complex agent calls that require nuanced understanding

- Long-text tasks demanding sustained coherence

The model demonstrates remarkable adaptability across diverse applications - from technical programming scenarios to creative writing exercises.

What This Means for AI's Future

This release does more than just introduce another large language model. It fundamentally changes our understanding of what diffusion models can achieve at scale. Ant Group's decision to open-source LLaDA2.0 invites global collaboration, potentially accelerating innovation across the AI landscape.

The company has already hinted at future developments, including plans to:

- Expand parameter scales even further

- Integrate reinforcement learning techniques

- Explore new thinking paradigms for generative AI

The model is now available for exploration at https://huggingface.co/collections/inclusionAI/llada-20.

Key Points:

- Industry first: 100B-parameter discrete diffusion language model

- Speed demon: Processes 535 tokens per second (2.1x faster than comparable autoregressive models)

- Code whisperer: Excels at complex programming tasks

- Open invitation: Available now on Hugging Face for developers worldwide