Alibaba's Z-Image Turbocharges AI Art with Surprising Efficiency

Alibaba's Lean Image Generator Outperforms Bulkier Rivals

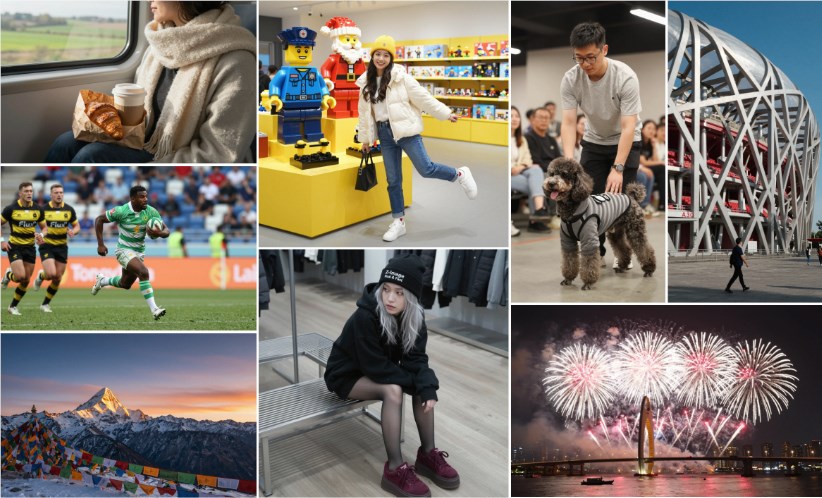

Imagine creating a detailed 1024×1024-pixel neon Hanfu portrait in just 2.3 seconds on your gaming PC. That's the reality Alibaba's Tongyi Lab demonstrated last night with its new Z-Image-Turbo model, which achieved this feat while using only 13GB of VRAM on an RTX 4090.

Small Package, Big Results

What makes Z-Image remarkable isn't just what it can do, but how efficiently it does it:

- Lightweight operation: Runs smoothly on modest hardware like the RTX 3060 with just 6GB VRAM

- Chinese prompt mastery: Understands complex nested descriptions and even corrects logical inconsistencies

- Photorealistic details: Captures subtle elements like skin texture and glass reflections that often stump other models

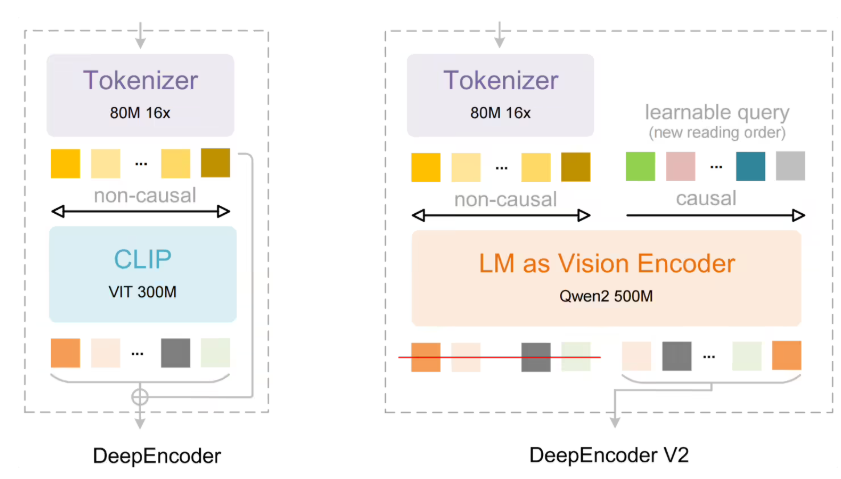

The secret sauce? A novel S3-DiT architecture that processes text, visual semantics and image tokens as a single stream. This streamlined approach uses about one-third the parameters of competing models while delivering comparable, and sometimes superior, results.
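To make the "single stream" idea concrete, here is a toy sketch in plain Python. It is not Z-Image's actual code: the token counts, the embedding width and the identity-projection attention are all assumptions chosen for illustration. It only shows the general pattern of joining text, visual-semantic and image tokens into one sequence so that a single attention pass mixes all three.

```python
import math
import random

def make_tokens(n, dim, rng):
    """Return n random token vectors of width dim (stand-ins for real embeddings)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]

def self_attention(tokens):
    """Single-head self-attention with identity projections (toy only)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    out, weights = [], []
    for q in tokens:
        # Scaled dot-product score of this query against every token in the stream.
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in tokens]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps]
        weights.append(row)
        # Output is the attention-weighted average of all token vectors.
        out.append([sum(w * v[d] for w, v in zip(row, tokens)) for d in range(dim)])
    return out, weights

rng = random.Random(0)
DIM = 8  # hypothetical embedding width
text_tokens = make_tokens(4, DIM, rng)      # hypothetical prompt embeddings
semantic_tokens = make_tokens(2, DIM, rng)  # hypothetical visual-semantic embeddings
image_tokens = make_tokens(6, DIM, rng)     # hypothetical image latent patches

# Single stream: one sequence, one attention pass shared by every modality.
stream = text_tokens + semantic_tokens + image_tokens
mixed, weights = self_attention(stream)

# The first image token's attention row spans all 12 tokens, text included.
first_image_row = weights[len(text_tokens) + len(semantic_tokens)]
print(len(stream), len(first_image_row))
```

A design with separate streams per modality would need its own weights for each stream plus a cross-attention step to connect them. Sharing one set of weights across a single sequence is one plausible reason a design like this can get by with fewer parameters.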

Democratizing AI Art Creation

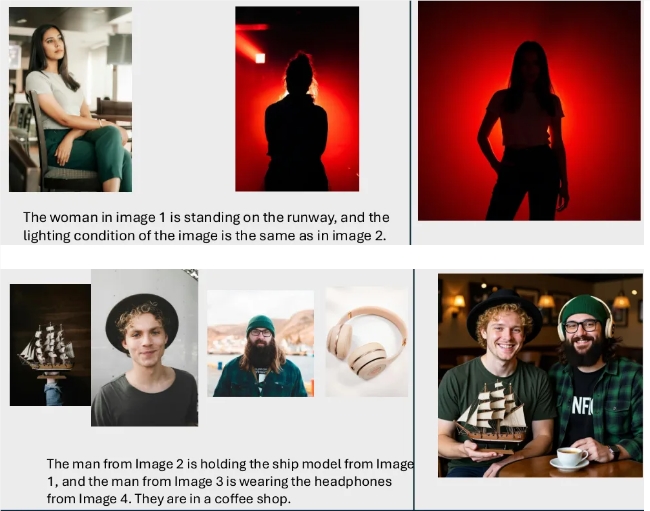

The team didn't stop at generation capabilities. They've also released Z-Image-Edit, allowing natural language-based image modifications that previously required Photoshop skills. Want to swap heads or change backgrounds? Just describe what you want.

While Alibaba hasn't confirmed full open-sourcing plans, the model is already accessible via ModelScope and Hugging Face. With simple pip installation available and enterprise API pricing forthcoming, commercial competitors may need to rethink their strategies.

This development marks a turning point for generative AI art tools. When professional-grade results become achievable on everyday hardware, without data-center-scale compute, many more creators can put these tools to work.

The question isn't whether you'll try Z-Image; it's what you'll create first.

Project address: https://github.com/Tongyi-MAI/Z-Image

Key Points:

- Efficiency breakthrough: Matches larger models' quality with about one-third of the parameters

- Hardware accessibility: Runs on consumer GPUs, starting with the RTX 3060

- Chinese language strength: Excels at understanding and interpreting complex prompts

- Open availability: Currently accessible through major AI platforms