Alibaba's Qwen3-Omni Model Nears Release with Hugging Face Integration

Alibaba's Next-Gen Multimodal AI Nears Open-Source Release

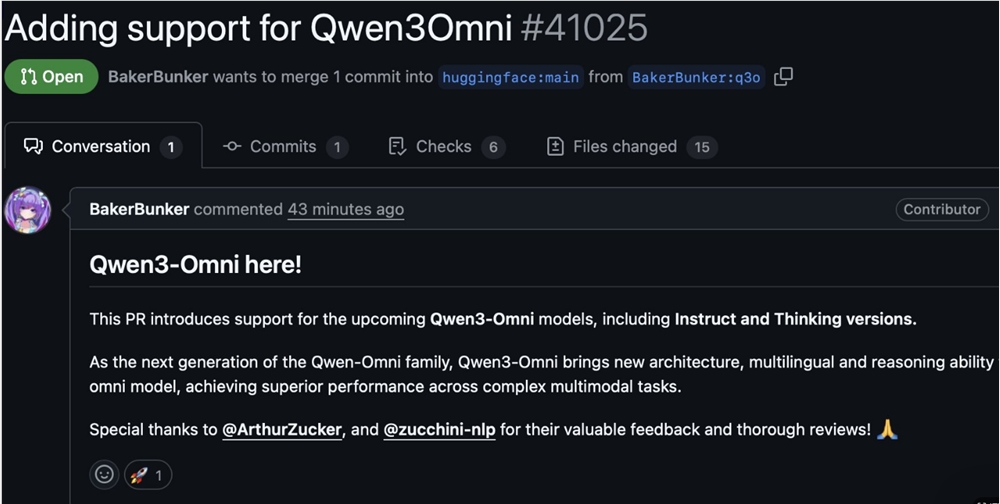

Alibaba Cloud's Qwen team is preparing to release Qwen3-Omni, the next model in its cross-modal AI line. Support for the model is being added to Hugging Face's Transformers library through a recently submitted pull request (PR). Once merged, the integration would make the model available through one of the most widely used open-source AI toolkits, lowering the barrier for developers worldwide who want to build multimodal applications.

Technical Advancements in Qwen3-Omni

The third-generation model builds on its predecessors with an enhanced end-to-end architecture that can process multiple input modalities, including:

- Text documents

- Visual content (images/video)

- Audio streams

The system employs a distinctive Thinker-Talker dual-track design:

- Thinker module: Processes and interprets multimodal inputs, generating high-level semantic representations

- Talker module: Converts processed information into natural speech outputs in real-time

This split supports streaming in both training and inference: the Talker can begin producing speech from the Thinker's representations before the full response has been generated. That makes the design particularly suitable for real-time interactive applications such as virtual assistants and automated customer service.
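To make the streaming idea concrete, below is a toy Python sketch of a Thinker-Talker-style pipeline built from chained generators. It is not Qwen3-Omni's implementation or API: the functions `thinker`, `talker` and `stream` are hypothetical, the "semantic representation" is simply a list of token lengths, and the "speech frames" are slices of that list. What it illustrates is the scheduling property the architecture relies on: output for the first input segment is emitted before the next segment is even processed.

```python
from typing import Iterable, Iterator, List, Tuple

# Hypothetical toy pipeline: names and "encodings" are illustrative only,
# not Qwen3-Omni's actual components or API.


def thinker(segments: Iterable[str], log: List[str]) -> Iterator[List[int]]:
    """Toy Thinker: handles each input segment as soon as it arrives and
    yields a stand-in 'semantic representation' (one length per token)."""
    for seg in segments:
        log.append(f"thinker:{seg}")
        yield [len(tok) for tok in seg.split()]


def talker(reps: Iterable[List[int]], log: List[str],
           frame_size: int = 2) -> Iterator[List[int]]:
    """Toy Talker: turns each representation into fixed-size 'speech frames'
    immediately, without waiting for the rest of the input."""
    for rep in reps:
        for i in range(0, len(rep), frame_size):
            frame = rep[i:i + frame_size]
            log.append(f"talker:{frame}")
            yield frame


def stream(segments: Iterable[str],
           frame_size: int = 2) -> Tuple[List[List[int]], List[str]]:
    """Run the two stages lazily and record the order in which they act."""
    log: List[str] = []
    frames = list(talker(thinker(segments, log), log, frame_size))
    return frames, log


if __name__ == "__main__":
    frames, log = stream(["hello there world", "how are you"])
    print(frames)
    print(log)
```

Because each stage is a generator, the Talker pulls one representation at a time from the Thinker. Running `stream(["hello there world", "how are you"])` produces a log in which `talker:[5, 5]` appears before `thinker:how are you`, i.e. "speech" for the first segment starts before the second segment is processed. A real system would replace the placeholders with learned encoders and a speech decoder, but the producer-consumer structure is the part that enables low latency.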

Deployment Optimization for Edge Devices

A key focus of the Qwen3-Omni development has been improving performance on resource-constrained devices. The team has implemented several optimizations:

- Reduced computational overhead through architectural refinements

- Enhanced memory efficiency for edge deployment scenarios

- Improved streaming capabilities for continuous input processing

The Hugging Face submission reflects Alibaba Cloud's continued commitment to open-source collaboration within the AI community. Once the PR is merged, developers should be able to use Qwen3-Omni directly through the popular Transformers library ecosystem.

Key Points:

- Open-source milestone: PR submission indicates imminent public availability via Hugging Face

- Multimodal capabilities: Unified processing of text, visual, and auditory data streams

- Edge optimization: Designed for efficient deployment on resource-limited devices

- Real-time performance: Thinker-Talker architecture enables low-latency interactions

- Generational improvement: The third iteration builds on the established Qwen series foundation