Alibaba's Bai Ling Speech Model Now Speaks Your Language—And Your Emotions

Alibaba's Speech AI Breakthrough: Emotionally Intelligent Multilingual Models

In a move that could redefine voice technology, Alibaba's Tongyi team has supercharged its Bai Ling speech models with human-like multilingual fluency and emotional range. Forget robotic monotones—these systems now understand nuance.

Three Seconds to Your Voice

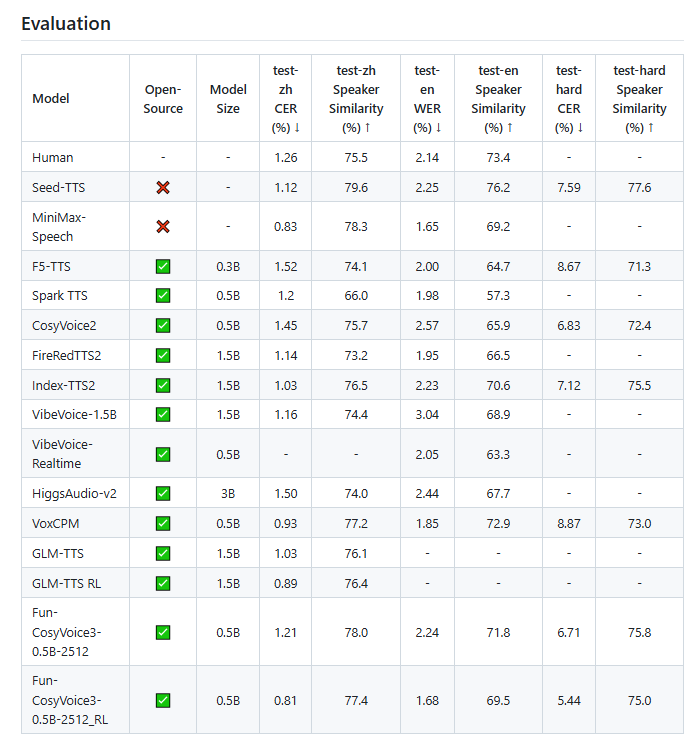

The magic happens startlingly fast. Provide just three seconds of audio, and the upgraded Fun-CosyVoice3 model clones vocal patterns across nine languages (including Mandarin, English, and Japanese) and eighteen regional dialects. Ever wanted your Cantonese grandmother's warmth in a Japanese business meeting? The tech makes it possible.

Provide just three seconds of audio, and the upgraded Fun-CosyVoice3 model clones vocal patterns across nine languages (including Mandarin, English, Japanese) and eighteen regional dialects. Ever wanted your Cantonese grandmother's warmth in a Japanese business meeting? The tech makes it possible.

"We've essentially given AI the equivalent of perfect pitch for human emotion," explains Dr. Li Wen, lead developer at Tongyi. "The system detects subtle vocal cues—a quiver of excitement, clipped syllables of irritation—then reproduces them authentically."

Under the Hood: Faster, Smarter, Sharper

The technical leaps are substantial:

- 50% faster response: First-packet delay slashed in half

- 93% accuracy in noisy environments: The Fun-ASR speech-recognition model cuts through background chatter

- 160 ms streaming latency: Near-instant voice interaction that feels natural

Developers will appreciate the expanded toolkit, which supports local deployment and customization. Open-sourced on GitHub (FunAudioLLM/CosyVoice), these models can power applications ranging from real-time translation earbuds to emotionally responsive audiobook narration.

Real-World Impact Beyond Tech Demos

The implications stretch far beyond engineering labs:

- Accessibility: Non-verbal users gain expressive synthetic voices

- Entertainment: Streamers dub live broadcasts instantly in multiple languages

- Business: Customer service bots convey appropriate empathy

As voice becomes our primary interface with technology, Alibaba's upgrade is a reminder that the future speaks many tongues, and does so with feeling.

Key Points:

- 🌍 Polyglot prowess: Instant switching between nine languages and eighteen regional dialects

- 🎭 Emotional intelligence: Captures emotions such as happiness and anger through vocal patterns

- ⚡ Performance boost: Halved first-packet delay, 93% accuracy in noisy environments

- 🔧 Developer-friendly: Open-source with local deployment options