AI Companion Apps Expose Millions of Private Messages

Massive Data Breach Revealed

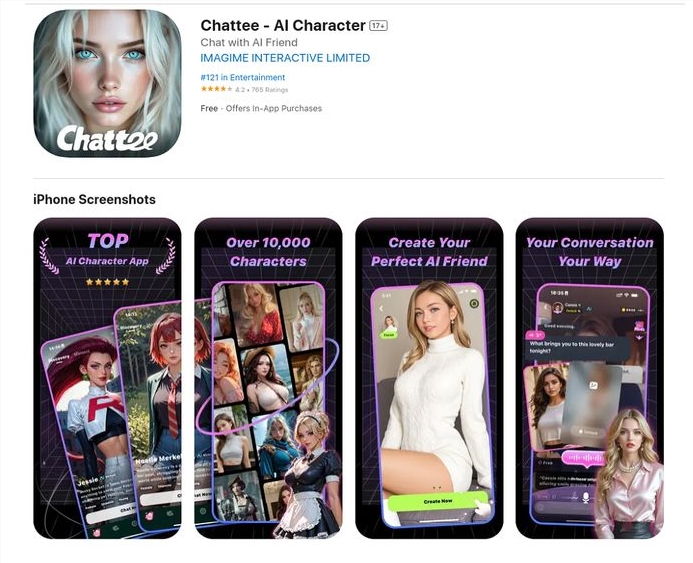

Cybersecurity investigators have uncovered a severe data leak affecting two prominent AI companion applications: Chattee Chat - AI Companion and GiMe Chat - AI Companion. The breach exposed 43 million private messages, 600,000+ media files, and sensitive user data from over 400,000 accounts.

The vulnerability stemmed from an unprotected Kafka broker instance used for message storage. Cybernews researchers found that the broker required no authentication, so anyone who could reach it had unrestricted access to all stored user communications.
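To make "no authentication" concrete, the following is a minimal sketch of how an operator might audit a Kafka broker's properties file for open listeners. The property names (`listeners`, `listener.security.protocol.map`, `ssl.client.auth`, `authorizer.class.name`) are standard Kafka broker settings; the helper functions and the sample configuration are hypothetical and do not come from the affected apps.

```python
# Hypothetical audit helper: flags Kafka broker settings that leave listeners open.
# The sample configuration below is invented for illustration only.

def parse_properties(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def audit_broker(props: dict[str, str]) -> list[str]:
    """Return human-readable findings for listeners that accept unauthenticated clients."""
    findings = []
    # Map listener names to security protocols; an unmapped name is treated
    # as the protocol itself (e.g. a listener named PLAINTEXT).
    protocol_map = {}
    for pair in props.get("listener.security.protocol.map", "").split(","):
        if ":" in pair:
            name, protocol = pair.split(":", 1)
            protocol_map[name.strip().upper()] = protocol.strip().upper()

    # Assumption: a broker with no explicit listeners falls back to PLAINTEXT on 9092.
    for listener in props.get("listeners", "PLAINTEXT://:9092").split(","):
        name = listener.split("://", 1)[0].strip().upper()
        protocol = protocol_map.get(name, name)
        if protocol == "PLAINTEXT":
            findings.append(f"listener {name}: PLAINTEXT (no authentication, no encryption)")
        elif protocol == "SSL" and props.get("ssl.client.auth", "none") != "required":
            findings.append(f"listener {name}: SSL without required client certificates")

    if not props.get("authorizer.class.name"):
        findings.append("no authorizer configured, so ACLs are not enforced")
    return findings


SAMPLE = """
# Invented example of an exposed broker
listeners=PLAINTEXT://0.0.0.0:9092
"""

findings = audit_broker(parse_properties(SAMPLE))
for finding in findings:
    print(finding)
```

A broker that exposes only SASL-authenticated listeners and sets an authorizer would produce no findings from this check, though a real review would also cover network exposure and ACL contents.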

How the Breach Occurred

The exposed data included:

- User IP addresses

- Device identifiers

- Authentication tokens

- Full message histories with AI companions

While no directly identifying information (such as names or addresses) was leaked, cybersecurity experts warn that malicious actors could cross-reference the exposed IP addresses and device IDs with other datasets to identify individuals.

"This represents a perfect storm for potential harassment campaigns," noted Cybernews lead investigator Mark Johnson. "Attackers could use intimate conversation details for blackmail or social engineering attacks."

Financial Risks Emerge

The affected apps boast significant user engagement:

- Ranked #121 in Apple's Entertainment category

- Over 300,000 downloads combined

- Average user sent 107 messages to their AI companion
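The per-user average follows from the figures reported earlier in the article. As a quick check, assuming the "over 400,000 accounts" figure is treated as exactly 400,000:

```python
# Figures as reported in the article; the account count is a lower bound.
total_messages = 43_000_000
accounts = 400_000

avg_messages = total_messages / accounts
print(f"{avg_messages:.1f} messages per account")  # prints "107.5 messages per account"
```

Because the true account count is somewhat above 400,000, the actual mean lands slightly below 107.5, which is consistent with the reported 107.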

The investigation revealed some users spent up to $18,000 on in-app purchases, with total revenue potentially exceeding $1 million. Most alarmingly, leaked authentication tokens could allow hackers to:

- Hijack user accounts

- Drain virtual currency balances

- Make unauthorized purchases
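A common response to leaked authentication tokens is to invalidate every token issued before the leak was closed, forcing users to sign in again. The sketch below illustrates that idea with a hypothetical in-memory `SessionStore`; it is not the apps' actual implementation, and nothing in the report indicates whether the developers took this step.

```python
import secrets
import time


class SessionStore:
    """Hypothetical in-memory session store, for illustration only."""

    def __init__(self):
        self._sessions = {}  # token -> (user_id, issued_at)

    def issue(self, user_id, now=None):
        """Create a random token for user_id and record when it was issued."""
        token = secrets.token_urlsafe(32)
        issued_at = time.time() if now is None else now
        self._sessions[token] = (user_id, issued_at)
        return token

    def is_valid(self, token):
        return token in self._sessions

    def revoke_issued_before(self, cutoff):
        """Drop every token issued before cutoff; return how many were revoked."""
        stale = [t for t, (_, issued) in self._sessions.items() if issued < cutoff]
        for t in stale:
            del self._sessions[t]
        return len(stale)


store = SessionStore()
leaked = store.issue("user-1", now=100.0)   # issued while the broker was exposed
fresh = store.issue("user-1", now=300.0)    # issued after the fix
revoked = store.revoke_issued_before(200.0)
print(revoked, store.is_valid(leaked), store.is_valid(fresh))
```

With the cutoff set to the time the exposure ended, a stolen token stops working even if the attacker still holds a copy.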

Industry Response and Warnings

Following Cybernews' disclosure:

- Developers immediately shut down the vulnerable Kafka instance

- It remains unconfirmed whether bad actors accessed the data beforehand

- Security experts call for stricter regulation of emotional AI apps

The incident highlights growing concerns about data protection in intimate digital spaces. Dr. Emily Chen of Stanford's Digital Ethics Lab warns: "Users share deeply personal thoughts with these companions. A breach isn't just about data - it's about violating psychological safety."

The apps' developers have not yet commented on whether they will notify affected users or provide compensation.

Key Points:

- 🔓 Unprotected server exposed 43M messages and 600,000+ media files

- 💰 High-spending users at risk of financial theft

- 🆔 Device identifiers could enable user identification

- 🤖 Growing calls for emotional AI app regulation