Zhipu's GLM-4.7-Flash Hits 1 Million Downloads in Just Two Weeks

Zhipu's Lightweight AI Model Gains Rapid Traction

Zhipu AI's latest open-source model, GLM-4.7-Flash, has crossed the 1 million download mark on Hugging Face just two weeks after its release. The rapid uptake highlights the AI community's appetite for efficient models that stay small without giving up performance.

Benchmark-Busting Performance

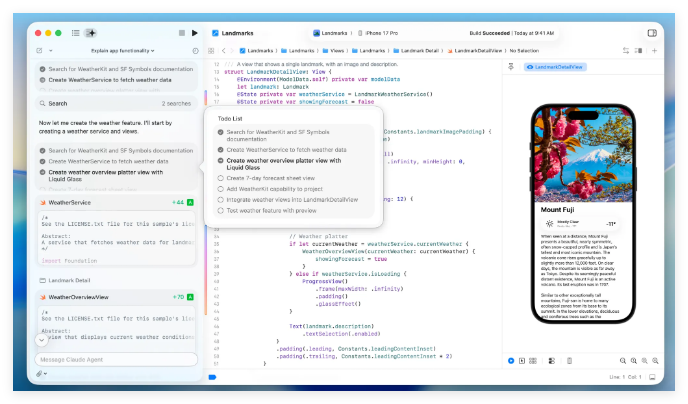

The 30B-A3B hybrid thinking model is not just popular; it also delivers where it counts. Reported benchmark results show GLM-4.7-Flash outperforming comparable models such as gpt-oss-20b and Qwen3-30B-A3B-Thinking-2507 on evaluations including SWE-bench Verified and τ²-Bench. On these results, it currently ranks first among open-source models of similar size.
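For readers unfamiliar with the naming, a label like "30B-A3B" is commonly read as roughly 30 billion total parameters with about 3 billion active per token. The back-of-the-envelope sketch below shows what that would imply for weight storage at common precisions. The parameter counts are nominal figures assumed from the label, not published specifications, and the estimate ignores runtime overhead such as the KV cache and activations.

```python
# Nominal figures assumed from the "30B-A3B" label; not official specifications.
TOTAL_PARAMS = 30e9   # parameters that must be stored
ACTIVE_PARAMS = 3e9   # parameters assumed to be used for each token


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Share of the stored weights that would take part in computing each token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB")
print(f"Active share per token: {active_fraction:.0%}")
```

Under these assumptions the full weights still have to fit in memory, but only about a tenth of them are exercised per token, which is the usual reason models of this design are described as lightweight.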

"We designed GLM-4.7-Flash to give developers the best balance between speed, accuracy and cost," a Zhipu spokesperson told us. "Seeing this level of adoption so quickly confirms we're meeting a real need in the community."

Why Developers Are Flocking to GLM-4.7-Flash

Three factors appear to be driving the model's rapid adoption:

- Lightweight efficiency that doesn't compromise on capability

- Strong results across multiple benchmarks

- Cost-effectiveness that makes advanced AI more accessible

The model's success also reflects growing sophistication among open-source users who increasingly demand professional-grade tools without enterprise price tags.

What This Means for AI Development

GLM-4.7-Flash's milestone suggests we may be entering a new phase of AI democratization, in which high-performance models become available to individual developers and smaller teams. As open-source options continue to close the gap with proprietary systems, innovation across the AI ecosystem could accelerate.

The model is currently available for download on Hugging Face, with documentation and support resources growing alongside its user base.

Key Points:

- Rapid adoption: more than 1 million downloads in the first 14 days

- Proven performance: Outperforms similar-sized open-source models on benchmarks including SWE-bench Verified and τ²-Bench

- Developer-focused: Balances power with practical deployment needs

- Open-source advantage: Makes advanced AI more accessible to all developers