OpenAI Pulls Back the Curtain on GPT-5's Hidden Thought Process

OpenAI Confirms Leaked Details About GPT-5's Reasoning Engine

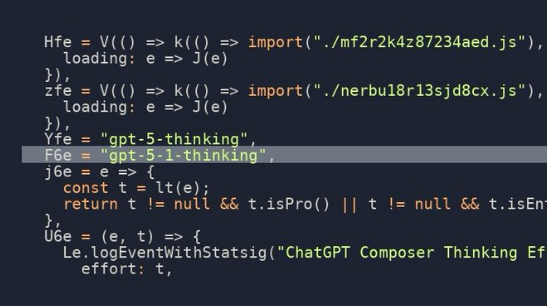

OpenAI has officially acknowledged that recently circulated documents describing GPT-5's inner workings are genuine. The files reveal how the model handles complex reasoning tasks using what developers call a "hidden chain-of-thought" mechanism.

The AI's Secret Reasoning Language

The leaked materials show that GPT-5 Thinking, a specialized version of the model, uses an abstract internal language when solving problems. Take Sudoku puzzles as an example. Instead of working through each step in plain English, the model follows condensed instructions like:

- Evaluate grid constraints

- Simulate filling paths

- Verify conflict thresholds
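
For a concrete picture, the three steps above map loosely onto a conventional backtracking Sudoku solver. The Python sketch below is purely illustrative. It is not drawn from the leaked documents or from OpenAI, the function names (`candidates`, `solve`, `is_valid_solution`) are hypothetical, and nothing here describes how GPT-5 actually reasons internally.

```python
from typing import List, Optional, Set, Tuple

Grid = List[List[int]]  # 9x9 grid; 0 marks an empty cell


def candidates(grid: Grid, r: int, c: int) -> Set[int]:
    """'Evaluate grid constraints': digits not yet used in the row, column, or 3x3 box."""
    used = set(grid[r]) | {grid[i][c] for i in range(9)}
    br, bc = 3 * (r // 3), 3 * (c // 3)
    used |= {grid[i][j] for i in range(br, br + 3) for j in range(bc, bc + 3)}
    return set(range(1, 10)) - used


def find_empty(grid: Grid) -> Optional[Tuple[int, int]]:
    """Return the first empty cell in row-major order, or None if the grid is full."""
    for r in range(9):
        for c in range(9):
            if grid[r][c] == 0:
                return r, c
    return None


def solve(grid: Grid) -> bool:
    """'Simulate filling paths': try each candidate in place and backtrack on dead ends."""
    cell = find_empty(grid)
    if cell is None:
        return True  # no empty cells left, so the grid is complete
    r, c = cell
    for digit in sorted(candidates(grid, r, c)):
        grid[r][c] = digit      # tentatively fill the cell
        if solve(grid):
            return True
        grid[r][c] = 0          # this path led to a conflict; undo and try the next digit
    return False


def is_valid_solution(grid: Grid) -> bool:
    """'Verify conflicts': every row, column, and box must contain 1-9 exactly once."""
    target = set(range(1, 10))
    rows = all(set(row) == target for row in grid)
    cols = all({grid[r][c] for r in range(9)} == target for c in range(9))
    boxes = all(
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)} == target
        for br in (0, 3, 6)
        for bc in (0, 3, 6)
    )
    return rows and cols and boxes
```

Calling `solve(grid)` on a 9x9 list of lists fills it in place and returns `True` when a solution exists. `is_valid_solution(grid)` can then confirm the result independently of the search.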

"This isn't a vulnerability—it's by design," an OpenAI spokesperson explained. "The hidden CoT (Chain of Thought) allows more efficient processing without overwhelming users with intermediate steps."

Why This Matters for AI Development

The disclosure offers unprecedented insight into how cutting-edge language models handle complex tasks:

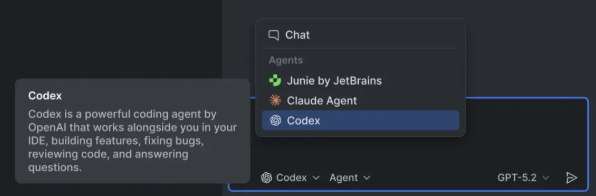

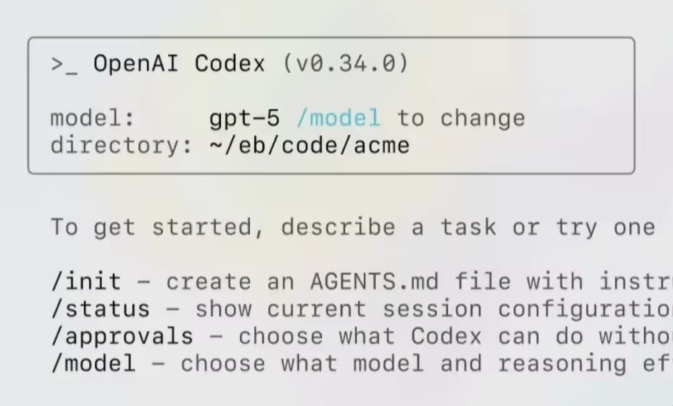

- Enterprise Applications: Early tests show the model can tackle sophisticated programming challenges with minimal prompting

- Competitive Edge: Benchmarks suggest advantages over rival systems like Llama 4 and Cohere v2 in reasoning tasks

- Transparency Balance: OpenAI faces ongoing challenges in revealing enough about AI operations without compromising proprietary technology

"We're walking a tightrope," admitted the spokesperson. "Users deserve understanding, but full transparency could enable misuse or stifle innovation."

Key Points:

- GPT-5 Thinking uses hidden reasoning steps not shown to users

- The approach improves efficiency in coding and logic puzzles

- OpenAI confirmed the leaked documents are genuine internal testing materials

- No security vulnerabilities were exposed by this design feature