New AI Framework Cuts Token Usage by 21% with "Slow-Then-Fast" Thinking

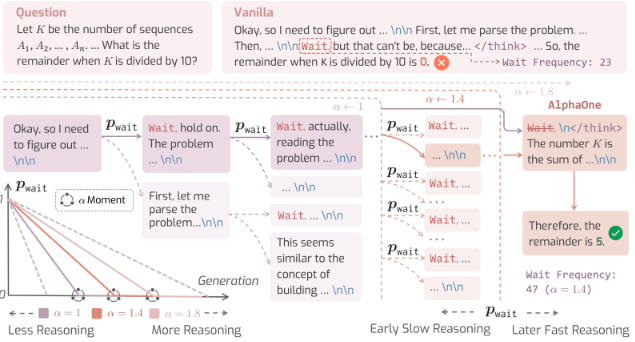

A new artificial intelligence framework called AlphaOne (α1) changes how large language models allocate their reasoning effort. Developed jointly by researchers from the University of Illinois Urbana-Champaign and the University of California, Berkeley, the system gives developers direct control over a model's reasoning process.

Addressing Critical AI Limitations

Current large reasoning models often think inefficiently. Although they incorporate slow, deliberate "System 2" reasoning, they frequently waste resources on simple questions while underperforming on complex ones. Existing remedies rely either on computationally expensive parallel methods or on rigid sequential techniques, and both have had limited success.

How AlphaOne Works Differently

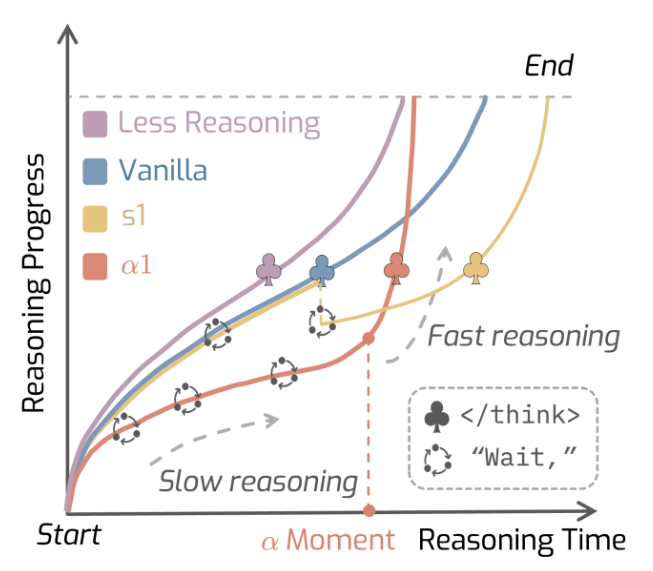

The framework introduces a parameter, α, that acts as a dial for controlling the model's thinking phases. Before a threshold called the "α moment," the system inserts pauses that encourage slow, deliberate reasoning. After this threshold, it forces a switch to a faster processing mode so the model generates its final answer.

Unlike conventional approaches, AlphaOne can be configured for either dense or sparse intervention, meaning the pauses can be inserted frequently or only occasionally. This gives developers finer-grained control over reasoning than those approaches offer.
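
The two-phase schedule described above can be illustrated with a toy sketch. This is not the authors' code, which has not been released yet. The names `alpha_moment`, `plan_interventions`, `base_budget`, and `p_pause` are hypothetical, and two details are assumptions made for illustration: that the α moment is α times a baseline thinking budget measured in generation steps, and that pauses before it are inserted at random with a fixed probability (high for dense intervention, low for sparse).

```python
import random

# Hypothetical action labels, chosen for this illustration only.
PAUSE = "pause"            # nudge the model to keep deliberating
NONE = "none"              # leave the model's output untouched
FORCE_FAST = "force_fast"  # no more pauses; push toward the final answer


def alpha_moment(alpha: float, base_budget: int) -> int:
    """Step index of the assumed 'alpha moment': alpha times a baseline budget."""
    return int(alpha * base_budget)


def plan_interventions(total_steps: int, alpha: float, base_budget: int,
                       p_pause: float, seed: int = 0) -> list[str]:
    """Return one action per generation step.

    Before the alpha moment, a pause is inserted with probability p_pause.
    From the alpha moment onward, every step is marked FORCE_FAST.
    """
    rng = random.Random(seed)  # seeded so the plan is reproducible
    threshold = alpha_moment(alpha, base_budget)
    plan = []
    for step in range(total_steps):
        if step >= threshold:
            plan.append(FORCE_FAST)
        elif rng.random() < p_pause:
            plan.append(PAUSE)
        else:
            plan.append(NONE)
    return plan


if __name__ == "__main__":
    dense = plan_interventions(total_steps=100, alpha=1.4, base_budget=50, p_pause=0.9)
    sparse = plan_interventions(total_steps=100, alpha=1.4, base_budget=50, p_pause=0.1)
    print("dense pauses:", dense.count(PAUSE))
    print("sparse pauses:", sparse.count(PAUSE))
```

In this sketch, raising `alpha` lengthens the slow phase, while `p_pause` sets how dense the interventions are within it. A real integration would act on the model's token stream during decoding rather than on a precomputed plan.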

Impressive Performance Gains

Tests on models ranging from 1.5 billion to 32 billion parameters showed:

- 6.15% average accuracy improvement over baseline methods

- Strong performance on complex, PhD-level problems

- 21% reduction in token usage, significantly lowering computational costs
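
To make the token figure concrete, here is a small arithmetic sketch. The baseline token count and the price per million tokens are made-up inputs, the function names are hypothetical, and the calculation assumes the reported 21% reduction applies uniformly to a given workload, which the article does not claim.

```python
def tokens_after_reduction(baseline_tokens: int, reduction: float = 0.21) -> int:
    """Tokens used after applying a fractional reduction to a baseline count."""
    return round(baseline_tokens * (1 - reduction))


def cost_usd(tokens: int, price_per_million_usd: float) -> float:
    """Cost of generating `tokens` tokens at a flat, hypothetical price."""
    return tokens / 1_000_000 * price_per_million_usd


if __name__ == "__main__":
    baseline = 10_000  # hypothetical tokens per query before the reduction
    reduced = tokens_after_reduction(baseline)
    price = 2.0        # hypothetical USD per million generated tokens
    print(f"tokens per query: {baseline} -> {reduced}")
    print(f"cost per 1,000 queries: ${cost_usd(baseline * 1000, price):.2f} "
          f"-> ${cost_usd(reduced * 1000, price):.2f}")
```

Under these assumed inputs, a query that used 10,000 tokens would use 7,900 tokens, and cost falls in the same proportion when pricing is linear in generated tokens.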

The research revealed a counterintuitive finding: AI models perform better with a "slow-then-fast" reasoning sequence than with the "fast-then-slow" pattern typical of human cognition.

Practical Applications and Availability

The framework shows particular promise for enterprise applications such as complex query answering and code generation. Based on the reported results, companies that adopt AlphaOne could see both improved output quality and lower operating costs.

The research team plans to release the code soon, noting that integration requires minimal configuration changes for most open-source or custom models.

Key Points

- AlphaOne cuts token usage by 21% through controlled reasoning phases

- The framework outperforms existing methods on complex problems while using fewer resources

- Developers gain precise control over model thinking processes via the α parameter

- Enterprise applications stand to benefit from both quality improvements and cost savings

- The research team plans to release the code soon, with integration requiring minimal configuration changes