NeurIPS Conference Rocked by Fake Citation Scandal


In a scandal shaking the artificial intelligence research community, the prestigious NeurIPS conference has been caught up in a widespread case of citation fraud. AI detection firm GPTZero uncovered that 51 accepted papers contained at least 100 fabricated references between them - complete with fictional authors and bogus publication details.

The 'Vibe Citing' Phenomenon

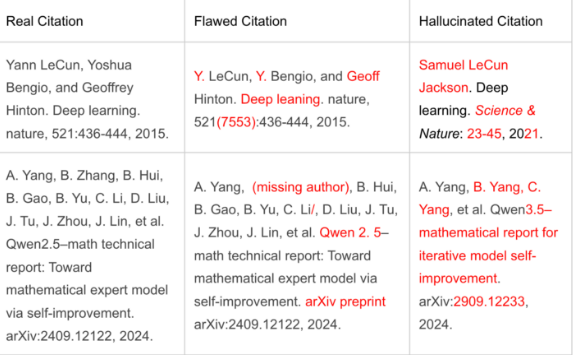

Researchers have dubbed this troubling trend "vibe citing" - where authors include references that look legitimate but are completely fabricated. Some papers listed non-existent authors like "John Doe," while others cited papers with clearly fake arXiv identifiers (such as arXiv:2305.XXXX). These phantom citations slipped past peer review despite their obvious flaws.
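The most blatant of these red flags can be caught mechanically. The following is a minimal sketch, not GPTZero's method or any conference's actual tooling: the function name `citation_red_flags` and the placeholder-name list are hypothetical, and the pattern assumes new-style arXiv identifiers (four digits for year and month, a dot, then four or five digits).

```python
import re

# New-style arXiv identifiers look like arXiv:YYMM.NNNN (2007-2014) or
# arXiv:YYMM.NNNNN (2015 onward), optionally followed by a version such as v2.
ARXIV_ID = re.compile(r"arXiv:\d{2}(0[1-9]|1[0-2])\.\d{4,5}(v\d+)?")

# Hypothetical placeholder names, chosen for illustration only.
PLACEHOLDER_AUTHORS = {"john doe", "jane doe"}


def citation_red_flags(authors, arxiv_id=None):
    """Return a list of human-readable warnings for one reference."""
    flags = []
    for name in authors:
        if name.strip().lower() in PLACEHOLDER_AUTHORS:
            flags.append(f"placeholder author: {name}")
    # fullmatch rejects anything that is not entirely a well-formed identifier.
    if arxiv_id is not None and ARXIV_ID.fullmatch(arxiv_id.strip()) is None:
        flags.append(f"malformed arXiv identifier: {arxiv_id}")
    return flags


print(citation_red_flags(["John Doe"], "arXiv:2305.XXXX"))
print(citation_red_flags(["Ada Lovelace"], "arXiv:2305.12345"))
```

A check like this only catches obvious placeholders. A well-formed identifier can still point to a paper that does not exist or does not say what the citation claims, so it is no substitute for looking the reference up.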

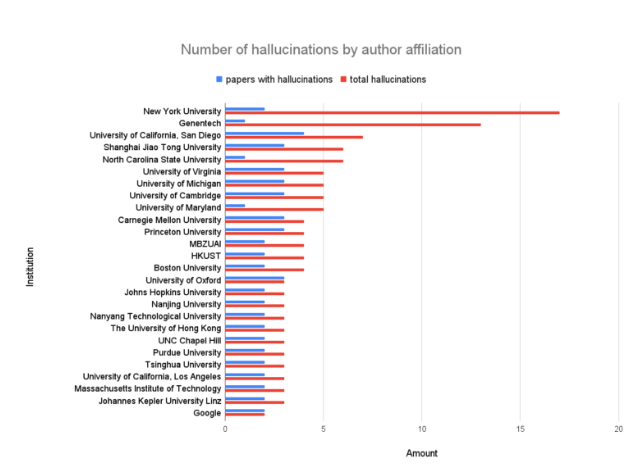

The problem appears concentrated among papers from top-tier institutions, including New York University and tech giants like Google. "It's particularly concerning because these are papers we'd expect to meet the highest standards," said a source familiar with the investigation.

A System Under Pressure

The scandal reveals deeper cracks in academic publishing's foundation. NeurIPS submissions have skyrocketed from 9,467 in 2020 to 21,575 this year, an increase of roughly 128% (about 2.3 times the 2020 total). This deluge forced organizers to recruit many inexperienced reviewers just to handle the volume.
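The growth figure is easy to check from the two submission counts quoted above. A small sketch of the arithmetic (the helper name `percent_increase` is just for illustration):

```python
def percent_increase(old: int, new: int) -> float:
    """Percentage growth from old to new, relative to old."""
    return (new - old) / old * 100


submissions_2020 = 9_467
submissions_now = 21_575

growth = percent_increase(submissions_2020, submissions_now)
ratio = submissions_now / submissions_2020

print(f"{growth:.1f}% increase, {ratio:.2f}x the 2020 total")
# prints: 127.9% increase, 2.28x the 2020 total
```

Note the difference between the two ways of stating it: the new total is about 228% of the old one, which corresponds to an increase of about 128%.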

Some reviewers reportedly cut corners by using AI tools instead of carefully reading submissions. "When you're expected to review dozens of complex papers in weeks, the temptation to take shortcuts becomes overwhelming," explained one anonymous reviewer.

Consequences and Reforms

NeurIPS has responded by declaring fabricated citations grounds for paper rejection or withdrawal. But the damage to trust may be harder to repair in a field where citations serve as academic currency.

The incident raises tough questions about how to maintain quality control as AI research output grows rapidly. With preprint servers and conferences flooded with submissions, traditional peer review systems appear increasingly strained.

Key Points:

- 51 papers at NeurIPS contained 100+ fake citations

- Fabrications included fake authors and invalid publication IDs

- Submissions more than doubled since 2020, overwhelming reviewers

- Conference organizers now treating fake citations as grounds for rejection

- Scandal highlights growing pains in AI research publishing