Mistral AI Launches Open-Source Model Magistral Small 1.2

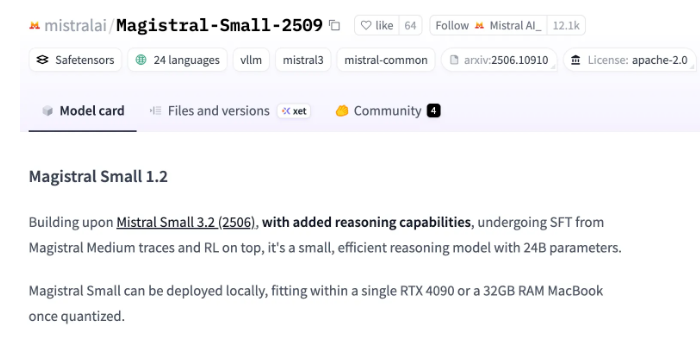

Paris-based Mistral AI has released Magistral Small 1.2, the newest version of its open-source reasoning model. The 24-billion-parameter model is published under the Apache 2.0 license, and the release adds to Mistral's growing presence in a highly competitive AI sector.

Enhanced Capabilities

The updated version introduces several notable changes:

- A 128k-token context window for handling longer inputs

- Multilingual support and visual input processing

- New [THINK] and [/THINK] special tokens that wrap the model's reasoning trace, keeping it distinguishable from the final answer

- New visual encoder for improved image-text integration
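Because the reasoning trace is wrapped in special tokens, application code can separate it from the final answer with plain string handling. The Python sketch below assumes the decoded output contains the literal strings `[THINK]` and `[/THINK]` (whether special tokens survive decoding depends on your inference stack's detokenization settings) and at most one reasoning block. The `split_reasoning` helper and `ParsedOutput` type are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

# Assumption: decoded text keeps the special tokens as these literal strings.
THINK_OPEN = "[THINK]"
THINK_CLOSE = "[/THINK]"


@dataclass
class ParsedOutput:
    reasoning: str  # text inside [THINK]...[/THINK], or "" if absent
    answer: str     # everything outside the reasoning block


def split_reasoning(text: str) -> ParsedOutput:
    """Separate a single [THINK]...[/THINK] block from the final answer.

    If no opening tag is found, the whole text is treated as the answer.
    If the closing tag is missing (e.g. generation was cut off), everything
    after [THINK] is treated as reasoning and only the text before it is
    kept as the answer.
    """
    start = text.find(THINK_OPEN)
    if start == -1:
        return ParsedOutput(reasoning="", answer=text.strip())

    body_start = start + len(THINK_OPEN)
    end = text.find(THINK_CLOSE, body_start)
    if end == -1:
        return ParsedOutput(
            reasoning=text[body_start:].strip(),
            answer=text[:start].strip(),
        )

    reasoning = text[body_start:end].strip()
    # Join any text before the block with the text after the closing tag.
    answer = (text[:start] + text[end + len(THINK_CLOSE):]).strip()
    return ParsedOutput(reasoning=reasoning, answer=answer)


if __name__ == "__main__":
    out = split_reasoning("[THINK]2 + 2 is 4.[/THINK]The answer is 4.")
    print("reasoning:", out.reasoning)
    print("answer:", out.answer)
```

Keeping the two parts apart lets an application log or hide the reasoning while showing users only the answer.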

Developer-Friendly Features

Magistral Small 1.2 ships with multiple pre-configured reasoning templates and supports popular frameworks including:

- vLLM

- Transformers

- llama.cpp

The model is also available in GGUF quantized versions, and Unsloth fine-tuning examples are provided, which should shorten setup time for development teams.
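Quantized builds matter mainly because of simple arithmetic: weight storage scales with parameter count times bits per weight. The Python sketch below estimates weight-only memory for a nominal 24B-parameter model at a few bit widths. The `weight_memory_gb` function is a hypothetical helper. Real GGUF formats also store per-block scale data, and inference needs additional memory for the KV cache and activations, so these figures are lower bounds rather than hardware requirements.

```python
def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate storage for the weights alone, in decimal GB (1e9 bytes).

    Ignores KV cache, activations, runtime overhead, and the extra
    per-block metadata that real quantization formats carry.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter count from the announcement; treat as approximate.
PARAMS = 24_000_000_000

if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

At 16, 8, and 4 bits this works out to roughly 48, 24, and 12 GB of weights. That reduction is why 4-bit variants can fit on a single high-memory consumer GPU or a well-equipped laptop.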

Enterprise Solutions Launching Simultaneously

Alongside the open model, the company has upgraded its enterprise offering to Magistral Medium 1.2, which continues to power conversational services on its Le Chat platform. Mistral has also made API access available on La Plateforme, expanding the range of possible commercial applications.

Key Points:

- Released under the Apache 2.0 license, which permits free use, modification, and commercial deployment

- [THINK] special tokens mark off the model's reasoning trace from its final answer

- A new visual encoder strengthens the model's image-and-text (multimodal) capabilities

- Support for vLLM, Transformers, and llama.cpp lowers the barrier to adoption

- The simultaneous Magistral Medium 1.2 upgrade targets enterprise and commercial users