Meta Enhances Parental Controls for AI Chatbots

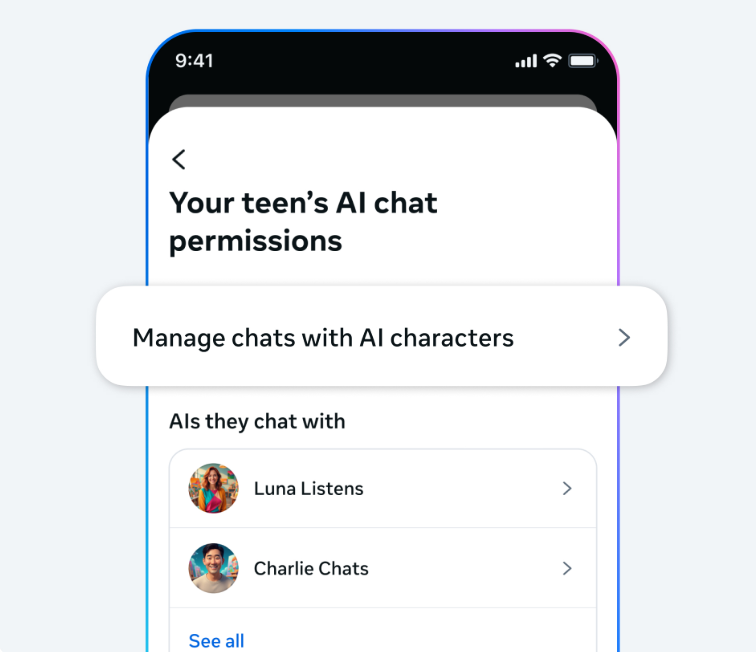

Meta has announced upgrades to its parental controls for AI chatbots, aimed at improving safety for underage users. A new "Master Switch" tool will let parents restrict their children's access to specific AI chatbot characters on Instagram and Facebook. The move responds to growing public concern about AI safety and is intended to make the digital environment more secure for minors.

New Features for Parental Oversight

Alongside the "Master Switch," Meta is introducing an "Insights" feature that shows parents the topics their children discuss with AI chatbots, giving them a clearer picture of these online interactions. The company has also committed to preventing its AI chatbots from discussing sensitive subjects such as self-harm, eating disorders, relationships, and sexual topics with teenagers. Instead, the chatbots will steer toward age-appropriate themes such as schoolwork and sports.

Rollout and Background

The parental control tools are slated for release in early 2026, launching first in the United States, the UK, Canada, and Australia. Meta's decision follows several incidents this year involving inappropriate interactions between AI chatbots and minors. For example, reports surfaced that a chatbot persona presented as "John Cena" had made unsuitable remarks, prompting public outcry.

To further improve transparency and safety, Meta has updated its content review mechanisms and adopted PG-13 movie ratings as a guideline for content shown to teens. These measures are part of broader efforts to keep AI interactions appropriate for young users.

Commitment to Ongoing Improvements

Meta has said it will keep refining how teens' interactions with AI are supervised. The company aims to give parents better tools for managing their children's digital experiences and, through these safeguards, to create a safer online space for minors.

Key Points:

- 📅 Master Switch Tool: Parents can block their children's access to specific AI chatbot characters.

- 👁️ Insights Feature: Enables parents to monitor conversation topics between children and AI.

- 🔒 Content Restrictions: AI chatbots will not discuss harmful topics such as self-harm and eating disorders with teenagers.