Meituan's New AI Model Packs a Punch with Smart Parameter Trick

Rethinking How AI Models Grow

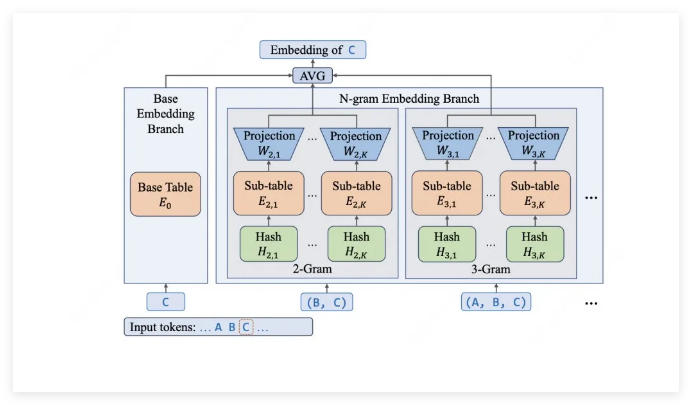

Many large AI models grow by adding more "experts" - specialized sub-networks, with a router choosing which few of them process each piece of input. But Meituan's LongCat team noticed this approach hits diminishing returns fast. Their solution? A workaround they call "Embedding Expansion", which puts extra parameters into the embedding layer instead of into ever more experts, so every parameter works harder.

The Numbers Behind the Magic

At first glance, LongCat-Flash-Lite seems massive - 68.5 billion parameters total. But here's where it gets interesting: during actual use, only 2.9 to 4.5 billion parameters activate for each token. That's like having a sports car that only uses the horsepower it needs for each stretch of road.
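Running only a fraction of the weights for each token is the standard mixture-of-experts (MoE) pattern. Here, 2.9B to 4.5B out of 68.5B works out to roughly 4% to 7% of the total. The sketch below is a generic top-k router in plain Python, not Meituan's routing code. The expert count, `k`, and the names `moe_forward` and `make_expert` are illustrative assumptions. It simply shows that only the selected experts are ever called.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, router_weights, k=2):
    """Generic top-k MoE layer (illustrative, not LongCat's router)."""
    # One router score per expert: dot product of x with that expert's router row.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])  # mixing weights for the chosen experts
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only the k selected experts do any computation
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top

calls = []  # records which experts actually ran

def make_expert(i):
    # Hypothetical toy expert: scales its input by (i + 1).
    def expert(x):
        calls.append(i)
        return [(i + 1) * xi for xi in x]
    return expert

random.seed(0)
dim, n_experts = 4, 8
experts = [make_expert(i) for i in range(n_experts)]
router = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(n_experts)]
out, chosen = moe_forward([0.5, -1.0, 0.25, 2.0], experts, router, k=2)
print(len(calls), "of", n_experts, "experts ran:", chosen)
```

A fixed `k` like this activates the same amount of compute for every token. The article's variable 2.9B-to-4.5B range suggests LongCat's design can vary that amount per token, which this sketch does not model.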

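The secret sauce? Over 30 billion parameters are dedicated to an N-gram embedding layer. Rather than giving each token a single vector, it also looks up vectors for short runs of the tokens just before it, so the model picks up local context before any heavy computation starts. That pays off on tightly structured text such as programming commands and technical jargon.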
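The article doesn't spell out Meituan's exact design. A common way to build an n-gram embedding is to hash each short run of token IDs into a large table and add the looked-up vector to the ordinary token embedding. The toy version below assumes exactly that. The class name `NGramEmbedding`, the polynomial hash, and the table sizes are hypothetical choices for illustration.

```python
import random

class NGramEmbedding:
    """Toy hashed n-gram embedding (an assumed design, not LongCat's code)."""

    def __init__(self, vocab_size, table_size, dim, max_n=3, seed=0):
        rng = random.Random(seed)
        self.max_n = max_n
        self.table_size = table_size
        # Ordinary per-token embedding table.
        self.token_table = [[rng.gauss(0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]
        # One hashed table per n-gram length (2-grams, 3-grams, ...).
        self.ngram_tables = {
            n: [[rng.gauss(0, 0.02) for _ in range(dim)] for _ in range(table_size)]
            for n in range(2, max_n + 1)
        }

    def _bucket(self, ngram):
        # Deterministic polynomial hash of the token IDs into a table slot.
        h = 0
        for t in ngram:
            h = (h * 1_000_003 + t) % self.table_size
        return h

    def embed(self, token_ids):
        out = []
        for pos, tok in enumerate(token_ids):
            vec = list(self.token_table[tok])
            for n in range(2, self.max_n + 1):
                if pos + 1 >= n:  # enough preceding tokens for an n-gram ending here
                    ngram = tuple(token_ids[pos + 1 - n: pos + 1])
                    row = self.ngram_tables[n][self._bucket(ngram)]
                    vec = [v + r for v, r in zip(vec, row)]
            out.append(vec)
        return out

emb = NGramEmbedding(vocab_size=100, table_size=1_000, dim=8)
vecs = emb.embed([5, 17, 42, 17])
print(len(vecs), len(vecs[0]))  # 4 8
```

Token 17 appears twice but gets two different vectors, because the tokens in front of it differ. Note that the whole layer is table lookups and additions with no matrix multiplications. That is why the text describes it as closer to a dictionary lookup than heavy computation.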

Engineering for Real-World Speed

All this clever architecture wouldn't mean much if the model crawled along. Meituan's engineers made sure that didn't happen with three key optimizations:

- Smart Parameter Management: Nearly half the model's parameters sit in its embedding layer, and reading from it works more like a quick dictionary lookup than heavy computation.

- Low-Level Engineering Tricks: They built a specialized cache (think of it as short-term memory) and fused several operations into single kernels to cut down on processing delays.

- Predictive Processing: The model drafts several likely next tokens ahead of time and then checks them all at once (a technique known as speculative decoding), a bit like a chess player thinking several moves ahead.
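The article doesn't say how Meituan implements its predictive step. The textbook version is greedy speculative decoding, sketched below under that assumption. A cheap draft model proposes `k` tokens, the large target model checks them, and the longest agreeing prefix is kept. The functions `target_next` and `draft_next` are hypothetical toy models. In a real system each verification round is one batched forward pass of the large model.

```python
def speculative_decode(target_next, draft_next, prompt, max_new, k=4):
    """Greedy speculative decoding with toy next-token functions.

    Returns the generated tokens and the number of verification rounds.
    """
    seq = list(prompt)
    rounds = 0
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens one after another.
        draft = []
        for _ in range(k):
            draft.append(draft_next(seq + draft))
        # 2. The target model verifies the proposals (one batched pass in practice).
        rounds += 1
        accepted = []
        for tok in draft:
            expected = target_next(seq + accepted)
            if tok == expected:
                accepted.append(tok)
            else:
                accepted.append(expected)  # replace the first wrong guess, then stop
                break
        else:
            # Every guess was right: the verification pass also yields one bonus token.
            accepted.append(target_next(seq + accepted))
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + max_new], rounds

def target_next(seq):
    return (seq[-1] + 3) % 10

def draft_next(seq):
    # Agrees with the target except right after token 7.
    return 5 if seq[-1] == 7 else (seq[-1] + 3) % 10

out, rounds = speculative_decode(target_next, draft_next, [1], max_new=12)
print(out, rounds)  # 12 tokens from 3 verification rounds
```

The output is identical to running the target model alone, one token at a time. The savings come from needing 3 large-model rounds instead of 12.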

The payoff? Blazing speeds of 500-700 tokens per second (that's several paragraphs of text every second) and a context window of up to 256,000 tokens - enough to take in lengthy reports or sizable codebases in one go.
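To put those speeds in concrete terms, here is a back-of-the-envelope calculation. It assumes the quoted rate is a steady decoding speed for a single response and ignores the time spent reading the prompt. The 1,000-token reply length and the helper `generation_seconds` are illustrative.

```python
def generation_seconds(num_tokens, tokens_per_second):
    """Wall-clock time to emit num_tokens at a steady decoding rate."""
    return num_tokens / tokens_per_second

for rate in (500, 700):
    print(rate, round(generation_seconds(1_000, rate), 2))
# 500 2.0
# 700 1.43
```

By the common rule of thumb of about 0.75 English words per token, a 1,000-token answer is roughly 750 words, delivered in about one and a half to two seconds.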

Benchmark Buster

When put through its paces, LongCat-Flash-Lite posted strong results:

- Specialized Tasks: Outperformed rivals in telecom, retail, and aviation scenarios on industry-standard tests.

- Coding Prowess: Solved over half the problems in SWE-Bench (a tough benchmark built from real GitHub issues) and crushed terminal command tests with a score nearly double some competitors'.

- General Smarts: Held its own against Google's Gemini 2.5 Flash-Lite on broad knowledge tests and posted strong scores on advanced math problems.

The best part? Meituan has open-sourced everything - the model itself, detailed technical papers, even their custom inference engine. Developers can try it out today with a generous daily free allowance of 50 million tokens.

Key Points:

- Meituan challenges conventional AI scaling with innovative "Embedding Expansion"

- Model activates only about 2.9B to 4.5B of its 68.5B parameters per token for efficiency

- Excels at technical domains like programming while maintaining broad competence

- Open-source release includes weights, research papers, and optimized inference tools