Kunlun Wanwei's Open-Source Video AI Takes Creativity to New Heights

Kunlun Wanwei Breaks New Ground With SkyReels-V3

Chinese artificial intelligence company Kunlun Wanwei made waves this week with the release of SkyReels-V3, its latest open-source video generation model. What sets this technology apart? It packs three creative tools into a single framework: image-to-video generation, video extension, and audio-driven virtual avatars.

Where Images Come to Life

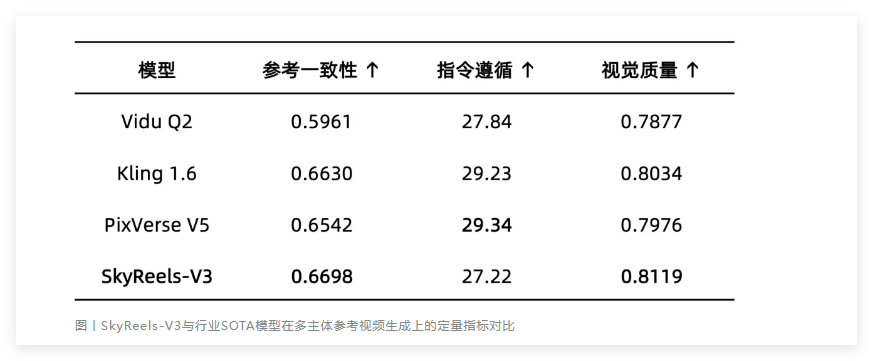

The image-to-video (I2V) function isn't just about making pictures move; it's about preserving their essence. Feed SkyReels-V3 up to four reference images, and it maintains remarkable fidelity to the original subjects' features and compositions. Independent evaluations suggest it outperforms popular commercial alternatives such as Vidu Q2 and Kling 1.6 in both reference consistency and visual quality.

From Seconds to Stories

Ever watched an AI-generated video that felt... incomplete? Kunlun Wanwei's engineers tackled this head-on with their video extension feature. Rather than simply stretching clips longer, the system introduces professional film techniques—cut-ins, perspective shifts, even narrative transitions—transforming basic animations into coherent visual stories.

"We're moving beyond technical demonstrations," explains Dr. Liang Wei, lead researcher at Skywork AI, Kunlun Wanwei's AI unit. "Now creators can focus on storytelling while our model handles the cinematography."

Virtual Avatars That Actually Listen

The audio-driven avatar module addresses one of the most uncanny shortcomings of digital humans: mismatched lip movements. Through precise audio-visual synchronization, SkyReels-V3 generates remarkably natural speech animations capable of sustaining minute-long dialogues, a boon for educators and content creators alike.

Open Access Philosophy

In a surprising move for such advanced technology, Kunlun Wanwei has made SkyReels-V3 freely available on GitHub alongside limited-time API access. "Democratizing creative tools aligns with our AGI vision," commented CEO Fang Han during the launch event.

The tech community has responded enthusiastically since Tuesday's release, with developers already experimenting with novel applications from educational content to indie filmmaking.

Key Points:

- Three-in-one architecture combining image animation, video extension and virtual avatars

- Reportedly outperforms commercial rivals such as Vidu Q2 and Kling 1.6 in reference consistency testing

- Professional-grade transitions elevate basic animations into narratives

- Currently free via its GitHub repository and limited-time API access

- Part of Kunlun Wanwei's broader "All in AGI" strategic push