Google Unveils AI-Powered Search with Deep Learning Features

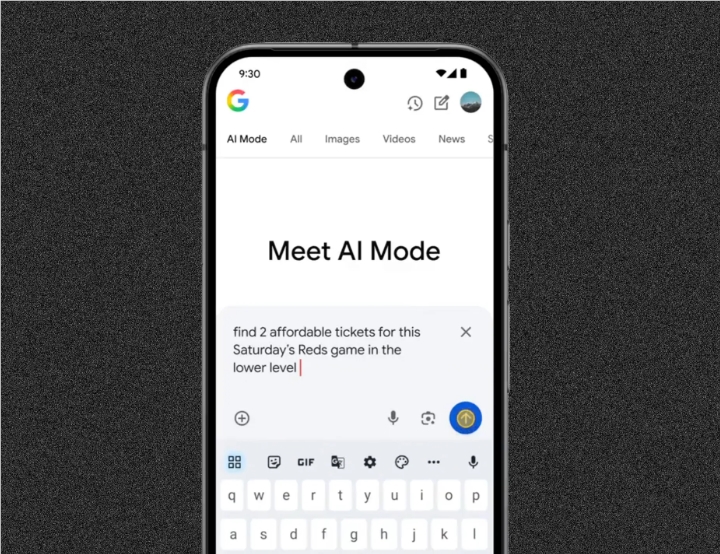

At the Google I/O Developer Conference on May 20, 2025, the tech giant unveiled its most significant search innovation in years: AI Mode, now available to all U.S. users. Powered by the Gemini 2.0 model, the overhaul replaces the familiar "ten blue links" with an intelligent assistant that understands complex queries submitted as text, voice, or images.

A New Era of Conversational Search

The system processes multi-part questions like "compare sleep tracking in smart rings versus smartwatches" by deploying parallel searches across Google's knowledge graph and real-time shopping data. Users can now snap a photo of clothing to find purchase options or similar styles, a feature made possible by Google Lens integration.
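The parallel-search idea described above can be illustrated with a minimal sketch. It assumes nothing about Google's actual implementation: the two backend functions, the `split_query` helper, and the string-based decomposition are all hypothetical stand-ins chosen only to show one query being split into subqueries that are dispatched concurrently and then merged.

```python
import asyncio

# Hypothetical stand-ins for real backends. The article names the knowledge
# graph and shopping data as sources but describes no API for either.
async def search_knowledge_graph(subquery: str) -> list[str]:
    await asyncio.sleep(0)  # placeholder for network latency
    return [f"kg: {subquery}"]

async def search_shopping(subquery: str) -> list[str]:
    await asyncio.sleep(0)  # placeholder for network latency
    return [f"shop: {subquery}"]

def split_query(query: str) -> list[str]:
    """Naively split 'compare X in A versus B' into one subquery per item."""
    topic, _, items = query.removeprefix("compare ").partition(" in ")
    if not items:
        return [query]  # nothing to decompose; search the query as-is
    return [f"{topic} in {item.strip()}" for item in items.split(" versus ")]

async def fan_out(query: str) -> list[str]:
    """Run every subquery against every backend concurrently, then merge."""
    backends = (search_knowledge_graph, search_shopping)
    tasks = [backend(sq) for sq in split_query(query) for backend in backends]
    batches = await asyncio.gather(*tasks)  # results keep task order
    return [hit for batch in batches for hit in batch]

if __name__ == "__main__":
    question = "compare sleep tracking in smart rings versus smartwatches"
    for hit in asyncio.run(fan_out(question)):
        print(hit)
```

A production system would presumably use a language model rather than string splitting to decompose the question, and would rank and summarize the merged hits instead of simply concatenating them.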

Deep Search Goes Beyond Surface Results

For research-intensive queries, the new Deep Search function automatically runs hundreds of searches to compile expert-level reports complete with citations. Meanwhile, Project Mariner's agentic capabilities allow the AI to perform multi-step tasks on a user's behalf, from finding concert tickets within budget to completing purchases via Google Pay.

"We're seeing query lengths double compared to traditional searches," revealed a Google spokesperson, noting a 10% increase in usage frequency for complex questions. Partners like Ticketmaster and StubHub already support these transactional features, with more service integrations planned.

Personalization Raises Privacy Questions

The personalization features are optional and, with user consent, draw on search history and connected Google services to tailor results. A search for "Nashville weekend food events" might prioritize outdoor dining options if that aligns with past preferences. A new sidebar helps users track ongoing research for trips or major purchases.

As AI Mode rolls out universally without subscription fees through the Google app (iOS/Android), content creators voice concerns about declining website traffic. Google maintains it hasn't observed significant drops but acknowledges ongoing adjustments to balance user benefits with ecosystem health.

The launch signals a fundamental shift from information retrieval to proactive assistance—one that could redefine how billions interact with knowledge online.

Key Points

- AI Mode combines Gemini 2.0's reasoning with multi-modal input (text/voice/images)

- Deep Search automates intensive research with cited reports

- Agentic functions enable ticket purchases and reservations

- Personalization uses opt-in data from Google services

- Available free to all U.S. users via mobile apps