DoorDash Driver Busted Using AI-Generated Photos for Fake Deliveries

Delivery Driver Caught Using AI to Fake Orders

In what appears to be a first for the gig economy, DoorDash has permanently banned a driver accused of using artificial intelligence to fabricate proof of delivery. The incident came to light when customers noticed telltale signs of digital manipulation in their delivery confirmation photos.

The Smoking Gun: Impossible Porch Photos

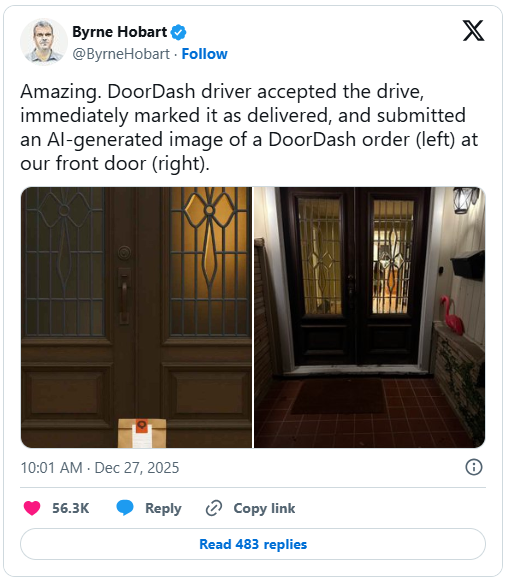

Austin resident Byrne Hobart grew suspicious last December when his DoorDash order was marked "delivered" unusually quickly. The accompanying photo showed his food on what appeared to be his porch, except that crucial details didn't add up.

"The lighting was all wrong," Hobart explained on the social media platform X. "The proportions looked unnatural, and the texture of my actual porch didn't match the image at all." His post comparing the AI-generated image with his real porch quickly went viral.

The plot thickened when a second Austin resident reported similar discrepancies in deliveries from an account with the same display name.

How the Scheme Worked

Industry experts speculate that the driver may have obtained historical delivery photos through compromised accounts or devices. These authentic images of customers' homes could then have served as templates, with AI tools such as Sora or Runway used to superimpose fake food orders.

Although technically simple, the method exposed gaps in platforms' ability to detect synthetic images during automated review.

DoorDash Takes Action

The company responded swiftly once the reports gained traction online. A spokesperson confirmed:

- Permanent deactivation of the driver's account

- Full refunds issued to affected customers

- Ongoing enhancements to fraud detection systems combining AI and human review

The incident represents one of the first documented cases where generative AI has been weaponized against gig economy platforms.

Bigger Than One Bad Apple

The implications extend far beyond a single fraudulent driver:

- Trust erosion: Digital proofs that were once reliable may no longer suffice

- Verification arms race: Platforms may need biometric checks or video confirmations

- Industry-wide exposure: Similar vulnerabilities likely exist in other service apps

The case serves as a wake-up call about how easily generative AI can undermine digital trust systems built over decades.

Key Points:

- DoorDash bans a driver in what may be the first known case of AI-assisted delivery fraud

- Driver allegedly used historical photos and AI tools to fake deliveries

- Platform responded with permanent ban and refunds

- Incident highlights growing challenge of verifying digital proofs

- Future solutions may require biometric verification or video confirmation