ByteDance Unveils Sa2VA: Merging LLaVA and SAM-2 for AI-Powered Video Segmentation

ByteDance Introduces Sa2VA: A Breakthrough in Multimodal AI Segmentation

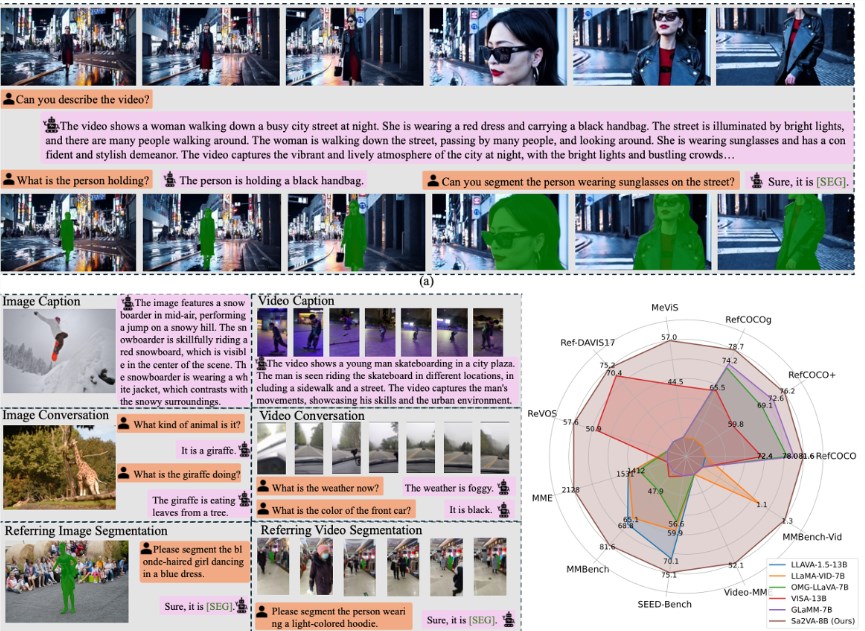

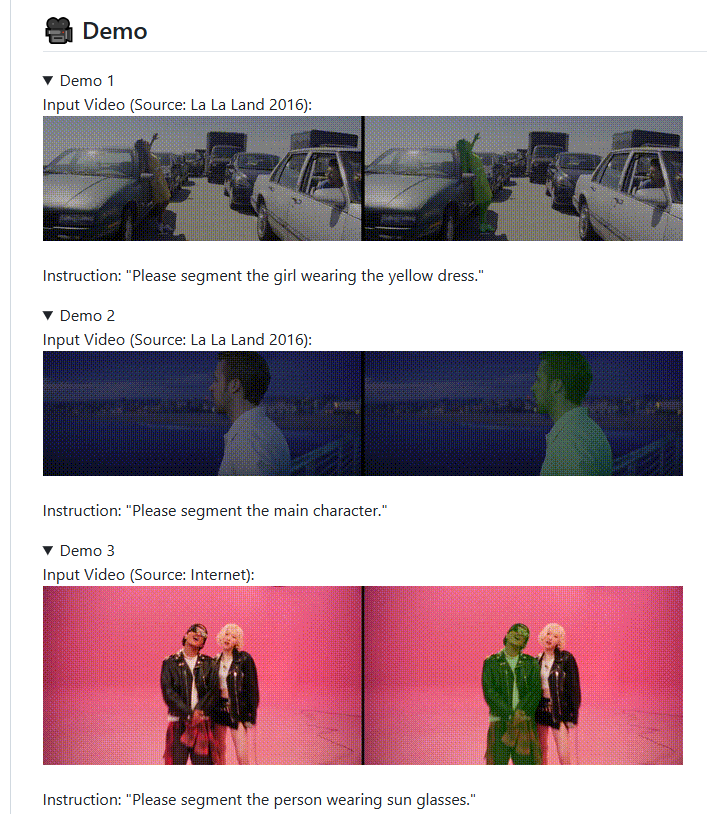

In a significant leap forward for artificial intelligence technology, ByteDance has partnered with academic researchers to develop Sa2VA, a novel model that merges the strengths of two powerful AI systems: LLaVA (Large Language and Vision Assistant) and SAM-2 (Segment Anything Model). This innovative combination creates a multimodal solution capable of sophisticated video understanding and precise object segmentation.

Bridging Two AI Powerhouses

The new model addresses critical limitations in existing technologies. LLaVA, while exceptional at macro-level video storytelling and content comprehension, struggles with detailed execution tasks. Conversely, SAM-2 excels at pixel-perfect image segmentation but lacks language processing capabilities. Sa2VA's architecture effectively bridges this gap through an innovative "code" system that facilitates seamless communication between the two components.

"Think of Sa2VA as having dual processors," explains Dr. Li Xiang, lead researcher on the project. "One module specializes in language understanding and dialogue processing, while its counterpart handles precise video segmentation and object tracking."

Technical Innovation Behind Sa2VA

The model operates through an elegant workflow:

- Users provide natural language instructions

- The LLaVA component interprets these commands

- Specialized instruction tokens are generated

- SAM-2 receives these tokens to execute precise segmentation

- Continuous feedback improves future performance

The research team implemented multi-task joint training to enhance Sa2VA's capabilities across various domains. Initial tests demonstrate remarkable performance, particularly in:

- Video referential segmentation

- Real-time object tracking

- Complex scene understanding

- Dynamic video processing

Open-Source Commitment and Future Applications

ByteDance has made multiple versions of Sa2VA publicly available alongside comprehensive training tools:

- Project homepage: https://lxtgh.github.io/project/sa2va/

- GitHub repository: https://github.com/bytedance/Sa2VA

This open approach aims to accelerate development in multimodal AI applications across industries including:

- Autonomous vehicles

- Medical imaging

- Content moderation

- Augmented reality

The release follows ByteDance's pattern of contributing to open-source AI development while maintaining proprietary enhancements for its commercial products like TikTok.

Key Points:

- Multimodal breakthrough: Sa2VA combines LLaVA's language understanding with SAM-2's segmentation precision.

- Real-world performance: Excels in complex video analysis tasks including dynamic object tracking.

- Open ecosystem: Publicly available models encourage widespread research and application development.

- Future potential: Technology applicable across numerous industries requiring advanced visual analysis.