Apple's STARFlow-V Takes a Radical Approach to Stable Video Generation

Apple Bets on Normalizing Flow for Next-Gen Video AI

In a surprising departure from industry trends, Apple has introduced STARFlow-V, a video generation model that completely bypasses the diffusion technology powering most competitors. Instead, it relies on normalizing flow - a mathematical approach that directly transforms random noise into coherent video frames.

Why Normalizing Flow Matters

While diffusion models like those in Sora or Runway gradually refine videos through multiple noisy iterations, STARFlow-V takes a more direct route. Imagine teaching someone to paint by showing completed masterpieces rather than having them erase and redraw repeatedly - that's essentially how this technology differs.

The benefits are tangible:

- Training happens in one go, eliminating the need for countless small adjustments

- Generation is nearly instantaneous after training completes

- Fewer errors creep in without iterative processing steps

Apple claims STARFlow-V matches diffusion models in quality while being about 15 times faster at producing five-second clips than its initial prototypes.

Solving the Long Video Puzzle

The real breakthrough comes in handling longer sequences. Most AI video tools struggle beyond a few seconds as errors compound frame by frame. STARFlow-V tackles this with an innovative dual architecture:

- One system maintains consistent motion across frames

- Another polishes details within individual frames

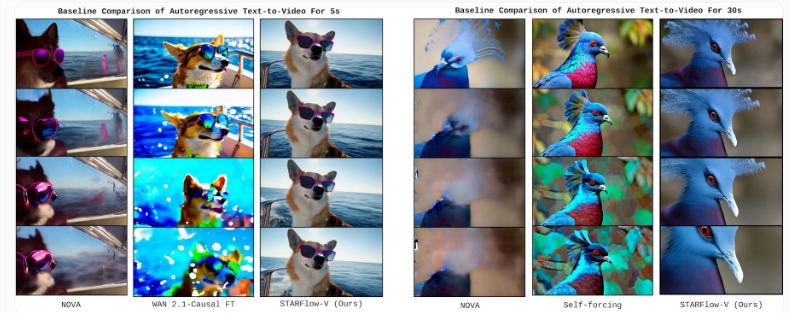

The result? Stable 30-second demonstrations where competing models start showing blur or distortion within seconds.

Capabilities and Limitations

The model handles multiple tasks out of the box:

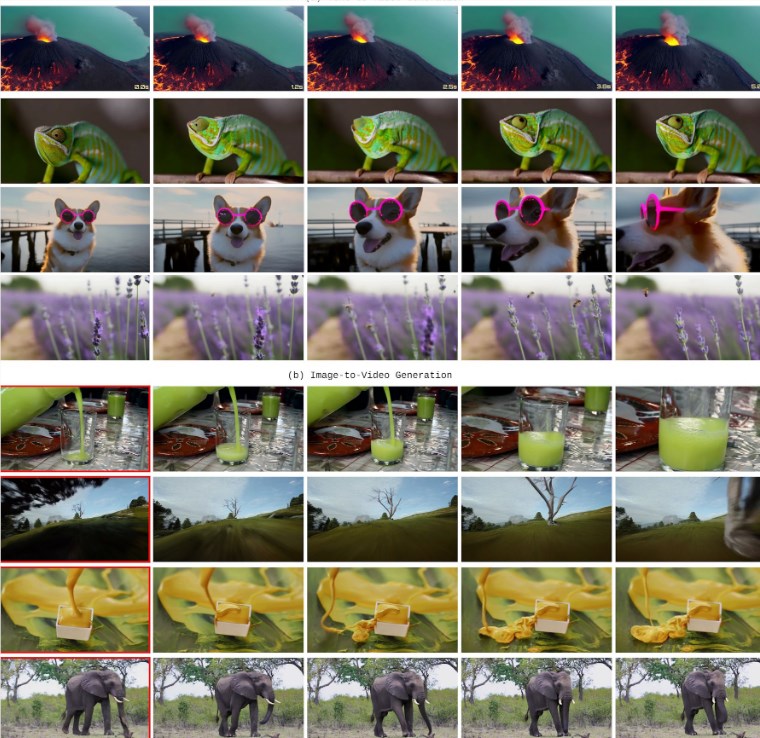

- Creating videos from text prompts

- Animating still images

- Editing existing footage by adding or removing objects

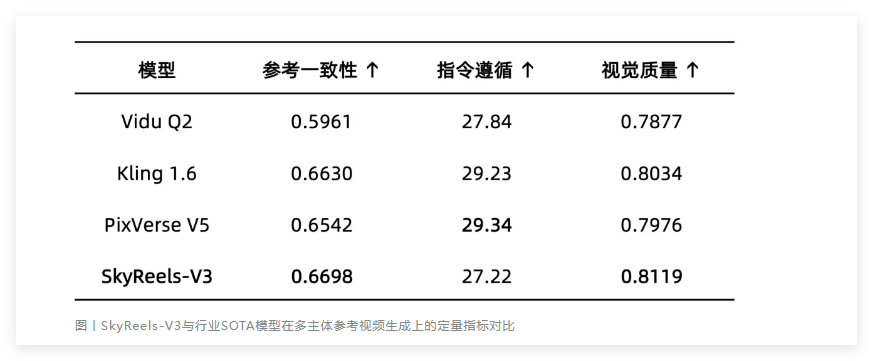

Benchmark tests reveal STARFlow-V scoring 79.7 points on VBench - respectable but trailing leaders like Veo3 (85.06). However, it significantly outperforms other autoregressive models, particularly in rendering spatial relationships and human figures realistically.

The current version isn't perfect though:

- Resolution tops out at modest 640×480 pixels

- Standard GPUs can't handle real-time processing yet

- Physical simulations sometimes glitch (think octopuses phasing through glass)

Apple acknowledges these limitations and plans to focus on speed optimization, model compression, and incorporating more physically accurate training data moving forward. The company has already published code on GitHub with model weights coming soon to Hugging Face.

Key Points:

- Apple's STARFlow-V uses normalizing flow instead of diffusion models

- Achieves stable 30-second videos where competitors falter

- Processes frames directly rather than iteratively refining them

- Currently trails top models slightly in benchmark scores

- Available soon via Hugging Face with code already on GitHub