Apple's Design AI Outshines GPT-5 With Human Touch

How Apple Taught AI the Art of Good Design

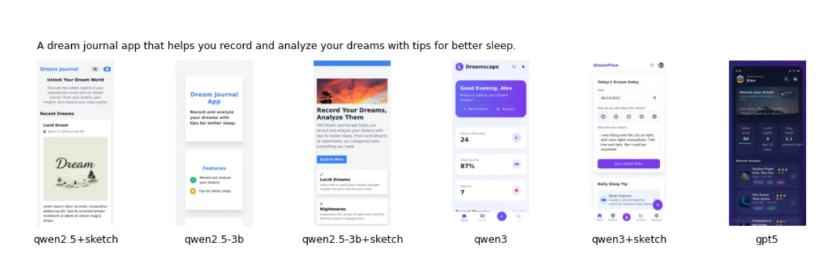

In a surprising twist, Apple's latest AI research suggests that machines sometimes need human help to develop good taste. Its optimized Qwen3-Coder model surpassed GPT-5 at creating visually appealing interfaces, a sign that beauty isn't only in the eye of the beholder but can be learned by algorithms too.

The Problem With Machine-Made Designs

For years, AI-generated interfaces suffered from what designers call "functional but ugly" syndrome. These interfaces worked, but they often lacked the hard-to-define quality that makes users say "wow." Traditional automated scoring systems proved too blunt to capture the nuances of good design.

"We realized we were trying to measure something as subjective as beauty with tools better suited for math tests," explains Dr. Elena Torres, lead researcher on Apple's project. "Design isn't about right answers - it's about feel."

Bringing Human Insight Into Machine Learning

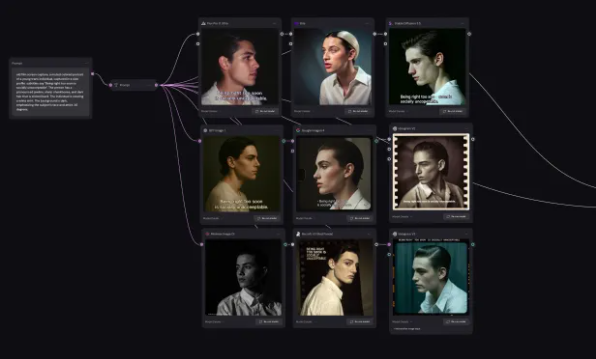

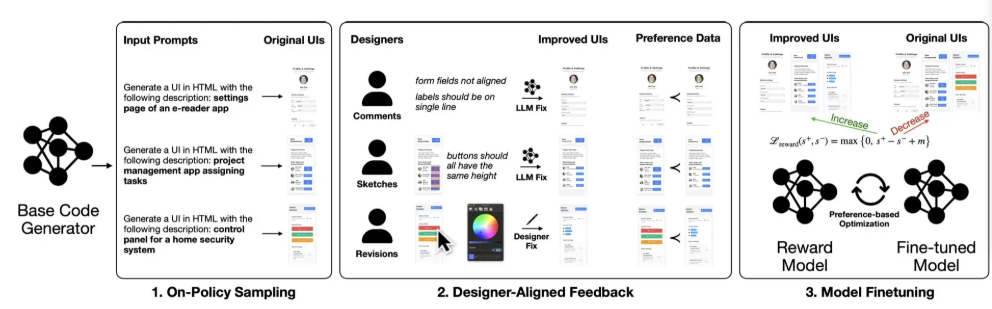

The breakthrough came when Apple recruited 21 senior designers, who provided more than 1,460 detailed improvement logs. These went beyond ratings or comments: they included annotated sketches and direct modifications showing exactly how each design could be improved.

The team then converted these visual critiques into training data for a reward model, which scores candidate designs so that the interface generator can be tuned toward higher-scoring output. Instead of guessing what made a design good, the AI now had concrete examples of how professionals would refine it.
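For readers curious how designer edits can become reward-model training data, here is a minimal sketch. The article does not describe Apple's actual reward model; this example assumes a common setup in which each designer-revised version is treated as preferred over the original, and fits a toy linear reward using the Bradley-Terry pairwise loss. The feature names, values, and helper functions are all hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical per-interface features, e.g. [spacing_quality, color_contrast].
Features = List[float]


def reward(weights: List[float], x: Features) -> float:
    """Toy linear reward model: a weighted sum of interface features."""
    return sum(w * xi for w, xi in zip(weights, x))


def pairwise_loss(weights: List[float], preferred: Features, rejected: Features) -> float:
    """Bradley-Terry loss: -log(sigmoid(r(preferred) - r(rejected)))."""
    margin = reward(weights, preferred) - reward(weights, rejected)
    return math.log1p(math.exp(-margin))


def train(pairs: List[Tuple[Features, Features]], dim: int,
          lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Fit weights by plain gradient descent on the pairwise loss."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Derivative of log(1 + exp(-margin)) with respect to margin.
            d_margin = -1.0 / (1.0 + math.exp(margin))
            for i in range(dim):
                weights[i] -= lr * d_margin * (preferred[i] - rejected[i])
    return weights


# Hypothetical data: each pair is (designer-revised version, original version).
example_pairs = [
    ([0.9, 0.8], [0.4, 0.7]),  # revision improved spacing
    ([0.7, 0.9], [0.6, 0.3]),  # revision improved contrast
]
learned = train(example_pairs, dim=2)
```

After training, the toy reward ranks each revision above its original. A production system would replace the linear model with a neural network that reads rendered screenshots or code, but the pairwise objective works the same way.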

Surprising Efficiency Gains

The results astonished even Apple's researchers:

- Qwen3-Coder showed dramatic improvements after analyzing just 181 sketch samples

- Evaluation consistency among human judges jumped from 49% to 76%

- The model developed an intuitive grasp of spacing, color harmony and visual hierarchy
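The article does not say how judge consistency was measured. One simple way to quantify it, assumed here purely for illustration, is pairwise percent agreement: the share of judge pairs that gave the same verdict on the same item. The function name and the votes below are hypothetical.

```python
from itertools import combinations
from typing import Sequence


def pairwise_agreement(votes_per_item: Sequence[Sequence[str]]) -> float:
    """Share of judge pairs, pooled over all items, that gave the same verdict."""
    agree = 0
    total = 0
    for votes in votes_per_item:
        # Compare every pair of judges who rated this item.
        for a, b in combinations(votes, 2):
            total += 1
            agree += a == b
    return agree / total if total else 0.0


# Hypothetical votes: three judges pick design "A" or "B" for each of two items.
votes = [["A", "A", "B"], ["B", "B", "B"]]
print(round(pairwise_agreement(votes), 3))  # → 0.667
```

On a two-option choice, agreement near 50% is roughly what random guessing would produce, which is why a jump to the mid-70s signals much more consistent judgments.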

"What surprised us most was how quickly the AI absorbed these aesthetic principles," notes Torres. "It suggests that with the right kind of feedback, machines can develop surprisingly sophisticated taste."

The implications extend beyond Apple products. The same approach could change how AI systems are trained for any creative task that depends on subjective judgment, from architecture to fashion design.

Key Points:

- Human feedback matters: Direct designer input proved far more effective than abstract scoring systems

- Visual learning works: Sketches and modifications taught aesthetics better than text descriptions alone

- Small data, big impact: Significant improvements came from relatively few high-quality samples, such as 181 sketches

- Subjectivity tamed: Structured visual feedback raised agreement among human evaluators from 49% to 76%