Ant Group Unveils Global AI Open Source Trends at Bund Conference

At the AI Open Source Insights Forum of the 2025 Inclusion·Bund Conference, Ant Group Open Source released the second edition of its "Global Large Model Open Source Ecosystem Overview and Trend Report." It builds on the first edition, published in May, with updated community developments and an expanded analysis of open source AI trends.

(Wang Xu, Vice Chairman of the Ant Group Open Source Technology Committee, presents the report)

Data-Driven Insights into AI Development

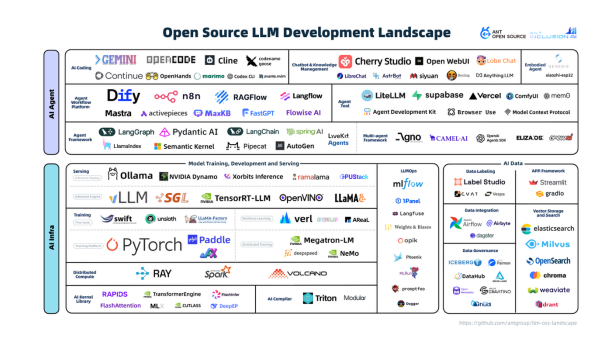

The report uses GitHub data, scored with the OpenRank algorithm, to evaluate 114 leading open source projects across 22 technical fields. These projects fall into two broad categories: AI Agent and AI Infra. Notably, 62% of them were created after the "GPT Moment" of October 2022, and their average age is just 30 months, which shows how quickly the field is evolving.

(Large Model Open Source Map 2.0)
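The report does not describe how OpenRank works internally. As a rough illustration only, assuming it behaves like a PageRank-style propagation over a graph that links developers to the repositories they are active in, a simplified version might look like this. The function name, the activity weights, and the damping factor are hypothetical; the published OpenRank algorithm uses its own graph construction and weighting, which this sketch does not reproduce.

```python
from collections import defaultdict


def pagerank_style_scores(edges, damping=0.85, iterations=50):
    """Rank nodes of a weighted developer-repository graph (simplified, NOT OpenRank itself).

    edges: iterable of (developer, repository, activity_weight) tuples.
    """
    # Build an undirected weighted graph linking developers and repositories.
    neighbors = defaultdict(dict)
    for dev, repo, weight in edges:
        neighbors[dev][repo] = neighbors[dev].get(repo, 0.0) + weight
        neighbors[repo][dev] = neighbors[repo].get(dev, 0.0) + weight

    nodes = list(neighbors)
    n = len(nodes)
    total_weight = {v: sum(neighbors[v].values()) for v in nodes}
    score = {v: 1.0 / n for v in nodes}  # start from a uniform distribution

    for _ in range(iterations):
        # Every node keeps a small "teleport" share; the rest flows along edges.
        new_score = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            for u, weight in neighbors[v].items():
                # Split v's score among its neighbours in proportion to activity weight.
                new_score[u] += damping * score[v] * weight / total_weight[v]
        score = new_score
    return score


# Hypothetical activity data: (developer, repository, activity weight).
activity = [
    ("dev:alice", "repo:agent-x", 5.0),
    ("dev:bob", "repo:agent-x", 2.0),
    ("dev:bob", "repo:infra-y", 1.0),
    ("dev:carol", "repo:infra-y", 1.0),
]
scores = pagerank_style_scores(activity)
for node, value in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{node}: {value:.3f}")
```

With this toy data, repo:agent-x ranks above repo:infra-y because it attracts more weighted developer activity, and the scores across all nodes still sum to 1.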

Diverging Paths: Open vs Closed Models

The study reveals striking geographical differences in development approaches:

- Chinese companies predominantly release open-weight models (72%)

- U.S. firms favor closed-source models (68%)

Wang Xu commented: "These projects function like digital building blocks. China's enthusiasm for sharing these components is energizing the global ecosystem."

The Rise of AI Coding Assistants

The most dramatic growth area identified is AI-powered coding tools, which have seen adoption rates triple since 2024. These tools fall into two categories:

- Command-line interfaces (e.g., Google's Gemini CLI)

- IDE plugins (e.g., Cline)

The Gemini CLI project gained more than 60,000 GitHub stars in just three months, unprecedented growth for an open source tool.

Tracking Model Development

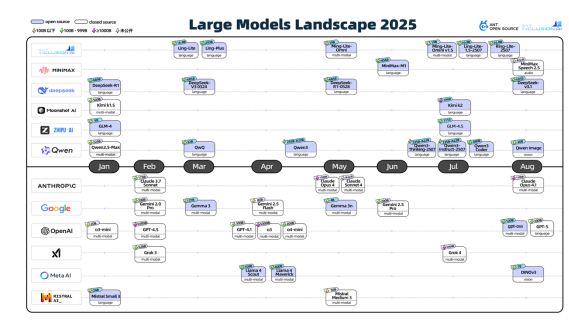

The accompanying timeline map charts major model releases since January 2025, documenting:

- Parameter counts

- Modality types

- Release strategies (open/closed)

(Large Model Development Timeline Map)

Key Points:

- The U.S. and China together account for over 40% of core developers globally

- The average new coding tool gains >30k stars in its first year

- Mixture-of-Experts (MoE) architectures enabling parameter scaling beyond previous limits

- Multimodal models now represent >60% of new releases

- Reinforcement learning significantly improving reasoning capabilities
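The report only states the MoE trend. One commonly cited reason MoE lets models grow is that each token is routed to a small subset of experts, so the total parameter count can rise with the number of experts while per-token compute tracks only the selected ones. A minimal sketch, assuming softmax-over-top-k gating (one common variant; real models differ in their routing details) and purely hypothetical expert counts and sizes:

```python
import math


def top_k_route(router_logits, k):
    """Select the k highest-scoring experts and softmax-normalise their logits."""
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in chosen)  # subtract max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    norm = sum(exps.values())
    return {i: e / norm for i, e in exps.items()}


def moe_param_counts(num_experts, k, params_per_expert):
    """Total expert parameters stored vs. expert parameters actually used per token."""
    total = num_experts * params_per_expert
    active = k * params_per_expert
    return total, active


# Hypothetical router scores for one token over 4 experts.
weights = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
print(weights)  # only experts 1 and 3 are used for this token

# Hypothetical layer: 64 experts of 100M parameters each, 2 active per token.
total, active = moe_param_counts(num_experts=64, k=2, params_per_expert=100_000_000)
print(total, active)
```

In this toy configuration the layer stores 32 times as many expert parameters as any single token uses (64 experts versus 2 active), which is the sense in which MoE decouples model size from per-token cost.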