Ant Group Releases Groundbreaking Trillion-Parameter AI Model

Ant Group Breaks New Ground With Open-Source AI Model

In a move that could reshape the AI landscape, Ant Group has released Ring-2.5-1T, the first publicly available trillion-parameter reasoning model built on a hybrid linear architecture. The model is designed for the complex, long-running tasks that increasingly automated workflows demand.

Performance That Turns Heads

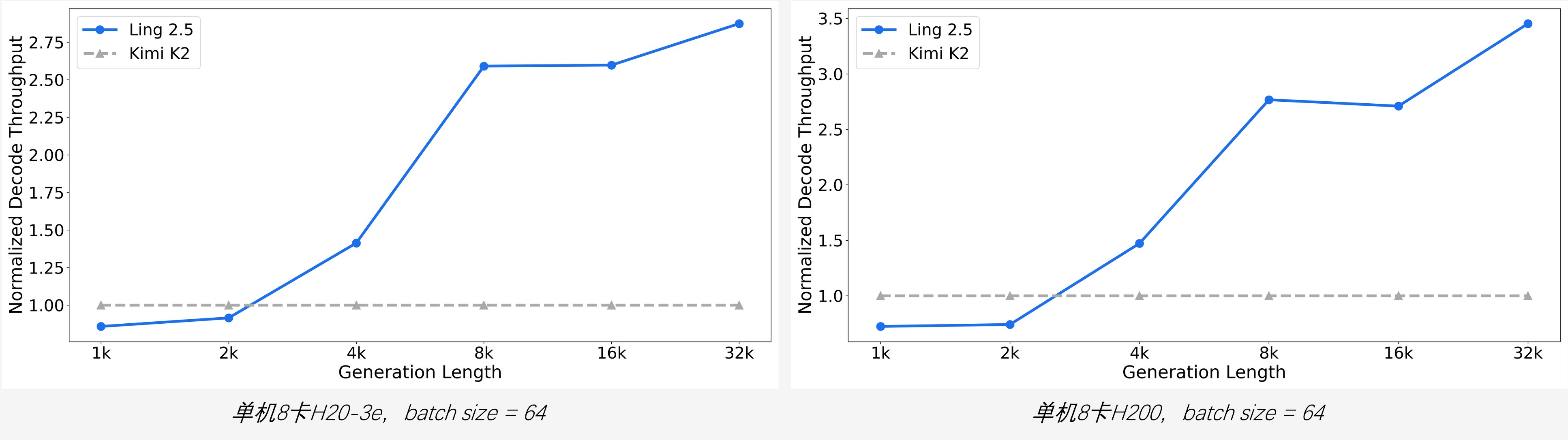

The numbers speak volumes: for generation lengths beyond 32K tokens, Ring-2.5-1T cuts memory access by more than 10x and triples throughput compared with its predecessor. But raw speed isn't its only trick; the model also shows remarkable depth in specialized domains.

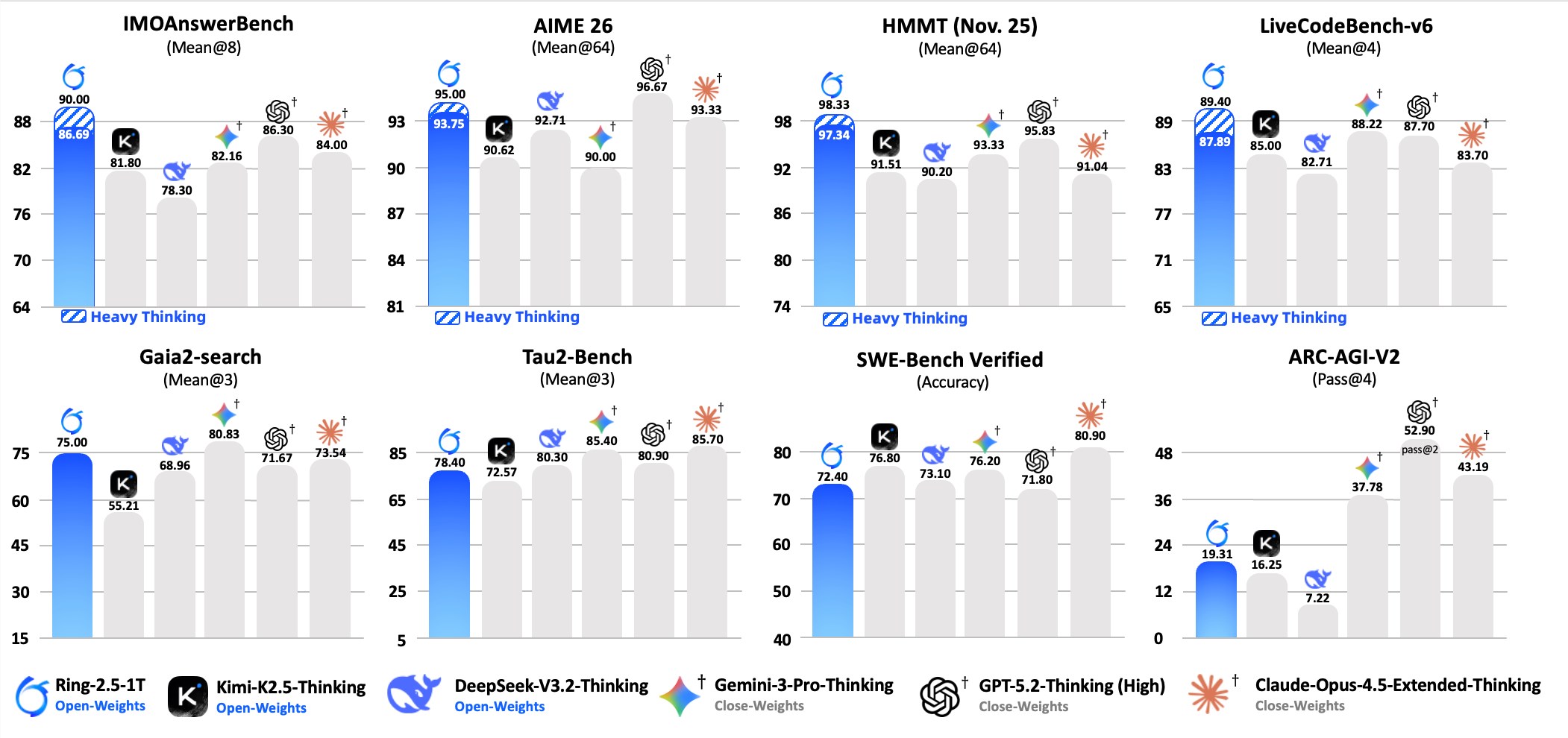

(Figure caption: Ring-2.5-1T reaches leading open-source performance on high-difficulty reasoning tasks such as mathematics, code, and logic, and on long-horizon task execution such as agentic search, software engineering, and tool calling.)

Mathematical Prowess Meets Practical Application

Imagine an AI that could ace prestigious math competitions: Ring-2.5-1T reaches gold-medal level, scoring 35 points on IMO 2025 and 105 on CMO 2025. Yet it is equally at home in everyday applications, integrating with popular agent frameworks such as Claude Code and the OpenClaw personal assistant.

The model shines brightest when tackling multi-step challenges requiring sophisticated planning and tool coordination. Developers will appreciate its ability to maintain efficiency even as task complexity grows.

Benchmark Dominance

In rigorous benchmark comparisons that included industry heavyweights such as GPT-5.2-thinking-high and Gemini-3.0-Pro-preview-thinking, Ring-2.5-1T delivered leading open-source results.

The model particularly excels in:

- Mathematical reasoning (IMOAnswerBench)

- Code generation (LiveCodeBench-v6)

- Logical problem-solving

- Extended agent task execution

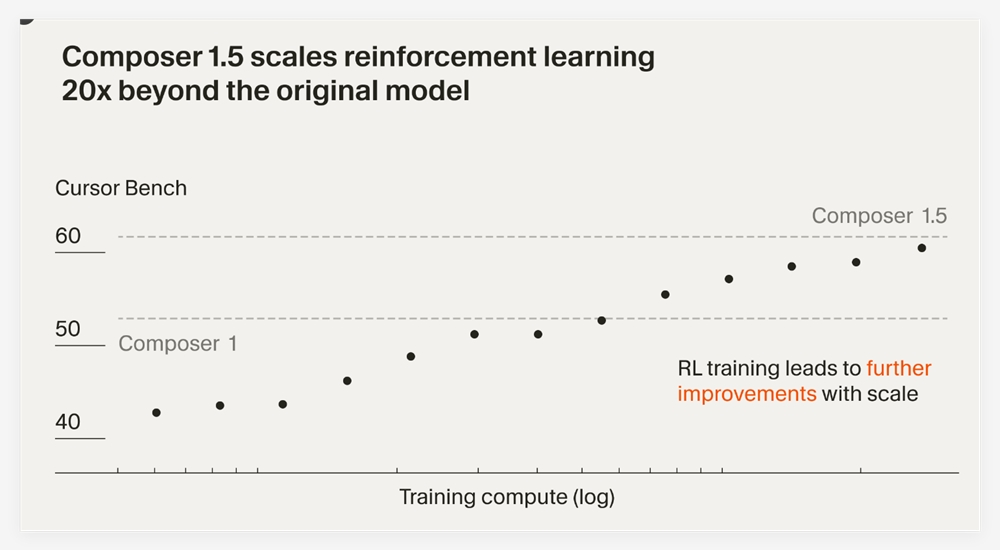

The secret sauce? Ant Group's Ling2.5 architecture optimizes the attention mechanism and scales activated parameters from 51B to 63B, yet improves efficiency rather than compromising it.

(Figure caption: Efficiency comparison across generation lengths. The longer the generation, the larger the throughput advantage.)
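
Why does a hybrid linear layout pay off most at long generation lengths? The sketch below is a back-of-the-envelope illustration, not Ant Group's implementation. It assumes a hypothetical stack in which one layer in eight keeps standard softmax attention, whose KV cache grows with every generated token, while the other seven use a simple (unnormalized) linear-attention recurrence whose state has a fixed size. The names `LayerSpec`, `linear_attention_step`, and `decode_state_elements`, the 1:7 layer mix, and the head sizes are all illustrative assumptions rather than Ling2.5's real settings, and the count covers only attention state, not model weights.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerSpec:
    """Hypothetical attention-layer description, used only for this estimate."""
    kind: str  # "softmax" (growing KV cache) or "linear" (fixed-size recurrent state)
    num_heads: int
    head_dim: int


def linear_attention_step(state, q, k, v):
    """One decoding step of unnormalized linear attention for a single head.

    `state` is a d_k x d_v matrix (list of lists) summarizing all past tokens.
    Update: S_t = S_{t-1} + k_t v_t^T; output: o_t = q_t^T S_t.
    The state is updated in place and its size never changes.
    """
    d_k, d_v = len(k), len(v)
    for i in range(d_k):
        for j in range(d_v):
            state[i][j] += k[i] * v[j]
    return [sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)]


def decode_state_elements(layers, context_len):
    """Attention-state elements read per new token, given `context_len` cached tokens."""
    total = 0
    for layer in layers:
        if layer.kind == "softmax":
            # Keys and values are cached for every previous token.
            total += 2 * context_len * layer.num_heads * layer.head_dim
        elif layer.kind == "linear":
            # One head_dim x head_dim state matrix per head, independent of context_len.
            total += layer.num_heads * layer.head_dim * layer.head_dim
        else:
            raise ValueError(f"unknown layer kind: {layer.kind!r}")
    return total


if __name__ == "__main__":
    heads, head_dim, n_layers = 8, 128, 8  # hypothetical sizes
    softmax = LayerSpec("softmax", heads, head_dim)
    linear = LayerSpec("linear", heads, head_dim)
    full = [softmax] * n_layers                     # every layer uses softmax attention
    hybrid = [softmax] + [linear] * (n_layers - 1)  # assumed 1:7 softmax-to-linear mix
    for ctx in (4_096, 32_768, 131_072):
        ratio = decode_state_elements(full, ctx) / decode_state_elements(hybrid, ctx)
        print(f"context={ctx:>7}: full/hybrid state reads = {ratio:.2f}x")
```

In this toy setup the full-softmax to hybrid ratio rises with context length and approaches 8, the ratio of total layers to softmax layers, because the fixed linear-attention state becomes negligible next to the growing KV cache. That matches the trend in the efficiency figure, though it does not reproduce the 10x figure reported for Ring-2.5-1T, which depends on details not covered here.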

Solving Real-World Challenges

The timing couldn't be better as AI applications evolve beyond simple conversations into domains requiring deep document analysis, cross-file code comprehension, and complex project planning.

The computational demands of these advanced use cases have traditionally created bottlenecks, and this is exactly where Ring-2.5-1T makes its mark, sharply reducing processing costs and latency in long-output scenarios.

The implications extend far beyond technical specs:

"This release showcases Ant Bailing team's mastery of large-scale training infrastructure," observes one industry analyst. "They're giving developers powerful new tools for building next-generation AI applications."

Developers can already access Ring-2.5-1T's model weights and inference code through Hugging Face and ModelScope platforms, with chat interfaces and API services coming soon.

Key Points:

- Historic Release: First open-source trillion-parameter model with a hybrid linear architecture

- Performance Leap: More than 10x less memory access and 3x the throughput of the previous generation for generations beyond 32K tokens

- Academic Strength: Achieves competition-level math problem-solving capabilities

- Practical Power: Excels at real-world agent frameworks and multi-step tasks

- Industry Impact: Addresses growing computational demands of advanced AI applications