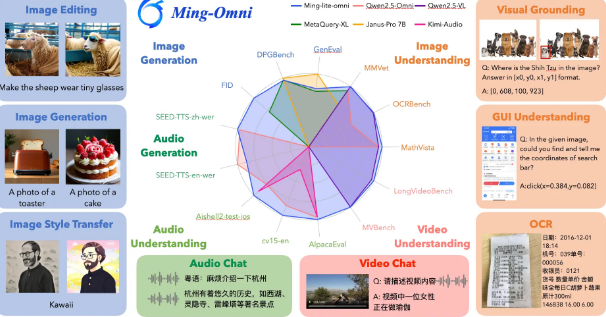

Ant Group Open-Sources Ming-lite-omni, Rivaling GPT-4o

Ant Group's Bai Ling team has fully open-sourced its Ming-lite-omni multimodal large model. Announced during Ant Technology Day, the release makes an advanced multimodal model freely available worldwide and positions it as an open alternative to proprietary systems such as OpenAI's GPT-4o.

Technical Specifications Break New Ground

The model builds on Ant's Ling-lite foundation and uses a Mixture of Experts (MoE) architecture. With 22 billion total parameters, of which 3 billion are active, Ming-lite-omni is pitched as a new benchmark for open-source multimodal models. Developers now have access to the complete model weights and inference code, with training materials scheduled for a future release.
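To make the "22 billion total, 3 billion active" figure concrete, the following is a minimal sketch of top-k expert routing, the general idea behind MoE layers. It is not Ming-lite-omni's actual implementation: the expert count, the value of k, the toy experts, and the function names (`active_fraction`, `top_k_route`, `moe_forward`) are all assumptions chosen for illustration. Only the two parameter counts come from the article.

```python
import math

# Figures reported in the article.
TOTAL_PARAMS = 22e9
ACTIVE_PARAMS = 3e9


def active_fraction(total, active):
    """Share of the model's parameters used for a single input."""
    return active / total


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalise their scores.

    Returns a list of (expert_index, weight) pairs. Ties keep the
    lower index first because Python's sort is stable.
    """
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    norm = sum(exps)
    return [(i, e / norm) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and mix their outputs by weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

if __name__ == "__main__":
    print(f"{active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")  # 13.6%
    # Experts 1 and 3 tie for the top score, so each gets weight 0.5.
    print(moe_forward(1.0, experts, [0.1, 2.0, -1.0, 2.0], k=2))  # 3.0
```

In this toy setup only two of the four experts run per input, which is the mechanism that lets an MoE model carry many more parameters than it uses on any single forward pass.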

Consistent Open-Source Commitment

This release continues Ant Group's pattern of technological transparency:

- The Ling-lite and Ling-plus language models were open-sourced earlier this year

- The Ming-lite-uni multimodal model was also made publicly available

- Ling-lite-1.5, released in May, demonstrated SOTA-level capabilities

The team has particularly emphasized its success in training high-performance models on non-premium computing platforms, which it presents as evidence that cutting-edge AI development does not require exclusive access to top-tier hardware.

Performance That Challenges Industry Leaders

Independent evaluations place Ming-lite-omni's capabilities on par with leading 10B-scale multimodal models. The Bai Ling team says this is the first open-source model to match GPT-4o's modality support, which could change how developers approach multimodal AI projects worldwide.

"Our MoE architecture implementation," explains Bai Ling leader Xiting, "combined with strategic use of domestic GPU resources, demonstrates we can achieve GPT-4o level performance without relying on specialized infrastructure."

The implications are significant for global AI development. By removing proprietary barriers while maintaining competitive performance, Ant Group may have altered the landscape for accessible advanced AI systems.

Key Points

- Ming-lite-omni has 22B total parameters, 3B of them active, via an MoE architecture

- Complete weights and inference code now available to developers

- Represents Ant Group's latest step in ongoing open-source strategy

- Performance reported on par with leading 10B-scale multimodal models, with modality support matching GPT-4o

- Demonstrates viability of non-premium computing platforms for advanced AI