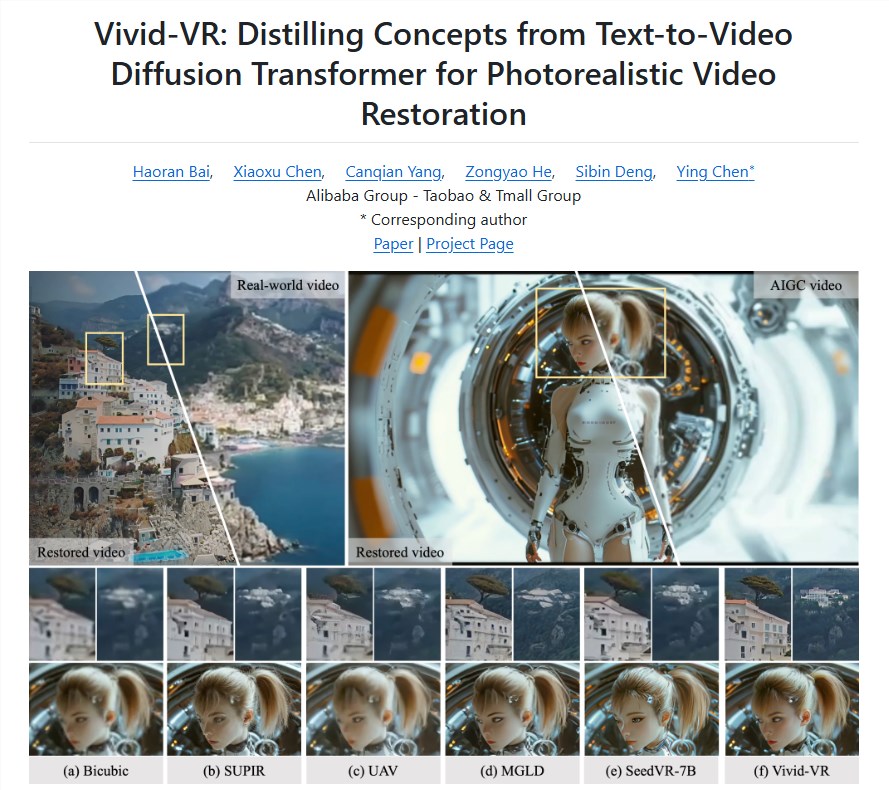

Alibaba's Vivid-VR: AI Video Restoration Goes Open-Source

Alibaba Cloud Open-Sources Cutting-Edge Video Restoration Tool

Alibaba Cloud has released its Vivid-VR generative video restoration tool as open-source software. The tool combines a text-to-video (T2V) foundation model with ControlNet to keep restored frames consistent from one to the next.

Technical Innovation Behind Vivid-VR

The system's architecture combines three components:

- T2V Foundation Model: Serves as the generative backbone that produces the restored video

- ControlNet Integration: Maintains temporal consistency across frames, suppressing common artifacts such as flickering and shaking

- Dynamic Semantic Adjustment: Enhances texture realism during the generation process
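To make "temporal consistency" concrete, here is a minimal sketch of one way a reader might quantify flicker in a clip: the average per-pixel change between consecutive frames. This is an illustrative proxy only, not the metric or method used by Vivid-VR; the function names `mean_abs_diff` and `flicker_score` are hypothetical, and frames are assumed to be small grayscale grids of floats.

```python
from typing import Sequence

# Assumption: a frame is a grayscale grid, given as rows of pixel intensities.
Frame = Sequence[Sequence[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    if count == 0:
        raise ValueError("frames must contain at least one pixel")
    return total / count


def flicker_score(frames: Sequence[Frame]) -> float:
    """Average frame-to-frame change; lower values suggest a steadier clip."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    diffs = [
        mean_abs_diff(frames[i], frames[i + 1])
        for i in range(len(frames) - 1)
    ]
    return sum(diffs) / len(diffs)


# Toy 2x2 clips: one steady, one whose brightness alternates every frame.
steady = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(4)]
flickering = [[[0.5 + 0.2 * (i % 2)] * 2 for _ in range(2)] for i in range(4)]

print(flicker_score(steady) < flicker_score(flickering))
```

A score like this also rises with genuine motion, so it is only meaningful when comparing a restored clip against the same scene, for example before and after restoration.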

"What sets Vivid-VR apart is its ability to maintain visual stability while dramatically improving restoration efficiency," explains the development team. Early tests show the tool can process both traditionally captured footage and AI-generated content with equal effectiveness.

Broad Industry Applications

The tool demonstrates particular value for:

- Content creators repairing low-quality source material

- Post-production teams optimizing AI-generated videos

- Archival projects restoring historical footage

- Social media platforms enhancing user-generated content

Vivid-VR supports multiple input formats and lets users adjust parameters for specific use cases, which makes it adaptable to both creative and technical workflows.

Open-Source Accessibility

Alibaba Cloud has released Vivid-VR through multiple platforms:

- GitHub (primary code repository)

- Hugging Face (model sharing)

- ModelScope (Alibaba's model hub)

The release follows the company's Wan2.1 series, which garnered over 2.2 million downloads. Open-sourcing the tool lowers the barrier to entry for developers worldwide.

Industry Impact Analysis

The 2025 digital landscape increasingly relies on video content, yet quality issues persist:

- 78% of creators report struggling with inconsistent footage quality (AIbase 2025 survey)

- AIGC platforms see 42% user attrition due to generation artifacts

Vivid-VR addresses these pain points while potentially creating new revenue streams in:

- Automated video enhancement services

- Legacy media restoration businesses

- Real-time processing applications

The tool's release coincides with growing demand for AI-assisted creative tools, projected to become a $27 billion market by 2027 (Gartner).

Key Points:

- Frame Consistency: Advanced ControlNet integration eliminates flickering and shaking artifacts

- Dual Compatibility: Processes both traditional video and AIGC content effectively

- Open Ecosystem: Full access to models and code via major developer platforms

- Customization Options: Adjustable parameters for specialized use cases

- Industry Transformation: Potential to redefine video quality standards across multiple sectors