Alibaba's New AI Understands Your Tone - And Maybe Your Mood

Alibaba Releases Emotionally Aware Voice AI

In a move that could reshape how we interact with machines, Alibaba's Tongyi Lab has open-sourced Fun-Audio-Chat-8B, an 8-billion-parameter voice AI model that doesn't just hear your words but also picks up on your mood.

Human-Like Conversations Without the Lag

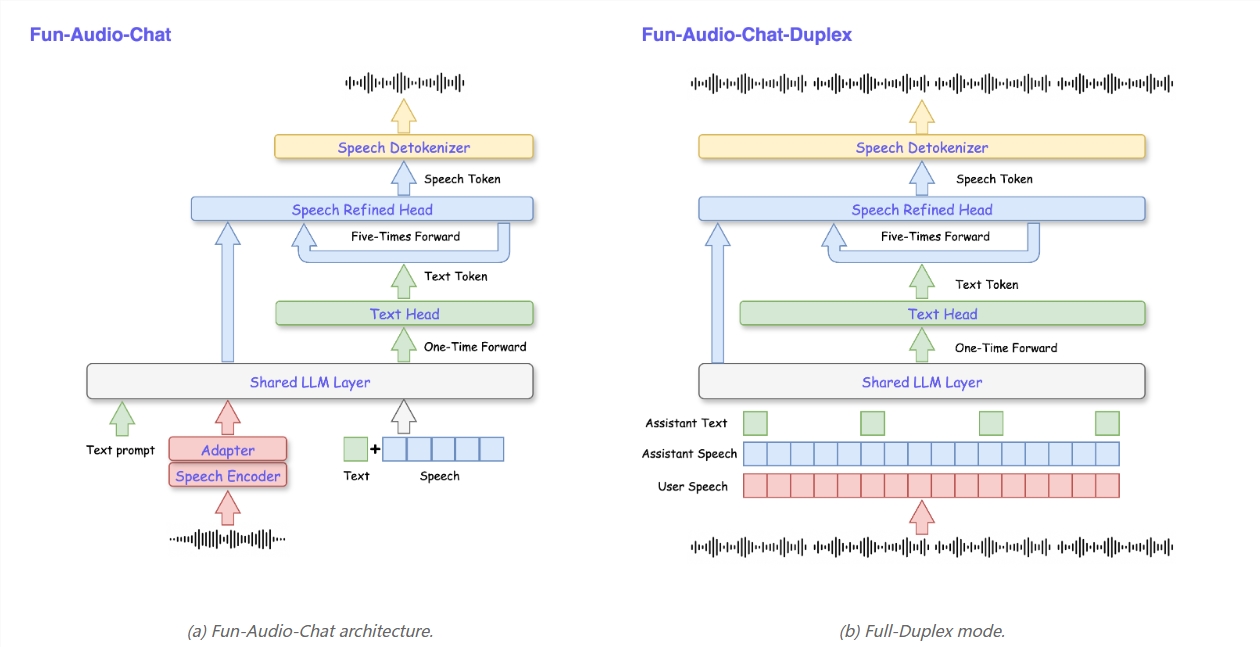

The model is designed to cut the robotic delays common in voice assistants. Traditional systems pass audio through a chain of separate stages (speech recognition → language processing → speech synthesis), and each hand-off adds to the pause before the assistant replies. Alibaba's model instead takes speech in and produces speech out within a single end-to-end system.

"It's like talking to someone who actually listens," explains Dr. Li Wei, an NLP researcher at Tsinghua University. "The responses come so naturally you forget it's artificial."

Reading Between the Vocal Lines

What sets this model apart is emotional perception. Most assistants analyze only the words being spoken; Fun-Audio-Chat also listens to how they are said, detecting:

- Tone shifts indicating frustration or excitement

- Speech patterns revealing fatigue or hesitation

- Pauses and emphasis that convey unspoken meaning

The system then adjusts its responses accordingly, offering cheerful replies to happy users or a more measured tone during tense exchanges.

Practical Magic

The technology isn't just emotionally smart; it's resource-efficient too:

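- Uses a dual-speed architecture: the main backbone processes audio at 5 Hz (five frames per second), while a separate stage fills in fine acoustic detail at 25 Hz

- Cuts GPU usage by nearly 50%, according to Alibaba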

- Supports real-time translation and role-playing scenarios
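To make the 5 Hz / 25 Hz split concrete, the short Python sketch below counts how many positions each stage handles for a clip of speech and shows one simple way the two rates can line up: bundling every five consecutive 25 Hz frames into a single 5 Hz backbone step. This is an illustrative assumption, not Alibaba's code; the names `frame_counts`, `group_frames`, and `GROUP` are hypothetical.

```python
# Illustrative sketch only (not Alibaba's implementation): how a 5 Hz backbone
# can line up with a 25 Hz detail stage, ASSUMING 25 Hz frames are grouped
# five at a time into one backbone step.

BACKBONE_HZ = 5   # coarse rate the large backbone works at (from the article)
DETAIL_HZ = 25    # fine rate used for acoustic detail (from the article)
GROUP = DETAIL_HZ // BACKBONE_HZ  # hypothetical: 25 Hz frames per backbone step (5)


def frame_counts(seconds: float) -> tuple[int, int]:
    """Return (backbone steps, detail frames) for a clip of the given length."""
    return int(seconds * BACKBONE_HZ), int(seconds * DETAIL_HZ)


def group_frames(frames: list[int], group: int = GROUP) -> list[list[int]]:
    """Bundle consecutive fine-rate frames into coarse backbone steps.

    Leftover frames that do not fill a whole group become a final,
    shorter group, so no audio is dropped.
    """
    return [frames[i:i + group] for i in range(0, len(frames), group)]


if __name__ == "__main__":
    coarse, fine = frame_counts(10.0)
    print(f"10 s of speech: {coarse} backbone steps vs {fine} detail frames")
    groups = group_frames(list(range(fine)))
    print(f"{len(groups)} groups of {GROUP} frames each")
```

For a 10-second clip, the backbone handles 50 positions instead of 250. Because the compute cost of transformer models grows with sequence length, running the large backbone on a sequence one-fifth as long is one plausible source of the reported GPU savings, though the actual figure depends on the whole system.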

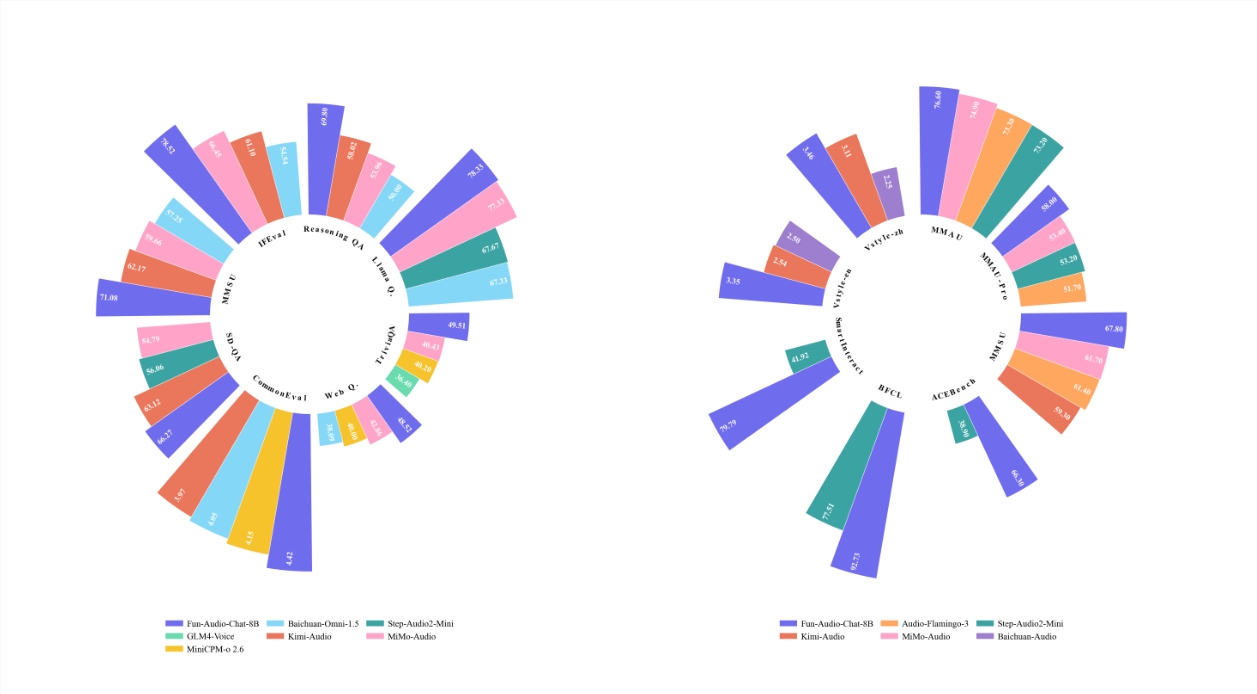

In Alibaba's reported results, it outperforms similar-sized models on benchmarks such as OpenAudioBench and rivals proprietary systems from OpenAI and Google.

Key Points:

- Available now: Complete model weights and code on GitHub and Hugging Face

- Potential uses: Customer service, therapy bots, smart home controls

- Language support: Currently optimized primarily for Mandarin, with English support as well

- Privacy note: Because the weights are open, all processing can run locally unless a developer adds cloud integration

The open-source release lowers barriers for developers worldwide to experiment with emotionally intelligent interfaces. As Dr. Li observes: "We're not just teaching machines to talk - we're helping them understand how humans really communicate."