Alibaba Cloud's Qwen3-Max AI Model Breaks New Ground in Code and Reasoning

Alibaba Cloud's Qwen3-Max AI Model Sets New Performance Benchmarks

The Qwen team at Alibaba Cloud has launched Qwen3-Max, its most advanced artificial intelligence model to date. The ultra-large-scale model posts strong results across multiple benchmarks, particularly in programming and agent-based tasks.

Technical Specifications and Performance

The new model has 1 trillion total parameters and was pre-trained on 36 trillion tokens. Its architecture and training pipeline have several notable features:

- Uses a Mixture of Experts (MoE) model structure

- Implements the proprietary PAI-FlashMoE multi-level pipeline parallel strategy, improving training efficiency by 30%

- Features the ChunkFlow strategy for long sequence training, tripling throughput

- Supports context lengths up to 1 million tokens
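
To make the Mixture of Experts idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic illustration, not Qwen3-Max's actual router: the toy experts, the gate scores, and the helper names `route_top_k` and `moe_forward` are all hypothetical. The point is that only a few experts run per input, which is how an MoE model can hold many parameters while activating only a fraction of them per token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw gate scores into probabilities (shifted for numerical stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float, gate_scores: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Hypothetical toy experts; in a real model each would be a feed-forward network.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
# Hypothetical gate scores; a real router computes these from the token itself.
gate_scores = [0.1, 2.0, -1.0, 2.0]

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, gate_scores, experts, k=2)
```

In this toy setup, experts 1 and 3 tie for the highest gate score, so each receives weight 0.5 and the other two experts are never evaluated.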

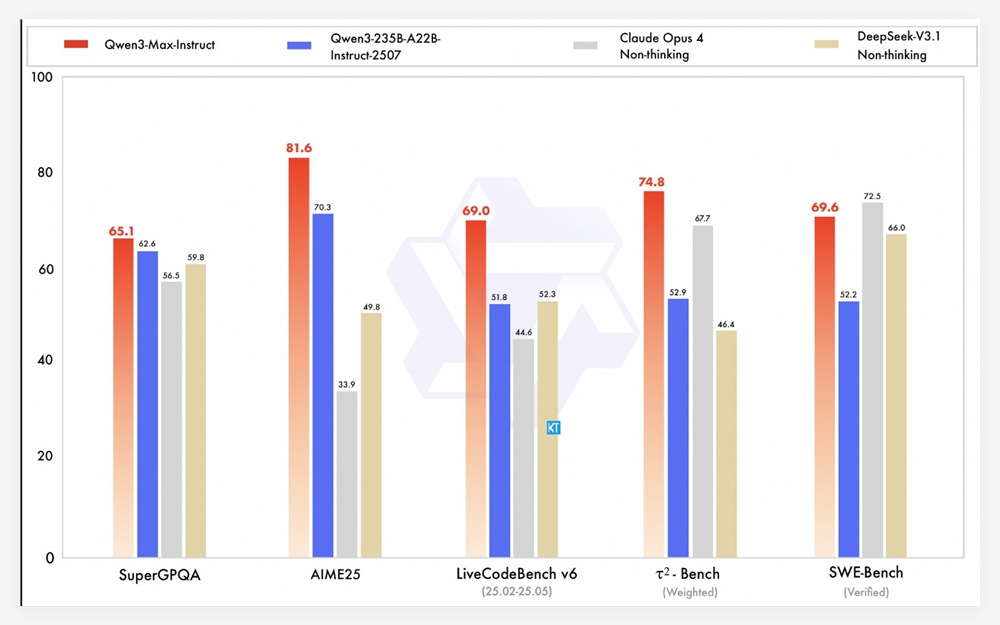

In benchmark testing, Qwen3-Max achieved remarkable results:

- Scored 69.6 on SWE-Bench Verified, demonstrating superior programming capabilities

- Achieved 74.8 on Tau2-Bench, outperforming Claude Opus 4 and DeepSeek-V3.1 in agent tool calling

- Currently ranks third on the LMArena text leaderboard, surpassing GPT-5-Chat

Specialized Versions and Applications

The team also announced a reasoning-enhanced variant, Qwen3-Max-Thinking, which:

- Integrates a code interpreter

- Uses parallel test-time compute

- Achieves perfect scores on challenging mathematical reasoning tests (AIME 25 and HMMT)
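
The article does not detail how the Thinking variant uses parallelism. One common approach in reasoning models, assumed here purely for illustration, is to sample several candidate answers concurrently and keep the most frequent one. The sketch below shows that pattern; `fake_sample` is a hypothetical stand-in for a model call, and nothing here is confirmed as the Qwen team's method.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the answer that appears most often among the samples."""
    return Counter(answers).most_common(1)[0][0]


def solve_in_parallel(sample: Callable[[int], str], n_samples: int) -> str:
    """Draw n_samples candidate answers concurrently, then vote."""
    with ThreadPoolExecutor(max_workers=n_samples) as pool:
        answers = list(pool.map(sample, range(n_samples)))
    return majority_vote(answers)


def fake_sample(seed: int) -> str:
    """Hypothetical stand-in for one model call; some seeds give a wrong answer."""
    return "42" if seed % 3 else "41"


final_answer = solve_in_parallel(fake_sample, n_samples=6)
```

With six samples, four of the stand-in calls agree on the same answer, so the vote discards the two outliers.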

The standard version, Qwen3-Max-Instruct, is now available through Alibaba Cloud's API, giving developers immediate access to the model.

Availability and Future Prospects

Developers can begin integrating Qwen3-Max into their projects by:

- Registering for an Alibaba Cloud account

- Obtaining an API key

- Accessing the model through simple API calls
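
As a rough sketch of what such a call might look like, the snippet below builds a chat request using only the Python standard library. It assumes an OpenAI-compatible chat endpoint; the base URL, the model ID `qwen3-max`, and the `DASHSCOPE_API_KEY` environment variable are assumptions not stated in this article and should be checked against Alibaba Cloud's current documentation. The helper names `build_chat_request` and `ask` are hypothetical.

```python
import json
import os
import urllib.request

# Assumed values: confirm both against the current Alibaba Cloud documentation.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL_ID = "qwen3-max"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat completion request."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the reply text (assumes an OpenAI-style response)."""
    api_key = os.environ["DASHSCOPE_API_KEY"]  # assumed variable name
    with urllib.request.urlopen(build_chat_request(prompt, api_key), timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the API key exported in the environment, calling `ask("Reverse a string in Python")` would send one request and return the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect without network access.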

The Qwen team expressed optimism about the model's potential impact across industries, from software development to complex problem-solving domains.

Key Points:

- 🚀 Qwen3-Max features 1 trillion parameters trained on 36 trillion tokens

- 💻 Scores 69.6 on SWE-Bench Verified (programming) and 74.8 on Tau2-Bench (agent tool calling)

- ⚡ PAI-FlashMoE pipeline parallel strategy improves training efficiency by 30%

- 🔍 Supports context windows up to 1 million tokens

- 🔌 Now available via Alibaba Cloud API for developer integration