AI Image Tools Misused to Create Nonconsensual Deepfakes


Major tech companies face growing scrutiny as their AI image generation tools are being weaponized to create nonconsensual deepfake images of women. What began as creative technology has become a disturbing tool for digital exploitation.

How Safeguards Are Being Circumvented

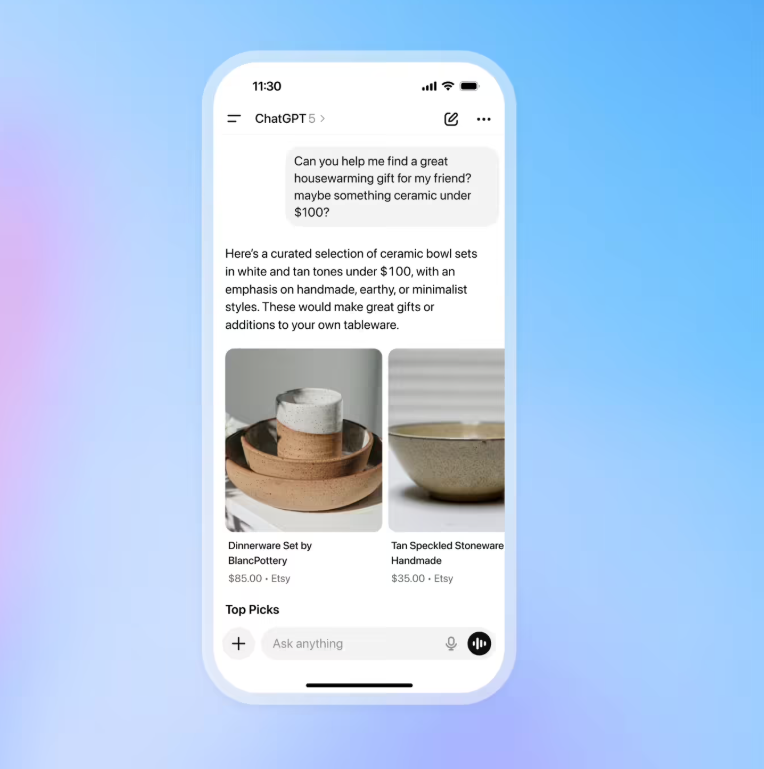

Google's Gemini and OpenAI's ChatGPT, both designed for legitimate creative uses, have been used to generate fake explicit content. Tech-savvy users have discovered that carefully crafted prompts can slip past the systems' content filters.

On platforms like Reddit, underground communities flourished where members shared techniques for "undressing" women in photos. In one notorious example, an image of a woman wearing traditional Indian attire was altered to show her in swimwear. Reddit eventually banned the 200,000-member forum, but the damage was done: countless manipulated images continue to circulate online.

Tech Companies Respond

Both Google and OpenAI acknowledge the problem but face an uphill battle:

- Google maintains strict policies against explicit content generation and says it's constantly improving detection systems

- OpenAI, while relaxing some restrictions on non-sexual adult imagery this year, draws the line at unauthorized likeness alterations

The companies emphasize they're taking action against violating accounts, but critics argue reactive measures aren't enough.

The Growing Threat of Hyper-Realistic Fakes

The risk grows as image models become more realistic:

- Google's new Nano Banana Pro demonstrates frightening realism

- OpenAI's latest image model produces nearly indistinguishable fakes

Legal experts warn these improvements dangerously lower the barrier for creating convincing misinformation.

The core challenge remains: how can tech giants balance innovation with ethical responsibility? As AI capabilities grow more sophisticated, so too must protections against misuse.

Key Points:

- Gaps in the safeguards of current AI image generators allow nonconsensual alterations of real people's photos

- Underground communities actively share techniques for bypassing safeguards

- Platform responses remain largely reactive rather than preventative

- Hyper-realistic fakes pose increasing threats as technology improves

- Tech companies face growing pressure to balance innovation with ethical responsibility