Yuan3.0Flash: A Game-Changing Open-Source AI Model

A New Contender in AI Emerges

The artificial intelligence landscape just got more interesting with the release of Yuan3.0Flash by YuanLab.ai. This open-source multimodal foundation model stands out less for its size than for how efficiently it uses it, pairing a sparse architecture with a training method designed to curb wasted reasoning.

Smarter Design, Better Performance

At its core, Yuan3.0Flash has 40 billion parameters in total, but it does not use all of them for every token. It relies on a sparse mixture-of-experts (MoE) architecture that activates only about 3.7 billion parameters, roughly 9% of the total, during inference. Think of it as a team of specialists in which only the relevant experts respond to each input. This cuts the compute needed per token compared with a dense model of the same total size, while keeping the capacity of the full parameter count available.
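To make the routing idea concrete, here is a minimal top-k gating sketch. It is a toy, not Yuan3.0Flash's actual router: the number of experts, the choice of k=2, the dot-product router and the helper names (`moe_layer`, `make_expert`) are illustrative assumptions. It shows that every expert is scored for each token, but only the k highest-scoring experts are actually evaluated.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, seed):
    """A toy expert: a fixed random dim x dim linear map (real experts are feed-forward blocks)."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    def expert(x):
        return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return expert

def moe_layer(x, router_weights, experts, k=2):
    """Send one token vector x to its top-k experts and mix their outputs by gate weight."""
    # Router score for each expert: dot product of the token with that expert's routing vector.
    scores = [sum(r * xi for r, xi in zip(rw, x)) for rw in router_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gates are renormalized over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # only the k selected experts do any work
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top

# Usage: 8 toy experts, 2 active per token.
dim, n_experts = 4, 8
rng = random.Random(0)
experts = [make_expert(dim, seed=i) for i in range(n_experts)]
router = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n_experts)]
token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_layer(token, router, experts, k=2)
print("experts used:", chosen)

# Active-parameter share using the article's figures.
print(f"active share: {3.7e9 / 40e9:.2%}")  # 9.25%
```

Because only k experts run for each token, per-token compute tracks the active parameters rather than the total; with the article's figures, 3.7 billion of 40 billion is about 9%.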

The model introduces RAPO, a reinforcement learning training method with a clever twist: a reflection-inhibiting reward mechanism (RIRM) that cuts down on unproductive "thinking" cycles. It's like teaching the AI to get to the point faster without unnecessary detours.
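The source does not spell out how RIRM scores a response. As a purely hypothetical illustration of the general idea, the sketch below shapes a reinforcement learning reward by subtracting a capped penalty for each reflection phrase beyond a small allowance. The marker phrases and the `free_reflections`, `penalty` and `max_penalty` values are invented for this example and should not be read as RAPO's actual formula.

```python
import re

# Hypothetical phrases treated as signs of self-reflection.
# The real RIRM criteria are not given in the source; these are for illustration only.
REFLECTION_MARKERS = ("wait", "let me double-check", "on second thought", "let me re-check")

def count_reflections(text: str) -> int:
    """Count whole-phrase occurrences of the reflection markers (case-insensitive)."""
    lowered = text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(m) + r"\b", lowered))
        for m in REFLECTION_MARKERS
    )

def shaped_reward(response: str, is_correct: bool,
                  free_reflections: int = 1, penalty: float = 0.1,
                  max_penalty: float = 0.5) -> float:
    """Task reward (1 if correct, else 0) minus a capped penalty for excess reflection."""
    base = 1.0 if is_correct else 0.0
    excess = max(0, count_reflections(response) - free_reflections)
    return base - min(max_penalty, penalty * excess)

direct = "The answer is 42."
loopy = "Wait, the answer is 42. Wait, let me double-check. On second thought, wait, it is 42."
print(shaped_reward(direct, True))  # 1.0
print(shaped_reward(loopy, True))   # about 0.6: 5 reflections, 4 over the allowance
```

In this sketch both responses are correct, but the one that keeps second-guessing itself earns less, which is the kind of pressure toward shorter reasoning the article attributes to RIRM.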

Built for Real-World Challenges

Yuan3.0Flash is built to perform beyond benchmark tests:

- Visual Understanding: Its visual encoder converts images into tokens that the model processes alongside text tokens

- Language Processing: Enhanced attention structures keep focus sharp while conserving resources

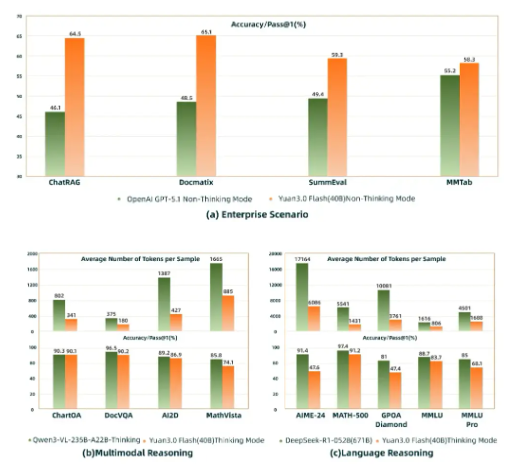

- Enterprise Ready: Already outperforming GPT-5.1 in practical business applications
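The article does not describe the visual encoder's internals. One common way to turn an image into tokens, used by ViT-style encoders, is to cut the image into fixed-size patches, flatten each patch, project it to the model's embedding width, and place the resulting vectors in the same sequence as the text embeddings. The sketch below assumes that approach for a grayscale image; the patch size, embedding width and function names are hypothetical.

```python
import random

def patchify(image, patch):
    """Split an H x W grayscale image (list of rows) into flattened patch x patch blocks, row-major."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[top + r][left + c] for r in range(patch) for c in range(patch)])
    return patches

def project(vectors, weight):
    """Linear projection: each length-n vector times an n x d weight matrix gives a length-d embedding."""
    d = len(weight[0])
    return [[sum(v[i] * weight[i][j] for i in range(len(v))) for j in range(d)] for v in vectors]

def build_multimodal_sequence(image, text_embeddings, patch, weight):
    """Image tokens first, then text tokens, all with the same embedding width."""
    image_tokens = project(patchify(image, patch), weight)
    return image_tokens + text_embeddings

# Usage: an 8 x 8 image with 4 x 4 patches gives 4 image tokens; 3 text tokens follow.
rng = random.Random(0)
image = [[rng.random() for _ in range(8)] for _ in range(8)]
weight = [[rng.uniform(-0.1, 0.1) for _ in range(6)] for _ in range(16)]  # 16 pixels -> width 6
text = [[0.0] * 6 for _ in range(3)]  # placeholder text embeddings
seq = build_multimodal_sequence(image, text, patch=4, weight=weight)
print(len(seq), len(seq[0]))  # 7 6
```

Once both modalities share one embedding width, the language model can attend over image and text tokens in a single sequence.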

According to the team's reported results, the model matches or exceeds the accuracy of much larger models while using only one-quarter to one-half of their computational resources.

What This Means for Developers and Businesses

The team isn't stopping here: it has announced Pro and Ultra versions that will scale up to 1 trillion parameters. Today's release already offers:

- Complete transparency with detailed technical documentation

- Multiple weight versions (16-bit and 4-bit)

- Full support for customization and secondary development
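As a rough guide to what the two weight versions mean in practice, storage for the weights alone is the parameter count times bits per weight, divided by 8. The sketch below applies that to the article's 40 billion total parameters; it ignores quantization metadata (such as scale factors), activations and the KV cache, so real requirements will be somewhat higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Weight storage in decimal GB (1 GB = 1e9 bytes); excludes quantization scales, activations and KV cache."""
    return num_params * bits_per_weight / 8 / 1e9

TOTAL_PARAMS = 40e9    # total parameters, per the article
ACTIVE_PARAMS = 3.7e9  # parameters active per token, per the article

for bits in (16, 4):
    print(f"{bits}-bit, all weights: ~{weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB")
# 16-bit, all weights: ~80 GB
# 4-bit, all weights: ~20 GB

print(f"16-bit, weights used per token: ~{weight_memory_gb(ACTIVE_PARAMS, 16):.1f} GB")
# 16-bit, weights used per token: ~7.4 GB
```

In a typical MoE deployment all experts must stay resident even though only about 3.7 billion parameters are used per token, so sparse activation lowers per-token compute more than it lowers memory; the 4-bit version is what shrinks the memory footprint.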

For businesses evaluating AI solutions, Yuan3.0Flash pairs strong capability with cost efficiency, a rare combination in today's AI market.

Key Points:

- Open Innovation: Comprehensive documentation invites community development

- Efficiency First: Smart architecture design minimizes computing costs

- Proven Performance: Outperforms established models in critical business applications

- Future-Ready: Larger versions already in development pipeline