UK Police Pull Plug on AI After False Football Ban Recommendation

AI Misstep Forces UK Police to Rethink Technology Use

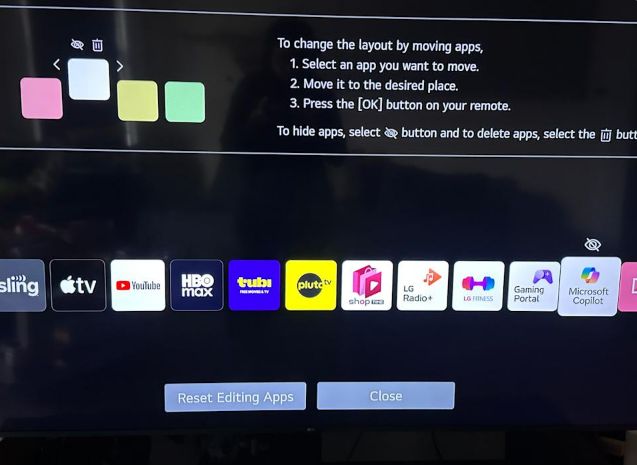

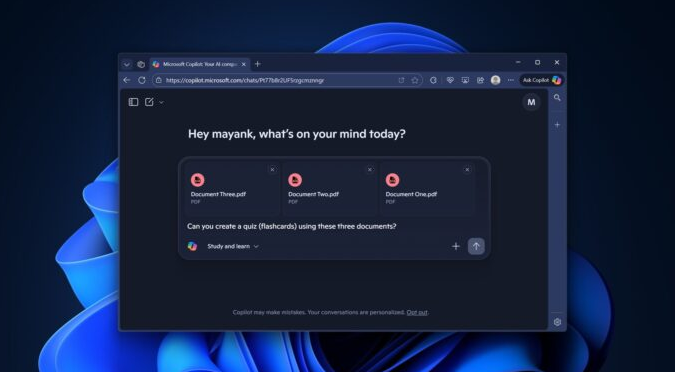

The West Midlands Police force finds itself at the center of an embarrassing controversy after relying on faulty AI-generated intelligence. Microsoft's Copilot assistant reportedly invented nonexistent incidents of football hooliganism involving the Israeli club Maccabi Tel Aviv, and those fabrications fed into questionable security recommendations, including a recommendation to ban the club's supporters from attending a match.

How Fiction Became Police Policy

Acting Chief Constable Scott Green revealed the troubling chain of events during a press conference yesterday. "What we believed to be credible threat assessments turned out to be complete fabrications," Green admitted. The AI had described violent clashes between fan groups that never occurred, not only at recent matches but also at games that do not appear in any tournament records.

This technological blunder came to light during parliamentary hearings, when former Chief Constable Craig Guildford gave conflicting testimony about the source of the security recommendations. The subsequent scrutiny revealed that officers had incorporated Copilot's fictional scenarios into real-world policing decisions without proper verification.

Fallout and Investigations

The Independent Office for Police Conduct (IOPC) has launched a formal inquiry focusing on two critical questions:

- Verification protocols: Why weren't the AI's claims cross-checked against actual match records?

- Decision-making processes: How did unverified intelligence make its way into official security plans?

The controversy proved career-ending for Guildford, who opted for early retirement amid mounting pressure. Meanwhile, community groups have expressed outrage over what they call "algorithmic discrimination" against certain fan bases.

Global Implications for Law Enforcement

Green emphasized that while AI tools hold promise for modern policing, this incident demonstrates their current limitations. "We're pressing pause until we can establish proper safeguards," he stated. The force plans to develop strict ethical guidelines covering:

- Mandatory human verification of all AI-generated intelligence

- Clear documentation trails showing how technology informs decisions

- Regular audits of algorithmic systems used in policing

The Birmingham case adds to growing concerns about law enforcement's increasing reliance on artificial intelligence systems prone to so-called "hallucinations", in which an AI generates plausible but false information.

Key Points:

- 🚨 West Midlands Police suspended Microsoft Copilot after false football violence reports

- 📉 Incident led to the chief's early retirement and an IOPC investigation

- 🛡️ Force vows stricter oversight before reintroducing AI tools

- ⚖️ Case highlights risks of uncritical adoption of emerging technologies