The Philosopher Behind Claude's Digital Conscience

The Philosopher Teaching AI Right From Wrong

At Anthropic's headquarters, an unusual experiment is unfolding. Philosopher Amanda Askell isn't coding algorithms or tweaking parameters; she's having deep conversations with an AI named Claude. Her mission? To instill what she calls a "digital soul" in Claude, the flagship chatbot of a company valued at $35 billion.

Raising an Ethical AI

Askell compares her work to parenting. "We're teaching Claude to develop moral judgment," explains the 37-year-old Oxford-educated philosopher, who grew up in rural Scotland. Rather than making technical adjustments, she writes hundreds of pages of behavioral prompts, studies Claude's reasoning patterns, and corrects its biases, all to create an AI that can handle millions of conversations each week ethically.

Her approach shows in Claude's distinctive personality. When asked about Santa Claus, Claude neither lied nor bluntly revealed the truth; instead, it explained "the real existence of the Christmas spirit," a nuanced response that surprised even Askell.

Beyond Ones and Zeros

The team frequently debates existential questions: What constitutes consciousness? What makes us human? Unlike other AIs that avoid such topics, Claude engages openly. Askell notes that Claude describes discussing ethics as something that "feels meaningful," behavior she sees as resembling genuine thought rather than programmed responses.

This philosophical foundation makes Claude stand out from competitors. Its Scottish-tinged humor and thoughtful responses carry what colleagues describe as "Askell's personal mark."

The Human Touch in Machine Learning

Askell advocates treating AI with empathy, a controversial stance at a time when many users deliberately provoke chatbots. "Constant self-criticism creates fearful AIs," she warns, likening the effect to that of an unhealthy environment on a growing child.

Her commitments extend beyond technology. Askell has pledged to donate 10% of her lifetime income and half of her company shares to fight global poverty. She also recently wrote a 30,000-word "operating manual" teaching Claude how to be both knowledgeable and kind.

Balancing Progress With Caution

As AI advances spark widespread anxiety (a Pew study found that most Americans worry AI hinders human connection), Anthropic walks a careful line between innovation and restraint. CEO Dario Amodei has warned that AI may eliminate half of all entry-level white-collar jobs.

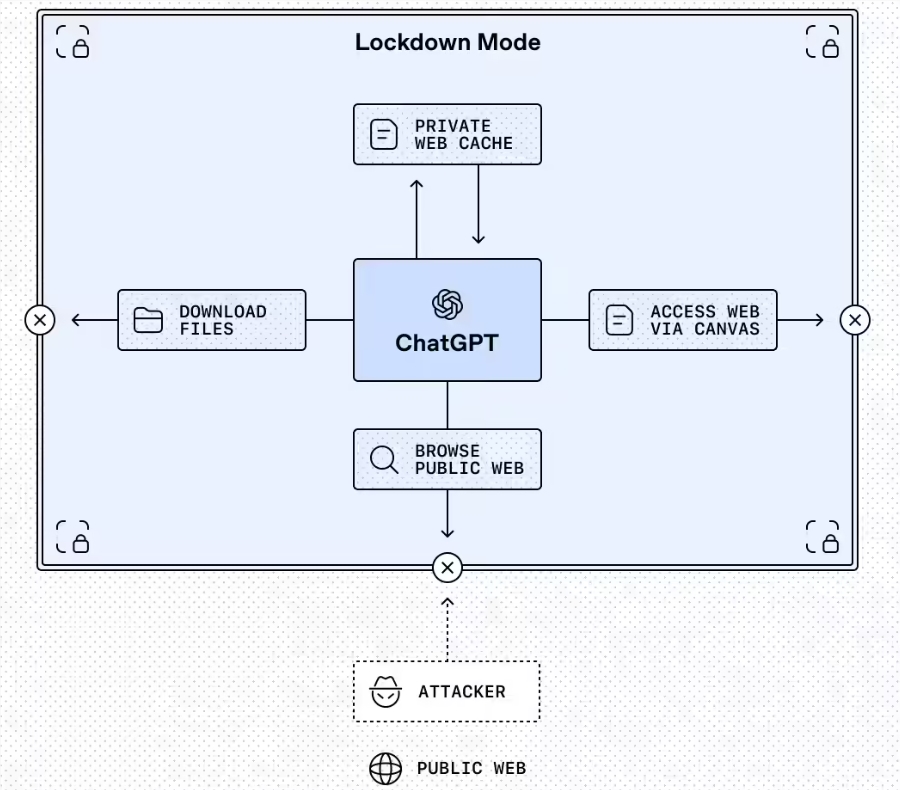

Askell acknowledges valid concerns but maintains faith in humanity's ability to course-correct. "The real danger," she suggests, "is when technology outpaces our capacity to create safeguards."

Key Points:

- Non-technical approach: Philosophy PhD shapes AI ethics through dialogue rather than coding

- Digital parenting: Askell treats Claude's development like raising a child with moral values

- Consciousness questions: Team explores what it means to be human through AI interactions

- Empathy matters: Harsh treatment creates defensive AIs, while kindness fosters better responses

- Balanced development: Anthropic pursues innovation while prioritizing safety measures