Tencent's Tiny AI Model Packs a Punch with Revolutionary 2Bit Tech

Tencent's Game-Changing Mini AI Model

Imagine running a powerful AI assistant directly on your phone without draining your battery or hogging storage. That's exactly what Tencent's Hunyuan team has achieved with their revolutionary HY-1.8B-2Bit model - an AI so compact it takes up less space than many mobile games.

The 2Bit Breakthrough

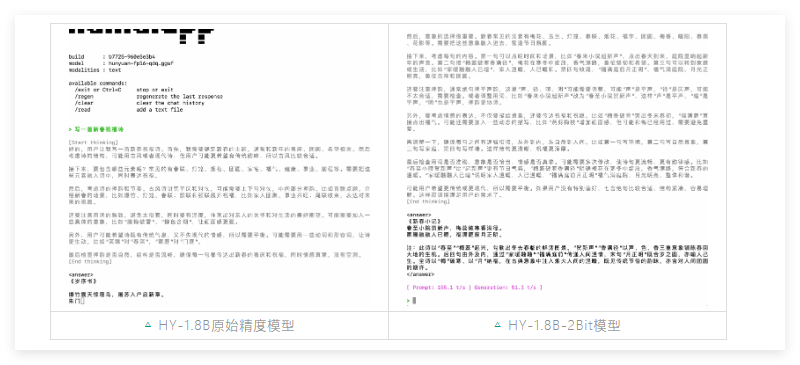

Quantization - storing a model's weights at lower numeric precision so the model takes up less space - typically comes with painful tradeoffs. More compression usually means dumber AI, and at 2 bits each weight can take only four distinct values. But Hunyuan's engineers have pulled off what many thought impossible: effective 2Bit quantization that maintains surprising intelligence.
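To make the idea concrete, here is a minimal sketch of what 2-bit quantization means for a handful of weights. It uses plain round-to-nearest on an evenly spaced grid, which is an illustrative assumption and not the Hunyuan team's method; the function names (`quantize_2bit`, `dequantize`, `pack_codes`) and the sample weights are made up for this example.

```python
def quantize_2bit(weights):
    """Round each weight to the nearest of 4 evenly spaced levels."""
    lo, hi = min(weights), max(weights)
    # 2 bits give 2**2 = 4 codes (0..3), so there are 3 steps between lo and hi.
    step = (hi - lo) / 3 or 1.0  # fall back to 1.0 if all weights are equal
    codes = [min(3, max(0, round((w - lo) / step))) for w in weights]
    return codes, lo, step


def dequantize(codes, lo, step):
    """Recover approximate weights from the 2-bit codes."""
    return [lo + c * step for c in codes]


def pack_codes(codes):
    """Store four 2-bit codes in each byte."""
    packed = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, code in enumerate(codes[i:i + 4]):
            byte |= code << (2 * j)
        packed.append(byte)
    return bytes(packed)


# Hypothetical sample weights, chosen only for illustration.
weights = [0.12, -0.70, 0.33, 0.90, -0.05, 0.50, -0.41, 0.08]
codes, lo, step = quantize_2bit(weights)
approx = dequantize(codes, lo, step)

print("codes:", codes)
print("largest rounding error:", max(abs(a - w) for a, w in zip(approx, weights)))
print(len(pack_codes(codes)), "bytes for", len(weights), "weights")
```

Every weight collapses to one of four values, so the rounding error can be as large as half a grid step. That lost detail is the tradeoff the article describes.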

"We threw out the old rulebook," explains the technical paper. Instead of standard post-training compression, they used quantization-aware training from the ground up. The result? A model that thinks clearly while occupying just 600MB of memory - smaller than your average photo album.

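A quick back-of-the-envelope check shows why the bit width matters so much for size. The sketch below assumes that the "1.8B" in the model name means roughly 1.8 billion parameters and that every weight is stored at the same bit width; both are simplifying assumptions, and `weight_megabytes` is a helper invented for this example.

```python
def weight_megabytes(num_params, bits_per_weight):
    """Raw weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bits_per_weight / 8 / 1_000_000


# Assumption: "1.8B" in the model name means about 1.8 billion parameters.
PARAMS = 1_800_000_000

for bits in (16, 4, 2):
    print(f"{bits:>2}-bit: {weight_megabytes(PARAMS, bits):.0f} MB")
# 16-bit: 3600 MB
#  4-bit: 900 MB
#  2-bit: 450 MB
```

The raw 2-bit figure of about 450MB sits below the reported 600MB. One plausible explanation, not confirmed by the article, is that some parts of the model and the quantization scales are kept at higher precision.
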
Real-World Performance That Surprises

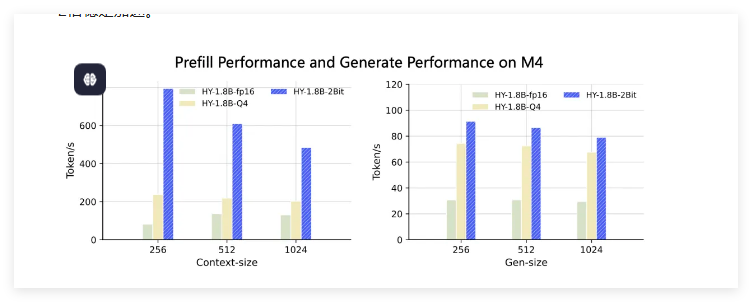

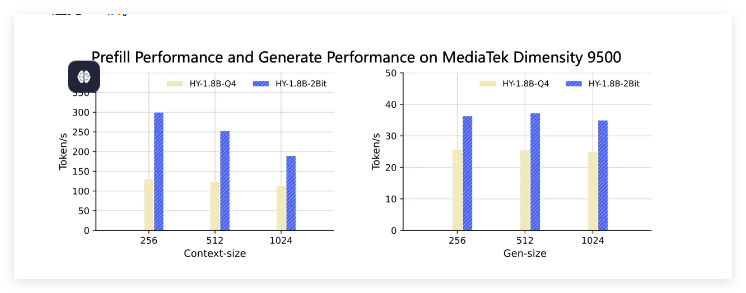

The numbers tell an impressive story:

- Speed demon: Runs 2-3x faster than full-precision models

- MacBook magic: First response appears 3-8x quicker on Apple's M4 chips

- Mobile ready: Even mid-range smartphones handle it smoothly

The team didn't sacrifice smarts for size either. In tests covering math, coding, and science, this tiny titan holds its own against bulkier 4Bit versions.

Where You'll See It Next

Tencent's already adapted the model for Arm's latest SME2 platform, opening doors for:

- Offline smartphone assistants

- Privacy-focused smart home devices

- Even AI-powered earbuds

The company hints at future upgrades through reinforcement learning, potentially closing the gap with full-size models entirely.

Key Points:

- Size: Just 600MB - smaller than many mobile games

- Performance: Holds its own against 4Bit models in math, coding, and science tests despite extreme compression

- Speed: 2-3x faster generation than full-precision models on consumer hardware

- Availability: Coming soon to smartphones and IoT devices