Robots Get Human-Like Touch With New Baihu-VTouch Dataset

Robots Gain Human-Like Touch Sensitivity

Imagine trying to thread a needle while wearing thick gloves. That's essentially the challenge robots face today when attempting delicate tasks. A new dataset aims to change that.

The Guodi Center and Weitai Robotics have teamed up to create Baihu-VTouch, a dataset that merges visual and tactile data across different robotic systems. It is more than another collection of numbers: it could become a key resource for building truly dexterous robots.

Massive Scale Meets Real-World Applications

What makes Baihu-VTouch special? For starters, its sheer size:

- Over 60,000 minutes of real-world interaction data

- Nearly 91 million individual contact samples

- High-resolution recordings capturing subtle physical changes at 120 Hz
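To put these figures in perspective, a quick back-of-the-envelope calculation helps. The sketch below uses only the three numbers quoted above. Treating "nearly 91 million" as exactly 91 million and imagining a single continuous 120 Hz stream are simplifying assumptions made here, not details published with the dataset.

```python
# Back-of-the-envelope scale check using only the figures quoted in the article.
MINUTES = 60_000               # "over 60,000 minutes" (used as a lower bound)
CONTACT_SAMPLES = 91_000_000   # "nearly 91 million" (rounded; assumption)
RATE_HZ = 120                  # quoted sampling rate

hours = MINUTES / 60
seconds = MINUTES * 60
# Frame count if one stream were recorded at 120 Hz for the whole duration.
frames_at_full_rate = seconds * RATE_HZ
# Average number of contact samples per second of recording.
contact_per_second = CONTACT_SAMPLES / seconds

print(f"{hours:.0f} hours of data")
print(f"{frames_at_full_rate:,} frames for one continuous 120 Hz stream")
print(f"{contact_per_second:.1f} contact samples per recorded second on average")
```

The contact-sample count comes out well below the full-rate frame count. One plausible reading is that a sample is counted only while a sensor is actually touching something, but the article does not say how contact samples are defined.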

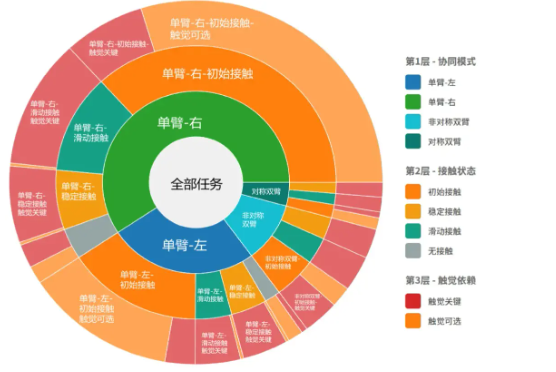

The dataset doesn't just measure pressure - it combines tactile sensor readings with RGB-D (color and depth) images and precise joint position information. This multimodal approach gives robots something closer to human perception.
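To make the idea of a combined sample concrete, here is a minimal sketch of how one record might bundle the three modalities, and how a fast tactile stream could be paired with slower camera frames by nearest timestamp. Everything in it is hypothetical: the `VisuoTactileSample` class, its field names, the 30 Hz camera rate, and the array sizes are illustrative assumptions, not the dataset's actual schema. Only the 120 Hz tactile rate comes from the article.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class VisuoTactileSample:
    """Hypothetical record layout; not the published Baihu-VTouch schema."""
    t_us: int                      # timestamp in microseconds
    tactile: List[float]           # tactile sensor readings
    rgbd_frame_index: int          # index of the closest RGB-D frame
    joint_positions: List[float]   # joint angles at this instant


def nearest_index(timestamps: Sequence[int], t: int) -> int:
    """Index of the timestamp closest to t; timestamps must be sorted ascending."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick the later neighbour only if it is strictly closer.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


# Tactile at 120 Hz (from the article); camera at 30 Hz (assumed for illustration).
tactile_times = [k * 1_000_000 // 120 for k in range(12)]
camera_times = [k * 1_000_000 // 30 for k in range(3)]

samples = [
    VisuoTactileSample(
        t_us=t,
        tactile=[0.0] * 4,            # placeholder readings
        rgbd_frame_index=nearest_index(camera_times, t),
        joint_positions=[0.0] * 7,    # placeholder joint angles
    )
    for t in tactile_times
]

for s in samples:
    print(s.t_us, "->", "RGB-D frame", s.rgbd_frame_index)
```

Nearest-timestamp matching is only one simple way to align streams recorded at different rates; interpolation or hardware-triggered synchronization are common alternatives, and the article does not state which method the dataset uses.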

One Dataset For All Robots

Traditionally, robotic sensing research has been limited by platform-specific datasets. Baihu-VTouch breaks this mold by including data from:

- Full-size humanoid robots like 'Qinglong'

- Wheeled robotic arms such as D-Wheel

- Even handheld gripper devices

This cross-platform approach means algorithms trained on Baihu-VTouch could work across different robot designs - potentially sparing developers from rebuilding their models for each new platform.

Training Robots For Everyday Life

The researchers didn't just collect random interactions. They structured the dataset around practical scenarios where touch sensitivity matters most:

- Home environments - handling delicate dishes or adjusting grip on slippery objects

- Restaurant settings - safely grasping fragile glassware or soft foods

- Factory floors - precise assembly requiring perfect pressure control

- Special operations - working in hazardous conditions where vision alone isn't enough

Early tests show robots using this visual-tactile combo can better understand contact states during tasks, leading to more natural movements and fewer failures.

The potential applications range from safer elder-care robots to more adaptable manufacturing systems. While we're not quite at human-level dexterity yet, Baihu-VTouch represents a significant step toward closing that gap.

Key Points:

- First large-scale multimodal dataset combining vision and touch sensors

- Covers multiple robot platforms for broader applicability

- Structured around real-world tasks rather than lab experiments

- Could accelerate development of robots capable of delicate manipulation