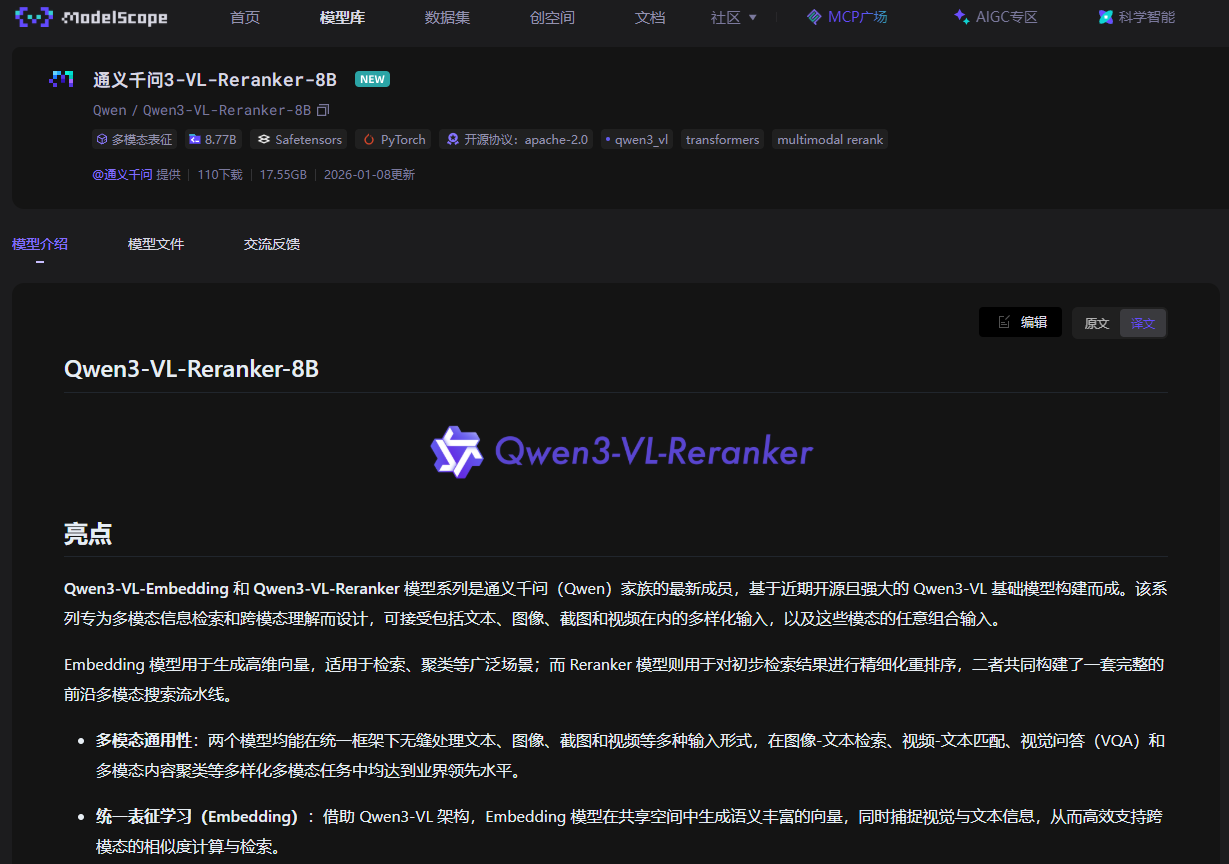

Qwen3-VL-Embedding: Your Multilingual Multimodal Search Powerhouse

Product Introduction

Ever wished you could search images using text descriptions or find videos that match written content? Qwen3-VL-Embedding makes this possible by mapping text, images, and video into one shared embedding space. Built on the Qwen3-VL foundation, it doesn't just analyze each media type in isolation; it captures how they relate to one another.

Key Features

Cross-Media Superpowers

Imagine typing "sunset over mountains" and getting matching photos, paintings, and video clips. That's Qwen3-VL-Embedding's party trick: its unified representation space puts text and visuals side by side, so a text query can be compared directly against images and video.
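To make the shared-space idea concrete, here is a minimal sketch of cross-media search by cosine similarity. The vectors are toy, hand-written values standing in for real model output, and the file names and the `search` helper are hypothetical; nothing here calls the actual Qwen3-VL-Embedding API.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy 3-dimensional vectors standing in for real embeddings (hypothetical values).
index = [
    ("photo_sunset.jpg", "image", [0.9, 0.1, 0.0]),
    ("painting_alps.png", "image", [0.7, 0.3, 0.1]),
    ("clip_city_traffic.mp4", "video", [0.0, 0.2, 0.9]),
]

def search(query_vec, index, top_k=2):
    """Rank every item, whatever its media type, by similarity to the query."""
    scored = [(cosine(query_vec, vec), name, kind) for name, kind, vec in index]
    scored.sort(reverse=True)
    return scored[:top_k]

query_vec = [0.8, 0.2, 0.0]  # pretend embedding of "sunset over mountains"
results = search(query_vec, index)
for score, name, kind in results:
    print(f"{score:.3f}  {kind:5s}  {name}")
```

Because every item lives in the same vector space, the search loop never needs to know whether it is scoring an image or a video.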

Precision That Counts

The secret sauce? A reranking stage that goes beyond simple matches: once the initial search has pulled a shortlist, the candidates are re-scored on deeper semantic connections, so the most relevant results rise to the top.
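The retrieve-then-rerank pattern can be sketched in a few lines. This is an illustration under stated assumptions, not the product's reranker: `toy_rerank_score` is a hypothetical word-overlap scorer standing in for a real reranking model, and the captions and `embed_score` values are made up.

```python
def retrieve(candidates, top_n):
    """Stage 1: keep the top_n items by precomputed embedding similarity."""
    return sorted(candidates, key=lambda c: c["embed_score"], reverse=True)[:top_n]

def toy_rerank_score(query, caption):
    """Hypothetical stand-in for a reranking model: share of query words in the caption."""
    q_words = set(query.lower().split())
    c_words = set(caption.lower().split())
    return len(q_words & c_words) / len(q_words)

def rerank(query, shortlist):
    """Stage 2: re-score only the shortlist with the slower, more precise scorer."""
    return sorted(
        shortlist,
        key=lambda c: toy_rerank_score(query, c["caption"]),
        reverse=True,
    )

# Made-up candidates; embed_score plays the role of first-stage similarity.
candidates = [
    {"id": "a", "caption": "mountains at noon", "embed_score": 0.91},
    {"id": "b", "caption": "sunset over mountains", "embed_score": 0.88},
    {"id": "c", "caption": "city street at night", "embed_score": 0.35},
    {"id": "d", "caption": "sunset at the beach", "embed_score": 0.80},
]

query = "sunset over mountains"
shortlist = retrieve(candidates, top_n=3)
final = rerank(query, shortlist)
print([c["id"] for c in final])
```

The design point is cost: the cheap first stage scans everything, while the expensive scorer only ever sees the shortlist.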

Global Ready

With support for 30+ languages out of the box, researchers worldwide can work comfortably in their native tongues while accessing international content.

Flexible Framework

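The model adapts to your needs: adjust vector dimensions depending on whether you prioritize speed or precision in your specific application. Smaller vectors are cheaper to store and faster to compare, while larger ones preserve more detail.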
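One common way to trade precision for speed is to keep only the leading components of a vector and rescale it to unit length. The sketch below assumes the embeddings tolerate this kind of truncation, which this overview does not confirm; `truncate_and_normalize` is a hypothetical helper, and the input vector is a toy value.

```python
import math

def truncate_and_normalize(vec, dim):
    """Keep the first `dim` components and rescale the result to unit length."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in head]

full = [0.5, 0.5, 0.5, 0.5]  # toy 4-dimensional unit vector
small = truncate_and_normalize(full, 2)
print(len(full), len(small))
```

Halving the dimension halves the storage per item and roughly halves the work of each similarity comparison.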

Product Data

- Supported Inputs: Text (30+ languages), Images (JPEG/PNG), Videos (MP4/MOV)

- Processing Speed: Designed for fast embedding generation; measure on your own hardware and input sizes for concrete numbers

- Integration: Plays nicely with existing Python-based systems through simple API calls

- Video Handling: Smart frame sampling extracts key moments without processing entire clips
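The overview does not say how frames are chosen, so as a rough illustration of frame sampling in general, here is the simplest variant: evenly spaced indices, one from the middle of each segment. `sample_frame_indices` is a hypothetical helper, and uniform spacing is a stand-in for whatever "smart" selection the model actually uses.

```python
def sample_frame_indices(total_frames, num_samples):
    """Pick evenly spaced frame indices, taking the middle of each segment."""
    if total_frames <= 0 or num_samples <= 0:
        return []
    # Never ask for more frames than the clip contains.
    num_samples = min(num_samples, total_frames)
    segment = total_frames / num_samples
    return [int(segment * i + segment / 2) for i in range(num_samples)]

# A 300-frame clip reduced to 4 representative frames.
indices = sample_frame_indices(total_frames=300, num_samples=4)
print(indices)
```

Only the sampled frames would then need to be decoded and embedded, which is where the savings over processing the whole clip come from.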

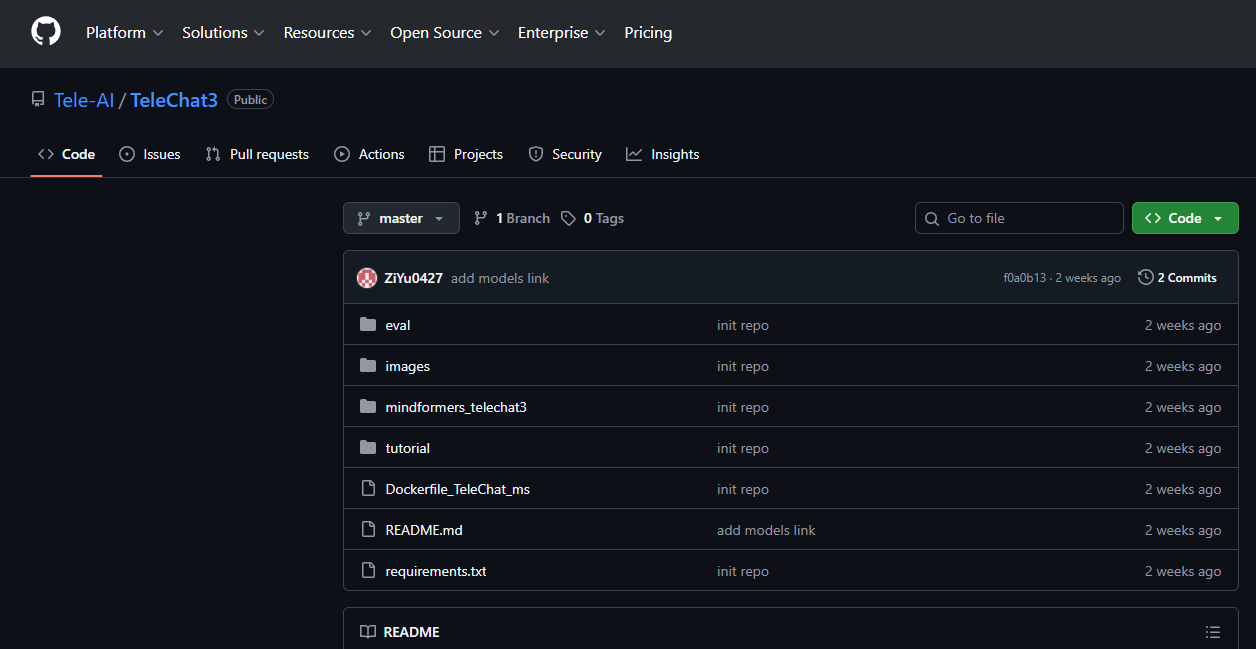

The best way to understand its capabilities? Dive into the GitHub repository, where you'll find installation guides, sample code, and pretrained models ready for experimentation.