OpenAI Bolsters ChatGPT Security Against Sneaky Prompt Attacks

OpenAI Tightens ChatGPT's Security Belt

In an era where AI assistants routinely browse the web and interact with external apps, OpenAI is shoring up ChatGPT's defenses against increasingly sophisticated attacks. The company recently introduced two significant security enhancements designed to protect users from prompt injection vulnerabilities - a growing concern as AI systems become more interconnected.

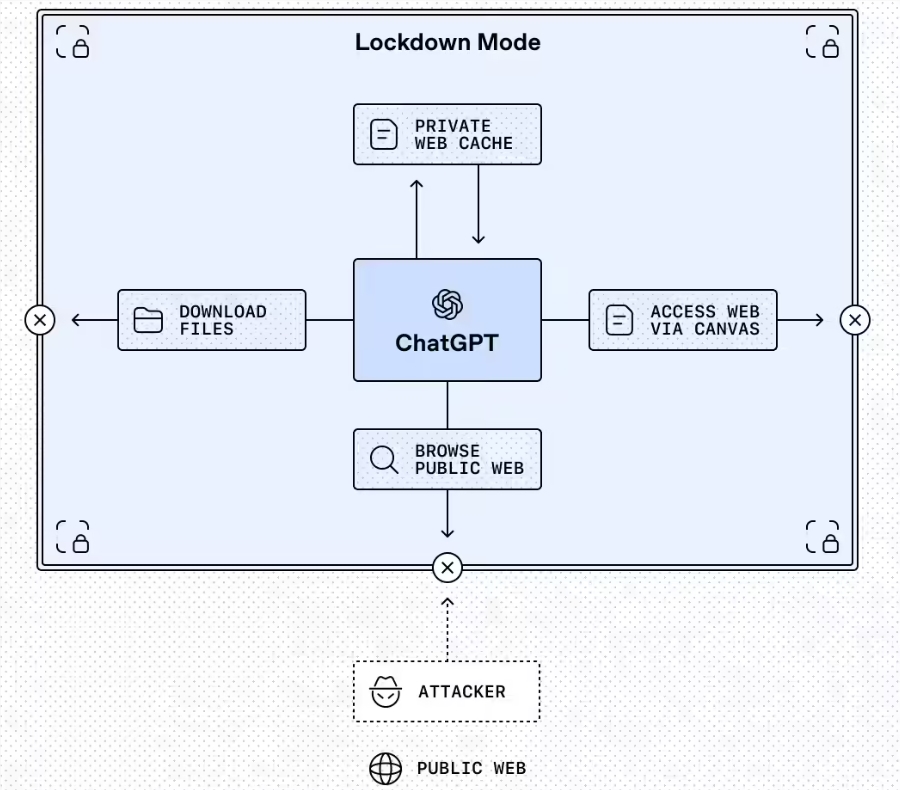

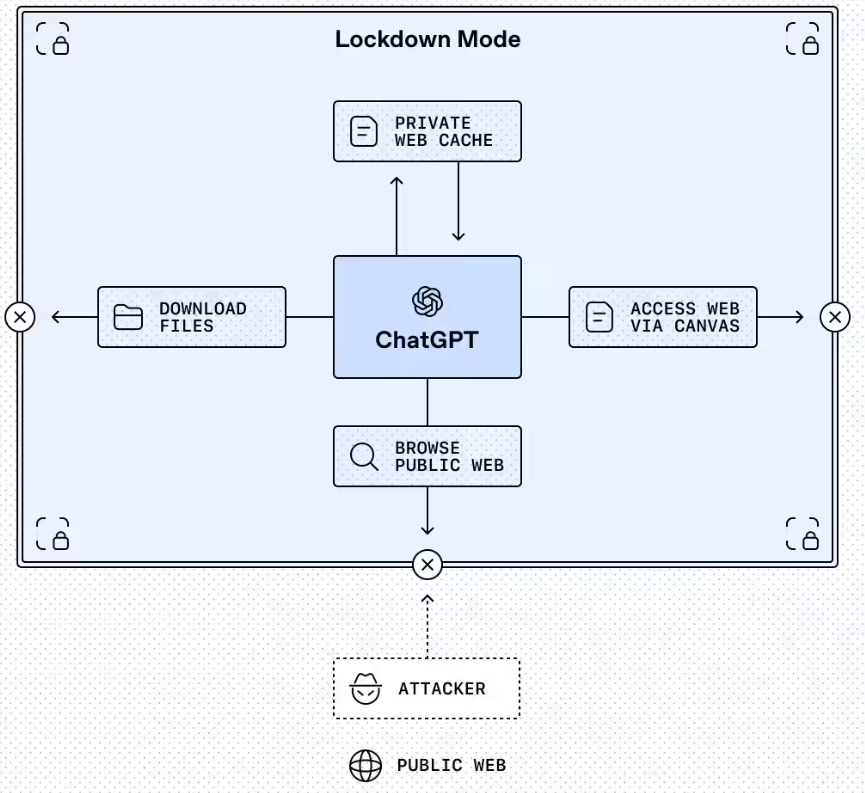

Lockdown Mode: Fort Knox for Sensitive Conversations

The first innovation, dubbed Lockdown Mode, functions like a digital panic room for high-stakes interactions. Currently available for enterprise, education, healthcare and teacher versions, this optional setting severely restricts how ChatGPT communicates with external systems. Imagine it as temporarily disabling all windows and doors while discussing classified information.

"We designed Lockdown Mode specifically for situations where data security can't be compromised," explained an OpenAI spokesperson. When activated, the mode:

- Limits web browsing to cached content only

- Automatically disables features lacking robust security guarantees

- Allows administrators to fine-tune permitted applications

The controls aren't just binary switches either. Organization admins can create custom roles specifying exactly which operations remain available during lockdown periods. Surprisingly versatile, the system even provides compliance logs perfect for regulatory audits - a must-have feature for healthcare and financial institutions.

Warning Labels Arrive for Risky Features

The second measure introduces standardized "Elevated Risk" tags across ChatGPT's ecosystem (including Atlas and Codex). These visual warnings highlight functions that - while useful - carry higher security implications.

"Some capabilities genuinely improve productivity but come with tradeoffs," notes OpenAI's security team. The labels serve as clear signposts, particularly when dealing with private data or enabling network access features.

The warnings don't just flash red lights either. Each tagged feature includes:

- Concise explanations of potential risks

- Recommended usage scenarios

- Practical mitigation strategies

- Clear indicators of what changes upon activation

Why These Changes Matter Now

The updates arrive as prompt injection attacks grow more sophisticated. Hackers have discovered they can manipulate AI systems by embedding malicious commands within seemingly innocent prompts - potentially triggering unauthorized actions or data leaks.

OpenAI's approach combines proactive restriction (Lockdown Mode) with transparent communication (risk labels). While consumer versions will gain access soon, enterprise clients already benefit from these protections today.

The moves demonstrate how seriously OpenAI takes security as its models become workplace staples rather than just conversational curiosities.

Key Points:

- Lockdown Mode severely limits external interactions for sensitive use cases

- Elevated Risk tags provide clear warnings about potentially dangerous functions

- Both measures build upon existing sandboxing and URL protection systems

- Enterprise/education versions get first access before consumer rollout

- Comes amid growing concerns about prompt injection vulnerabilities