NVIDIA Open-Sources Audio2Face AI for Real-Time Animation

NVIDIA Democratizes Facial Animation with Open-Source Audio2Face

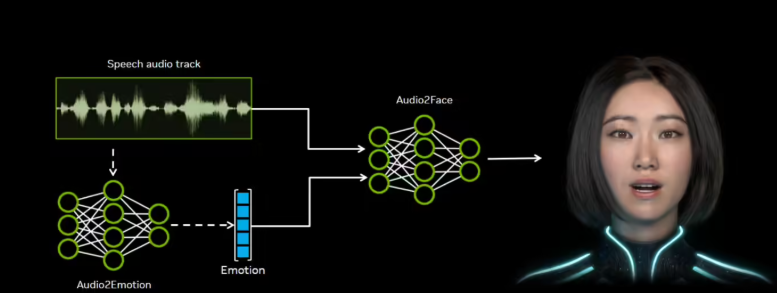

In a major move for digital content creation, NVIDIA has open-sourced its Audio2Face generative AI model. The technology converts audio input into realistic facial animation in real time, with applications spanning gaming, film production, and virtual customer service.

Technical Capabilities

The system analyzes acoustic features such as phonemes, intonation, and speech patterns to generate accurate lip-sync along with matching emotional expressions. NVIDIA's release includes:

- Complete software development kit (SDK)

- Training framework for model customization

- Plugins for Autodesk Maya and Unreal Engine 5.5+

- Both regression and diffusion model architectures

The technology supports two operating modes: offline rendering for pre-recorded audio and real-time streaming for dynamic, AI-driven characters.
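The difference between the two modes can be illustrated with a minimal Python sketch. It is not the Audio2Face SDK or its API: the `jaw_open_weight` function is a hypothetical stand-in that maps loudness to a single mouth-opening weight, where the real system uses trained regression or diffusion models. The sample rate, frame size, and all function names are assumptions chosen for illustration. The point is only that offline mode sees the whole clip at once, while real-time mode must emit animation values as audio chunks arrive.

```python
import math
from typing import Iterable, Iterator, List

SAMPLE_RATE = 16_000  # assumed sample rate in Hz
FRAME_SIZE = 320      # assumed 20 ms analysis frame (50 animation values per second)


def jaw_open_weight(frame: List[float]) -> float:
    """Hypothetical stand-in for a learned model: RMS loudness -> weight in [0, 1]."""
    if not frame:
        return 0.0
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    return min(1.0, rms * 2.0)  # arbitrary gain, clamped to 1.0


def animate_offline(samples: List[float]) -> List[float]:
    """Offline mode: the full clip is available, so every frame is processed in one pass."""
    return [
        jaw_open_weight(samples[i:i + FRAME_SIZE])
        for i in range(0, len(samples), FRAME_SIZE)
    ]


def animate_streaming(chunks: Iterable[List[float]]) -> Iterator[float]:
    """Real-time mode: audio arrives in chunks of any size; emit a weight per full frame."""
    buffer: List[float] = []
    for chunk in chunks:
        buffer.extend(chunk)
        while len(buffer) >= FRAME_SIZE:
            yield jaw_open_weight(buffer[:FRAME_SIZE])
            del buffer[:FRAME_SIZE]
    if buffer:  # flush the trailing partial frame at end of stream
        yield jaw_open_weight(buffer)


def sine_clip(seconds: float, amplitude: float = 0.4, freq: float = 220.0) -> List[float]:
    """Generate a synthetic test tone so the example needs no audio file."""
    n = round(seconds * SAMPLE_RATE)
    return [amplitude * math.sin(2 * math.pi * freq * t / SAMPLE_RATE) for t in range(n)]


if __name__ == "__main__":
    clip = sine_clip(0.1)
    offline = animate_offline(clip)
    # Simulate a live feed delivering 500 samples at a time.
    live_feed = (clip[i:i + 500] for i in range(0, len(clip), 500))
    streamed = list(animate_streaming(live_feed))
    print(len(offline), streamed == offline)
```

Both paths produce the same per-frame values here because the placeholder model looks at one frame at a time. A real model that uses surrounding audio context would trade some of that context for lower latency in streaming mode.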

Industry Adoption

Leading game studios have already integrated Audio2Face into production pipelines:

- Survios implemented the technology in Alien: Rogue Incursion Evolved Edition, streamlining its lip-sync and facial capture processes

- The Farm 51 used it extensively in Chernobylite 2: Exclusion Zone, cutting animation production time significantly

Wojciech Pazdur, Creative Director at The Farm 51, called the technology "a revolutionary breakthrough" for character animation.

Developer Resources

The open-source package, available through NVIDIA's developer portal, includes:

- Core animation algorithms

- Local execution plugins

- Complete training framework documentation

- Sample implementation code

Developers can fine-tune models using proprietary datasets to create domain-specific solutions.
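As a rough illustration of what fine-tuning means in practice, the sketch below continues training a tiny "pretrained" model on a new dataset. It does not use NVIDIA's training framework: the one-feature linear model, the parameter values, and the dataset are all hypothetical, chosen so the example runs with the Python standard library alone. The idea it shows is general: start from existing parameters rather than from scratch, then take gradient steps on the new data.

```python
from typing import List, Tuple

# Each sample pairs one audio feature with a target blendshape weight (both hypothetical).
Sample = Tuple[float, float]


def predict(w: float, b: float, x: float) -> float:
    """Toy one-feature linear model standing in for a real animation network."""
    return w * x + b


def mse(w: float, b: float, data: List[Sample]) -> float:
    """Mean squared error of the model on a dataset."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(w: float, b: float, data: List[Sample],
              lr: float = 0.5, epochs: int = 200) -> Tuple[float, float]:
    """Continue training from the given (pretrained) parameters with gradient descent."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    pretrained_w, pretrained_b = 0.5, 0.0  # assumed starting parameters
    # Assumed studio-specific data following y = 0.8 * x + 0.1.
    studio_data = [(x, 0.8 * x + 0.1) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]
    before = mse(pretrained_w, pretrained_b, studio_data)
    w, b = fine_tune(pretrained_w, pretrained_b, studio_data)
    after = mse(w, b, studio_data)
    print(f"loss before: {before:.4f}, after: {after:.6f}")
```

A production workflow would swap the toy model for the released networks and the synthetic pairs for recorded audio with matching animation data, but the structure of the loop stays the same.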

Future Implications

This release lowers the barrier to creating expressive virtual characters across industries. As NVIDIA continues to expand its AI tools ecosystem, more sophisticated digital interactions are likely to follow in entertainment, education, and enterprise applications.

Key Points:

- 🚀 Open-source availability: Full SDK and training framework released publicly

- ⏱️ Dual processing modes: Supports both pre-rendered and real-time applications

- 🎮 Proven adoption: Already implemented in major game titles

- 🔧 Developer-friendly: Includes plugins for Autodesk Maya and Unreal Engine

- 💡 Customizable: Models can be fine-tuned with proprietary data