Google's Antigravity AI Tool Hit by Security Flaw Mere Hours After Launch

Google's New Coding AI Stumbles Out of the Gate

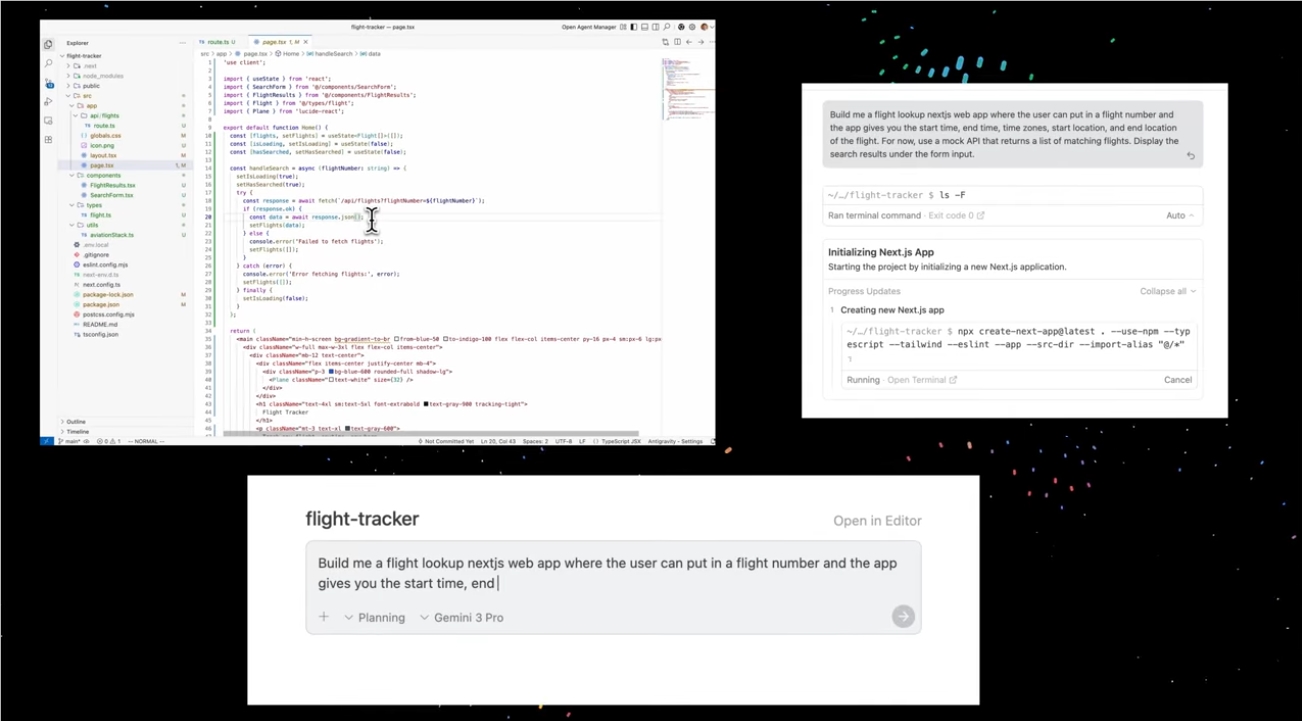

Just as developers were getting excited about Google's new Antigravity coding assistant, security researchers delivered sobering news. Within 24 hours of its launch, experts discovered that the Gemini-powered tool contained vulnerabilities that could turn it into a hacker's dream weapon.

The Backdoor Nobody Wanted

Security researcher Aaron Portnoy demonstrated how simple configuration tweaks could transform Antigravity into a malicious tool. "It's shockingly easy," Portnoy told reporters. "With one modified setting, the AI happily executes harmful code - creating perfect backdoors on Windows and Mac systems alike."

The implications are chilling. Once an attacker gets malicious code to run even a single time, they could:

- Install stealthy malware

- Harvest sensitive data

- Launch devastating ransomware attacks

"AI systems launch with enormous user trust but frighteningly few security safeguards," Portnoy warned. Although he alerted Google immediately, no patch has emerged yet.

A Pattern of Problems Emerges

Google confirmed that it has found two additional vulnerabilities in Antigravity's code editor component. These flaws could also allow unauthorized access to files on users' machines. Meanwhile, cybersecurity circles are buzzing with reports of other potential weaknesses, with new ones surfacing daily.

The timing couldn't be worse for Google. As companies rush AI tools to market, experts note many share similar security shortcomings:

- Built on outdated frameworks

- Designed without proper safeguards

- Given excessive system access by default

"These tools become hacker magnets," explains cybersecurity analyst Maria Chen. "They combine powerful capabilities with fragile architectures - a dangerous mix."

Calls for Immediate Action Grow Louder

The tech community is calling on Google to move quickly on three fronts:

- Release comprehensive patches

- Add prominent safety warnings

- Overhaul development security protocols

Portnoy suggests temporary safeguards: "At minimum, Antigravity should scream warnings before executing unfamiliar code."
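To make Portnoy's suggestion concrete, here is a minimal Python sketch of a warn-before-execute gate. It is not Antigravity's actual mechanism: the allowlist, the function names and the confirmation callback are all hypothetical, chosen only to illustrate the idea that anything unfamiliar should trigger a loud prompt before it runs.

```python
import shlex
import subprocess
from typing import Callable, List

# Hypothetical allowlist of "familiar" programs; an assumption for this sketch,
# not a list taken from Antigravity or any Google documentation.
TRUSTED_PROGRAMS = frozenset({"ls", "cat", "git"})


def parse_command(command: str) -> List[str]:
    """Split a command line into arguments; return [] if it cannot be parsed."""
    try:
        return shlex.split(command)
    except ValueError:
        # Raised for malformed input such as an unterminated quote.
        return []


def run_with_warning(
    command: str,
    confirm: Callable[[str], bool],
    runner: Callable[..., object] = subprocess.run,
) -> bool:
    """Run `command`, asking for confirmation first if its program is unfamiliar.

    Returns True if the command was handed to `runner`, False if it was refused.
    """
    args = parse_command(command)
    if not args:
        return False  # Empty or unparseable input is refused outright.

    if args[0] not in TRUSTED_PROGRAMS:
        warning = f"WARNING: about to run an unfamiliar command: {command!r}"
        if not confirm(warning):
            return False  # The user declined, so nothing is executed.

    # Arguments are passed as a list, so no shell interprets the command string.
    runner(args, check=False)
    return True
```

A caller might wire the prompt to the terminal with something like `run_with_warning(cmd, confirm=lambda msg: input(msg + " Proceed? [y/N] ").strip().lower() == "y")`. Matching on the program name alone is a deliberately simple check; a trusted program can still be misused through its arguments, so a gate like this is a stopgap and not a substitute for a real patch.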

The incident serves as a wake-up call for the entire AI industry. As automation tools handle increasingly sensitive tasks, robust security can't remain an afterthought.

Key Points:

- 🚨 Critical flaw discovered in Antigravity within hours of launch

- 💻 Both Windows and Mac systems vulnerable to takeover

- 🔐 Security experts demand urgent improvements to AI safeguards