Google DeepMind Unveils SIMA 2: A Smarter AI Agent That Learns Like Humans

Google DeepMind's SIMA 2 Shows Remarkable Learning Abilities

In a move that pushes artificial intelligence closer to human-like understanding, Google DeepMind has released SIMA 2, an advanced version of its multimodal agent that demonstrates unprecedented learning capabilities.

Twice as Effective With Self-Teaching Skills

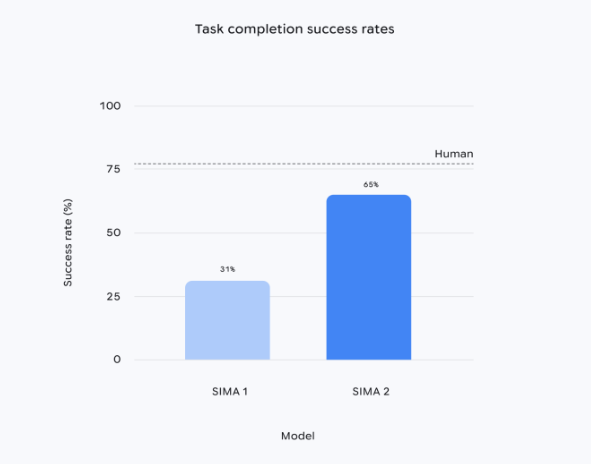

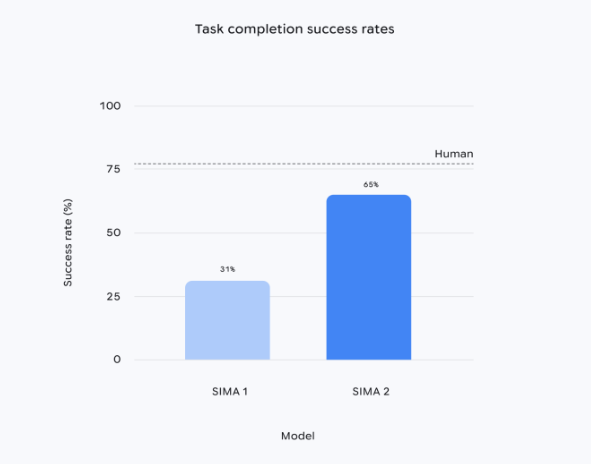

SIMA 2 is built on the Gemini 2.5 Flash-Lite model and delivers substantially better performance than the original SIMA. Early tests show it succeeds at tasks about twice as often as its predecessor. More impressively, it adapts to environments it has never seen before, a crucial step toward general artificial intelligence.

How SIMA 2 Learns Without Supervision

What sets this version apart is its innovative learning approach:

- Self-generated training: SIMA 2 still learns from hundreds of hours of pre-recorded game footage, but it no longer relies on that footage alone. It also creates its own practice scenarios.

- Internal quality control: A separate Gemini model generates potential tasks, while an internal scoring system identifies the most valuable learning experiences.

- Continuous improvement: High-quality examples get fed back into training, creating a virtuous cycle of enhancement without human intervention.
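The three steps above can be sketched as a toy loop. This is only an illustration under stated assumptions, not DeepMind's implementation: `propose_tasks`, `attempt`, the numeric `skill` value, and the 0.6 score threshold are all hypothetical stand-ins for the task-generating model, the agent, and the internal scoring system.

```python
import random
from dataclasses import dataclass


@dataclass
class Episode:
    task: str
    score: float  # hypothetical quality score in [0, 1]


def propose_tasks(rng, n):
    """Stand-in for the task-generating model: returns n task strings."""
    verbs = ["find", "chop down", "walk to"]
    objects = ["the red house", "trees", "the bench"]
    return [f"{rng.choice(verbs)} {rng.choice(objects)}" for _ in range(n)]


def attempt(task, skill, rng):
    """Stand-in for the agent trying a task; the score is a toy number."""
    noisy = skill + rng.uniform(-0.3, 0.3)
    return Episode(task, min(1.0, max(0.0, noisy)))


def self_improve(rounds=3, tasks_per_round=20, threshold=0.6, seed=0):
    rng = random.Random(seed)
    skill = 0.5   # toy proxy for agent ability (assumption)
    replay = []   # high-scoring episodes kept for further training
    for _ in range(rounds):
        tasks = propose_tasks(rng, tasks_per_round)
        episodes = [attempt(t, skill, rng) for t in tasks]
        # Internal quality control: keep only episodes above the threshold.
        kept = [e for e in episodes if e.score >= threshold]
        replay.extend(kept)
        # Toy "training": nudge skill up in proportion to kept examples.
        skill = min(1.0, skill + 0.01 * len(kept))
    return skill, replay
```

Calling `self_improve()` returns the final toy skill value and the list of retained episodes; no human labels enter the loop at any point.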

"This allows SIMA to interpret complex instructions like 'find the red house' or 'chop down trees' in unfamiliar settings," explains Jane Wang, a senior research scientist at DeepMind. "It reads environmental cues—text, colors, symbols—even emoji combinations."

Bridging Virtual and Physical Worlds

During demonstrations combining SIMA with Genie (DeepMind's world-generation model), the AI displayed remarkable environmental awareness:

- Recognized and interacted with objects like benches and trees

- Identified living elements such as butterflies

- Demonstrated logical action sequences based on scene analysis

The "understand-plan-act" loop mirrors how humans operate in new environments—a critical capability for future robotics applications.
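As a rough illustration of an understand-plan-act loop, here is a minimal sketch in a one-dimensional toy world. Everything in it is an assumption made for the example: the `GridWorld` class, the keyword-matching `understand` step, and the one-step `plan` function are hypothetical and say nothing about how SIMA 2 or Genie work internally.

```python
from dataclasses import dataclass, field


@dataclass
class GridWorld:
    """Toy 1-D world: the agent and each object sit at an integer position."""
    agent: int = 0
    objects: dict = field(default_factory=lambda: {"bench": 3, "tree": 5})

    def observe(self):
        return {"agent": self.agent, "objects": dict(self.objects)}

    def step(self, action):
        self.agent += {"right": 1, "left": -1}[action]


def understand(observation, instruction):
    """Understand: pick the object whose name appears in the instruction."""
    for name, position in observation["objects"].items():
        if name in instruction:
            return position
    return None


def plan(agent_pos, target_pos):
    """Plan: choose the next move, or None when there is nothing to do."""
    if target_pos is None or target_pos == agent_pos:
        return None
    return "right" if target_pos > agent_pos else "left"


def run(world, instruction, max_steps=20):
    """Act: repeat observe -> understand -> plan -> step until done."""
    trace = []
    for _ in range(max_steps):
        obs = world.observe()
        target = understand(obs, instruction)
        action = plan(obs["agent"], target)
        if action is None:
            break
        world.step(action)
        trace.append(action)
    return trace
```

With the default world, `run(GridWorld(), "walk to the tree")` moves the agent right five times, while an instruction naming no known object produces an empty trace.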

Current Limitations and Future Directions

While promising, SIMA 2 focuses exclusively on high-level decision-making:

- Doesn't control physical components like joints or wheels

- Works alongside but isn't yet integrated with DeepMind's robotic foundation models

The team remains tight-lipped about commercialization timelines but hopes this preview will spark collaborations to bridge virtual and physical AI applications.

Key Points:

- Performance boost: SIMA 2 succeeds at tasks about twice as often as the original SIMA, roughly a 100% relative improvement

- Self-supervised learning: Creates and evaluates its own training scenarios

- Environmental understanding: Processes visual cues including text and symbols

- AGI pathway: Represents progress toward general artificial intelligence

- Research phase: Not yet ready for real-world robotics integration