Google cracks AI's memory problem with onion-inspired learning

Google Solves AI's Biggest Memory Flaw

Imagine spending years mastering chess, only to forget how the pieces move after learning checkers. That's been the frustrating reality for artificial intelligence - until now. Google's research team has unveiled Nested Learning, a radical new approach that finally tackles AI's notorious "catastrophic forgetting" problem.

Why AI Keeps Forgetting

Traditional neural networks learn like chalk on a blackboard: new information wipes away the old. When an AI trained to write poetry learns coding, its literary skills vanish almost completely. Scientists call this "catastrophic forgetting," and it has forced developers to build specialized AIs rather than versatile learners.
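
To make the blackboard effect concrete, here is a toy sketch in Python. It is not Google's code or benchmark: it assumes a "network" with a single weight and two made-up tasks (fit `y = 2x`, then fit `y = -2x`), and the names `train` and `error` are invented for the illustration.

```python
# Toy illustration of catastrophic forgetting (hypothetical setup, not from the research).
XS = [-1.0, -0.5, 0.5, 1.0]  # shared inputs for both made-up tasks


def train(weight, slope, steps=200, lr=0.1):
    """Fit y = weight * x to the target y = slope * x with plain gradient descent."""
    for _ in range(steps):
        grad = sum(2 * (weight * x - slope * x) * x for x in XS) / len(XS)
        weight -= lr * grad
    return weight


def error(weight, slope):
    """Mean squared error of the one-weight model on the task with the given slope."""
    return sum((weight * x - slope * x) ** 2 for x in XS) / len(XS)


w = train(0.0, slope=2.0)        # learn task A
error_a_before = error(w, 2.0)   # close to zero: task A is mastered
w = train(w, slope=-2.0)         # learn task B, reusing the same weight
error_a_after = error(w, 2.0)    # large: task A has been overwritten

print(f"Task A error before task B: {error_a_before:.6f}")
print(f"Task A error after task B:  {error_a_after:.6f}")
```

Because both tasks share the only weight the model has, mastering the second task necessarily erases the first. Real networks have far more parameters, but the same overwriting happens whenever new training moves the weights the old skill depended on.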

The Memory Onion Solution

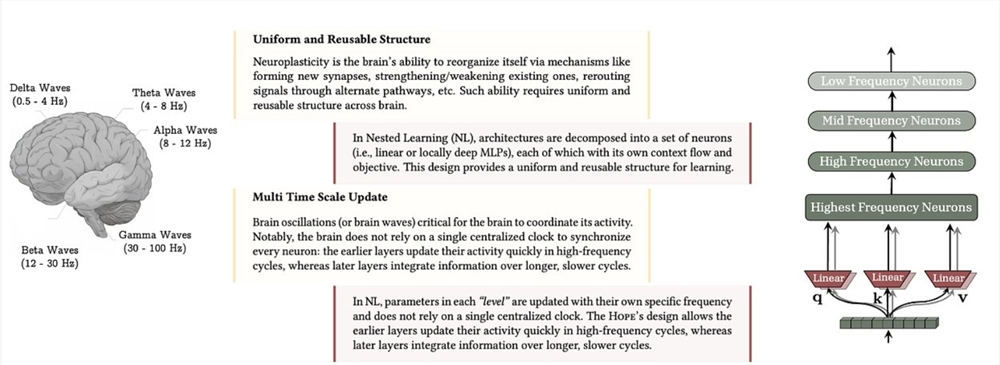

Google's breakthrough borrows from the way the human brain stores different kinds of memories on different timescales:

- Outer layers handle quick tasks (like remembering a conversation)

- Middle layers integrate recent experiences

- Core layers safeguard fundamental knowledge (like language rules)

This "memory onion" architecture lets each layer update at its own pace. The result? New knowledge sticks while old skills remain sharp.
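
The idea of layers updating at their own pace can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the Nested Learning or HOPE implementation: the `MemoryLayer` class, the update intervals (1, 10, and 100 steps), and the learning rates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MemoryLayer:
    """One 'onion layer' holding a single value (hypothetical, for illustration only)."""
    name: str
    update_every: int       # apply an update once every N training steps
    learning_rate: float
    value: float = 0.0
    pending: float = 0.0    # learning signal accumulated since the last update
    updates_applied: int = 0

    def observe(self, step, gradient):
        # Every layer sees every signal, but only acts on it at its own interval.
        self.pending += gradient
        if step % self.update_every == 0:
            self.value -= self.learning_rate * self.pending / self.update_every
            self.pending = 0.0
            self.updates_applied += 1


def train(layers, gradients):
    """Feed the same stream of learning signals to every layer."""
    for step, gradient in enumerate(gradients, start=1):
        for layer in layers:
            layer.observe(step, gradient)
    return layers


layers = train(
    [
        MemoryLayer("outer", update_every=1, learning_rate=0.5),
        MemoryLayer("middle", update_every=10, learning_rate=0.1),
        MemoryLayer("core", update_every=100, learning_rate=0.01),
    ],
    [1.0] * 100,  # made-up learning signal, identical at every step
)

for layer in layers:
    print(layer.name, layer.updates_applied, round(layer.value, 4))
```

In this sketch the outer layer changes at every step, while the core layer changes once per hundred steps and by a much smaller amount, so a burst of new information barely moves it. That slow drift is the intuition behind protecting fundamental knowledge.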

Testing Shows Remarkable Results

The prototype system HOPE achieved:

- 98% retention of old skills when learning new ones (versus 70% for earlier approaches)

- 20% better accuracy at recalling information from long conversations

- More human-like gradual forgetting instead of sudden memory drops
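
The figures above are reported without a formula. One plausible reading, offered here as an assumption rather than the researchers' method, is that retention is the share of a skill's original accuracy that survives after the model learns something new. The `retention` function and the accuracy values below are invented to show the arithmetic.

```python
def retention(accuracy_before, accuracy_after):
    """Share of the original accuracy on an old task that survives new training."""
    if accuracy_before <= 0:
        raise ValueError("accuracy_before must be positive")
    return accuracy_after / accuracy_before


# Made-up accuracies, chosen only to illustrate the calculation.
old_task_before = 0.90   # accuracy on the old task before learning a new one
old_task_after = 0.63    # accuracy on the same task afterwards

score = retention(old_task_before, old_task_after)
print(f"Retention: {score:.0%}")
```

Under this definition, a model that scored 90% on an old task and 63% after new training would have retained about 70% of that skill.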

Real-World Impact

This technology could transform:

- Chatbots that continuously improve without retraining

- Medical AIs that learn from new cases without forgetting textbook knowledge

- Robots that acquire skills safely without "forgetting" basic movements

The era of truly lifelong learning machines may have just begun.

Key Points:

- Google's Nested Learning solves AI's memory retention problem

- Mimics the human brain's layered memory system

- Early tests show near-perfect skill retention

- Could enable continuously learning AI assistants and robots