Baidu's ERNIE-4.5 Model Tops Hugging Face Rankings


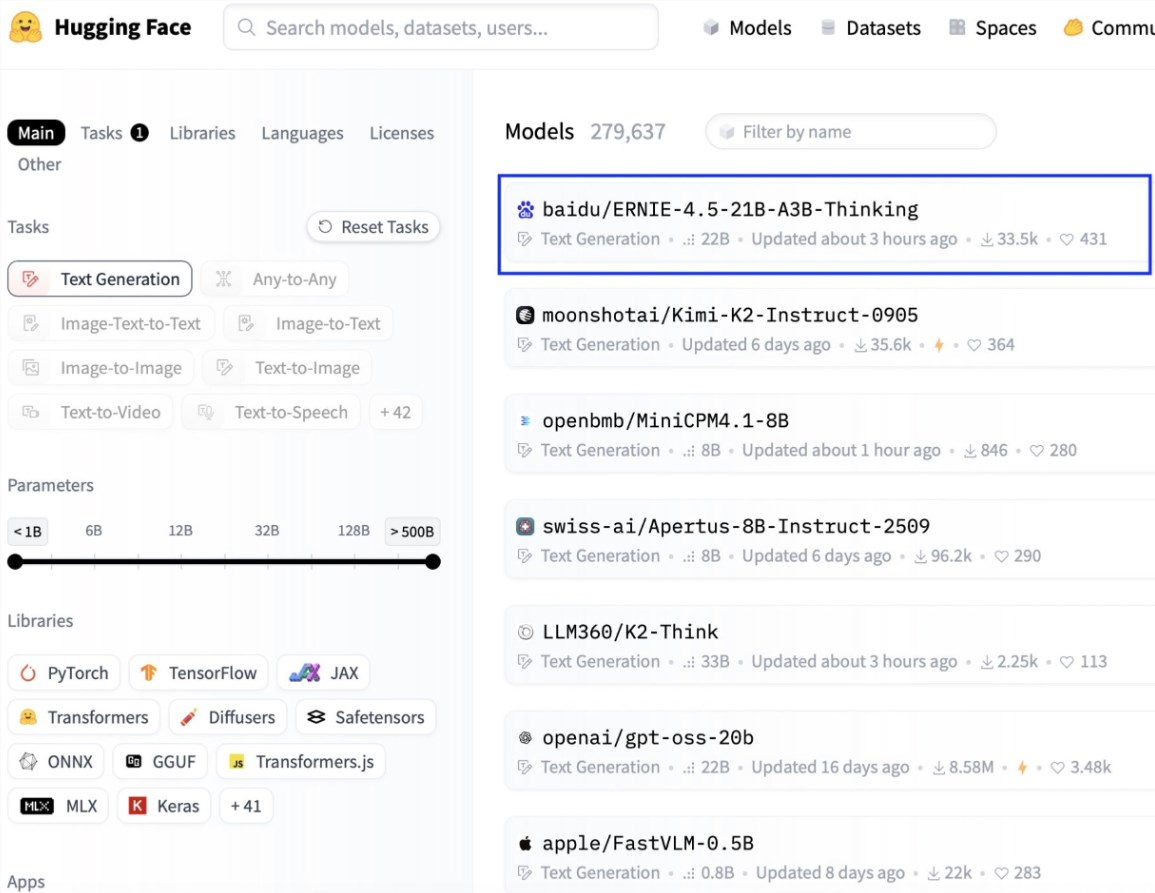

Baidu's ERNIE large model family has achieved a major breakthrough with its latest release, ERNIE-4.5-21B-A3B-Thinking, which has quickly risen to the top of Hugging Face's text generation model rankings while securing third place in the platform's overall model list. This achievement underscores China's growing influence in the global AI landscape.

Technical Specifications and Innovation

The model uses a Mixture-of-Experts (MoE) architecture with 21 billion total parameters, of which only about 3 billion are activated per token. This sparse activation significantly reduces the compute needed per token while maintaining output quality. The model also supports a 128K-token context window, which makes it particularly effective for long, complex tasks such as logical reasoning and academic analysis.
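To make the idea of sparse activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration only, not ERNIE's actual implementation: the expert count (`NUM_EXPERTS = 8`), the value of `TOP_K`, the toy experts, and the gate scores are all hypothetical, and normalizing the softmax over only the selected experts is just one common variant. Only the 3B and 21B figures come from the article.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])  # renormalize over the chosen experts
    return list(zip(top, weights))

def moe_forward(x, experts, gate_logits, k):
    """Run only the k selected experts and mix their outputs by gate weight."""
    routes = top_k_route(gate_logits, k)
    out = [0.0] * len(x)
    for idx, w in routes:
        y = experts[idx](x)  # the unselected experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, [idx for idx, _ in routes]

# Hypothetical toy setup: 8 experts, each scaling its input by a different factor.
NUM_EXPERTS = 8
TOP_K = 2
experts = [lambda x, s=s: [s * v for v in x] for s in range(1, NUM_EXPERTS + 1)]

# Hypothetical gate scores for one token; a real router computes these from the token.
gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
output, used = moe_forward([1.0, 2.0], experts, gate_logits, TOP_K)

# Share of parameters touched per token, using the figures quoted in the article.
active_fraction = 3e9 / 21e9

print("experts used:", used)
print("active parameter share: {:.1%}".format(active_fraction))
```

With the figures quoted above, roughly one seventh of the parameters take part in each token's forward pass, which is where the compute savings come from.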

Unlike most competitors, which rely on PyTorch, Baidu developed ERNIE-4.5 using its in-house PaddlePaddle deep learning framework. Baidu credits this independent framework with better multimodal task compatibility and hardware optimization, and it places Baidu alongside Google as one of the few companies training large models on a self-developed framework.

Performance Benchmarks and Capabilities

Benchmark tests reveal that ERNIE-4.5 performs comparably to industry leaders like Gemini 2.5 Pro and GPT-5 in various domains including:

- Logical reasoning

- Mathematical problem-solving

- Scientific analysis

- Coding tasks

- Text generation

The model demonstrates remarkable parameter efficiency, outperforming larger models like Qwen3-30B on reasoning and math benchmarks such as BBH and CMATH despite having fewer total parameters.

Additional features include:

- Efficient tool calling functionality for API integration

- Reduced hallucination in long-context processing

- Bilingual (Chinese-English) optimization for global applications

The open-source community has responded enthusiastically, with surging download numbers on Hugging Face. Developers can integrate the model using popular tools including vLLM, Transformers 4.54+, and FastDeploy.
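As a rough sketch of the Transformers route, the snippet below wraps loading and generation in a function. Several details are assumptions rather than facts from the article: the repository id `baidu/ERNIE-4.5-21B-A3B-Thinking`, the helper names `build_messages` and `generate_reply`, and the default `max_new_tokens`. It also assumes the `accelerate` package is installed (needed for `device_map="auto"`) and enough GPU memory to hold the weights.

```python
# Assumed Hugging Face repo id; verify it on the model card before use.
MODEL_ID = "baidu/ERNIE-4.5-21B-A3B-Thinking"

def build_messages(question):
    """Wrap a user question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]

def generate_reply(question, max_new_tokens=512):
    """Load the model and generate one reply (hypothetical helper, not an official API)."""
    # Imported lazily so build_messages works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",   # use the dtype stored in the checkpoint
        device_map="auto",    # spread layers over available devices (needs accelerate)
    )

    # Render the conversation with the model's own chat template and tokenize it.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Explain sparse activation in two sentences.")` downloads the weights on first use, so expect a long initial run. For serving at scale, vLLM or FastDeploy would likely be the better fit.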

Strategic Importance and Future Outlook

The Apache 2.0 licensed release significantly lowers barriers to AI adoption while strengthening Baidu's position in open-source AI development. This follows June's release of ten other models in the ERNIE 4.5 family, collectively showcasing China's advancements in MoE architecture and reasoning optimization.

The model suggests that deep reasoning does not require trillion-scale dense parameter counts. Its efficient design makes high-performance AI more accessible to resource-limited developers, accelerating practical applications beyond research labs.

Key Points:

- Top-ranked performance: Leads Hugging Face text generation category

- Efficient architecture: MoE design activates only 3B of 21B parameters per token

- Technical independence: Developed using Baidu's PaddlePaddle framework

- Practical applications: Excels in reasoning, math, coding with reduced hallucinations

- Open ecosystem: Apache 2.0 license promotes commercial use and innovation