Ant Group's LingBot-VLA Brings Human-Like Precision to Robot Arms

Ant Group's Robot Arms Get an AI Brain Upgrade

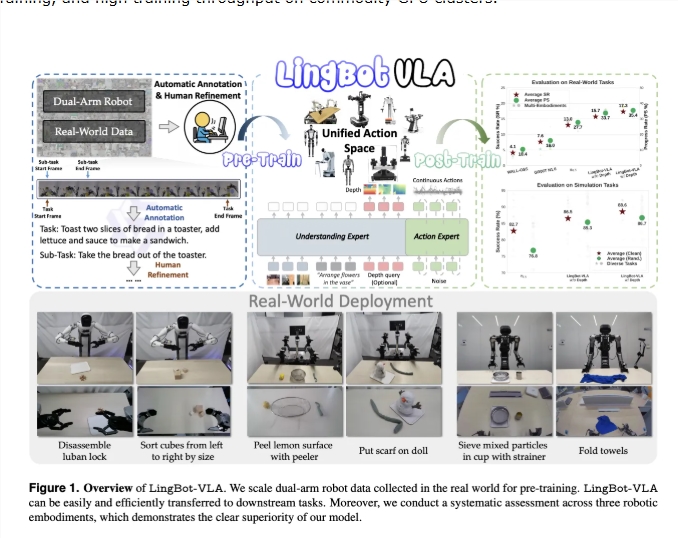

Ant Group has introduced LingBot-VLA, an artificial intelligence system designed to bring human-like precision to mechanical arms. It is positioned as more than an incremental improvement, with potential impact on factories, warehouses, and any space where robots need to handle objects with care.

Teaching Robots the Art of Touch

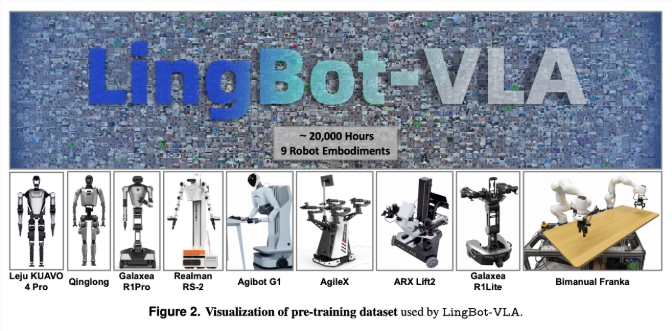

The secret sauce? Massive real-world training. Researchers collected approximately 20,000 hours of teleoperation data across nine different dual-arm robots, equivalent to someone practicing non-stop for over two years. These weren't just simple movements, either; the dataset captures the nuanced sequences needed for complex tasks.
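The "over two years" comparison is easy to check. A minimal sketch of the arithmetic follows; the 8-hour-day variant is an added illustration, not a figure from the article, and the helper name is made up for this example.

```python
HOURS_COLLECTED = 20_000  # approximate figure quoted in the article


def hours_to_years(hours, hours_per_day=24.0, days_per_year=365.0):
    """Convert a number of hours into years at a given daily pace."""
    return hours / (hours_per_day * days_per_year)


# Non-stop practice, 24 hours a day (the article's comparison).
nonstop_years = hours_to_years(HOURS_COLLECTED)

# Assumption for illustration only: 8 hours a day, every day of the year.
workday_years = hours_to_years(HOURS_COLLECTED, hours_per_day=8.0)

print(f"non-stop: {nonstop_years:.2f} years")
print(f"8 h/day:  {workday_years:.2f} years")
```

At a round-the-clock pace the figure comes to a little under 2.3 years, which matches the article's "over two years".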

"Imagine trying to teach someone ballet through YouTube videos," explains Dr. Li Wei, lead researcher on the project. "That's essentially what we've done with robots - but instead of dance steps, we're teaching them how two arms can work in perfect harmony."

How It Works: Seeing, Understanding, Acting

LingBot-VLA combines three crucial capabilities:

- Visual perception through Qwen2.5-VL, which processes multiple camera views simultaneously

- Language understanding to interpret instructions like "gently place the cube on top"

- Action prediction using conditional flow matching for smooth, continuous movements

The system even compensates for missing depth sensor data thanks to its built-in spatial reasoning module. Think of it as giving robots an innate sense of how far away objects are without constantly measuring.
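To give a feel for the action-prediction step, here is a toy sketch of conditional flow matching in plain Python. It is not LingBot-VLA's implementation: the straight-line path between noise and action is one common choice and is assumed here, every function name is hypothetical, and `toy_velocity` stands in for the learned network by using a known goal action. A real model would instead predict the velocity from camera images and the language instruction.

```python
import random


def interpolate(x0, x1, t):
    """Point on the straight path from noise x0 (t=0) to action x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    """Regression target for the straight path: d/dt x_t = x1 - x0."""
    return [b - a for a, b in zip(x0, x1)]


def cfm_loss(predicted, target):
    """Mean squared error between predicted and target velocities."""
    return sum((p - q) ** 2 for p, q in zip(predicted, target)) / len(target)


def toy_velocity(x, t, goal):
    """Stand-in for the learned network: the exact field for a single known goal."""
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, goal)]


def sample_action(goal, steps=10, seed=0):
    """Euler-integrate dx/dt = v(x, t) from Gaussian noise at t=0 up to t=1."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in goal]
    dt = 1.0 / steps
    for k in range(steps):
        v = toy_velocity(x, k * dt, goal)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


goal = [0.25, -0.10, 0.40]   # made-up 3-D action, e.g. an end-effector offset
noise = [1.0, 0.5, -0.3]     # made-up noise sample
t = 0.3

# Training view: compare the model's velocity at x_t with the target velocity.
x_t = interpolate(noise, goal, t)
loss = cfm_loss(toy_velocity(x_t, t, goal), target_velocity(noise, goal))

# Inference view: start from noise and follow the velocity field to an action.
action = sample_action(goal)
print("loss:", loss)
print("action:", action)
```

Because the stand-in field is exact, the loss is essentially zero and the integrated sample lands on the goal. The appeal for robotics is that the output is a continuous vector reached by following a smooth velocity field, rather than a sequence of discrete tokens.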

Real-World Performance That Surprises Experts

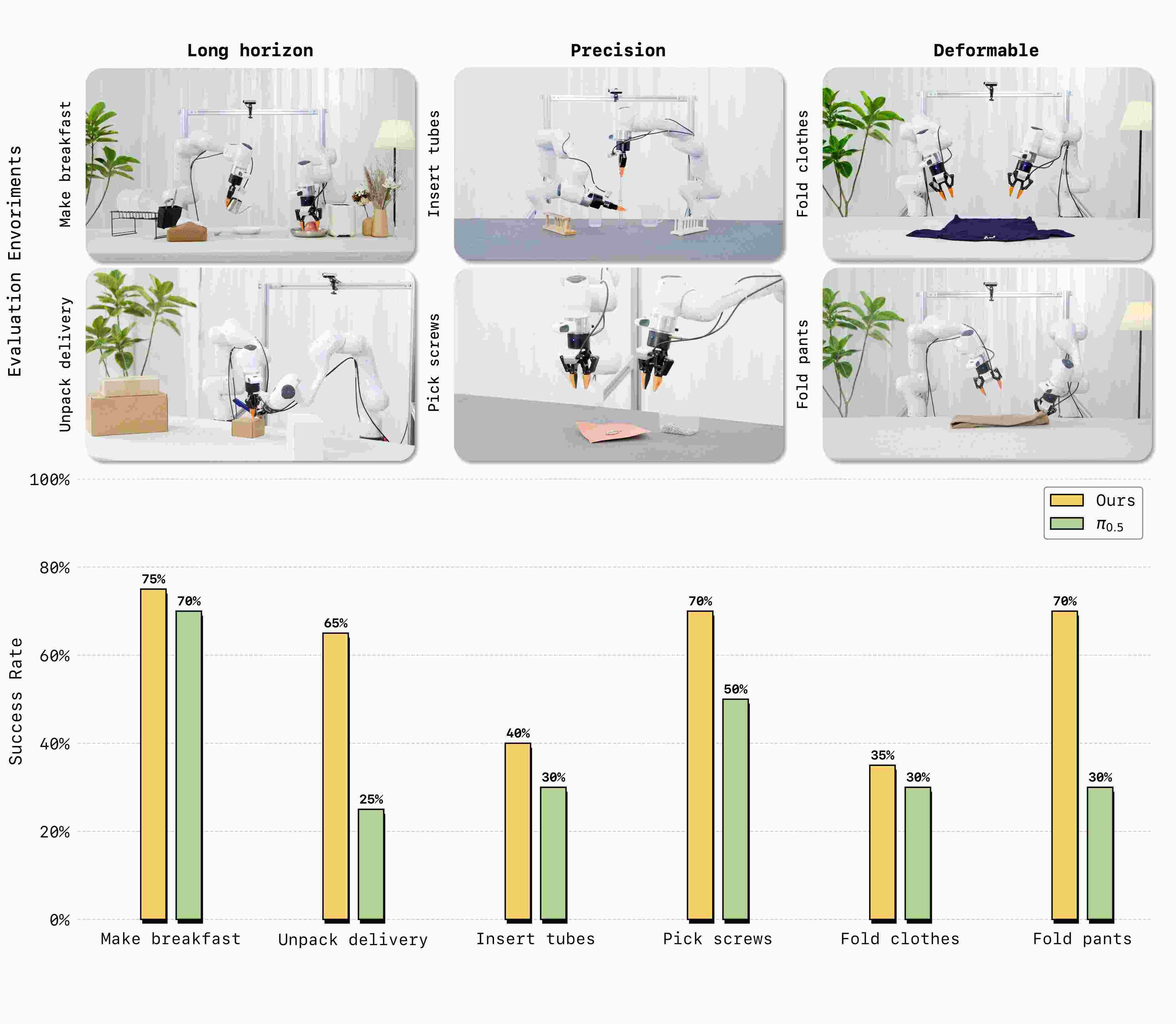

In benchmark tests involving 100 challenging tasks:

- Achieved a 17.3% success rate (reported as significantly higher than competing models)

- Adapted to new robots with just 80 demonstrations

- Showed particular skill in delicate operations like inserting pegs and folding cloth

The implications are substantial for industries relying on precise automation. Where current systems often struggle with unpredictable real-world conditions, LingBot-VLA demonstrates unusual adaptability.

Opening the Floodgates for Robotics Research

In a move that could accelerate innovation across the field, Ant Group is releasing the system openly:

- Full training toolkit now open source

- Optimized for GPU clusters (1.5-2.8x faster training than alternatives)

- Complete model weights available to researchers worldwide

This democratization of advanced robotics AI might lower barriers enough to bring a surge in practical applications, from assisted living facilities to disaster response robots.
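For a sense of scale on the training speedup, the sketch below converts the quoted 1.5-2.8x range into wall-clock time. It assumes "faster" means a ratio of training time on the same hardware, and the 100 GPU-hour baseline is a made-up number chosen only to keep the arithmetic readable.

```python
def time_after_speedup(baseline_hours, speedup):
    """Training time remaining once a job runs `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


BASELINE_HOURS = 100.0  # hypothetical baseline, not a figure from the article

for speedup in (1.5, 2.8):  # the range quoted in the article
    hours = time_after_speedup(BASELINE_HOURS, speedup)
    saved = 100.0 * (1.0 - 1.0 / speedup)
    print(f"{speedup}x faster: {hours:.1f} h ({saved:.0f}% less time)")
```

Under those assumptions, a job that would otherwise take 100 hours finishes in roughly 36 to 67 hours.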

Key Points:

- Dual-arm coordination: Enables complex manipulation tasks beyond single-arm capabilities

- Rapid adaptation: Adapts to new robots quickly with minimal demonstration data

- Depth perception: Maintains spatial awareness even when depth sensor data is missing

- Open ecosystem: Public release could spur widespread adoption and innovation