Xiaohongshu Unveils Open-Source AI Model dots.llm1 with Record 11.2T Training Tokens

Chinese social media platform Xiaohongshu has entered the AI race with the release of dots.llm1, its first open-source large language model. The 142-billion-parameter model uses a Mixture-of-Experts (MoE) architecture and activates only 14 billion of those parameters during inference, which sharply reduces computational cost.
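
The efficiency claim can be checked with quick arithmetic. The sketch below uses only the two parameter counts given above; the "2 FLOPs per active parameter per token" figure is a common rule of thumb for a forward pass, assumed here for illustration rather than taken from Xiaohongshu.

```python
# Parameter counts as stated in the article.
TOTAL_PARAMS = 142e9
ACTIVE_PARAMS = 14e9

# Share of the model that actually runs for a given token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Rule-of-thumb forward-pass cost (assumption): about 2 FLOPs per active parameter per token.
approx_flops_active = 2 * ACTIVE_PARAMS
approx_flops_dense = 2 * TOTAL_PARAMS
savings_ratio = approx_flops_dense / approx_flops_active

print(f"Active share of parameters: {active_fraction:.1%}")
print(f"Estimated compute vs. an equally large dense model: {savings_ratio:.1f}x lower")
```

Under this estimate, each token touches roughly a tenth of the weights that a dense model of the same total size would use.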

What sets dots.llm1 apart is its training dataset: 11.2 trillion non-synthetic tokens, filtered through a three-stage quality pipeline. That data advantage shows in the results. The model averages 91.3 on Chinese-language evaluations, ahead of established competitors such as DeepSeek's V2/V3 and Alibaba's Qwen2.5 series.

The technical architecture reveals why this model delivers both power and efficiency:

- Transformer-based decoder with MoE replacing traditional feedforward networks

- 128 routed experts + 2 shared experts per layer, each using SwiGLU activation

- Dynamic selection of 6 of the routed experts per token, with the 2 shared experts always active

- Improved RMSNorm for training stability

- Load-balancing strategies that keep individual experts from being overused
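
The layer structure in the list above can be sketched in a few dozen lines of plain Python. This is a toy illustration, not Xiaohongshu's implementation: the expert counts come from the article, while the tiny dimensions, the random weights, the softmax over the selected experts' scores, the gain-free RMSNorm, and every function and class name are assumptions made for the example.

```python
import math
import random

NUM_ROUTED = 128   # routed experts per layer (from the article)
NUM_SHARED = 2     # shared experts (from the article)
TOP_K = 6          # routed experts selected per token (from the article)
D_MODEL, D_HIDDEN = 8, 16   # toy sizes, assumed for illustration only


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def rms_norm(x, eps=1e-6):
    """RMSNorm without a learned gain: x / sqrt(mean(x^2) + eps)."""
    scale = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / scale for v in x]


def silu(v):
    return v / (1.0 + math.exp(-v))


class SwiGLUExpert:
    """Feed-forward expert computing W_down(silu(W_gate x) * (W_up x))."""

    def __init__(self, rng):
        self.w_gate = rand_matrix(D_HIDDEN, D_MODEL, rng)
        self.w_up = rand_matrix(D_HIDDEN, D_MODEL, rng)
        self.w_down = rand_matrix(D_MODEL, D_HIDDEN, rng)

    def __call__(self, x):
        gate = [silu(g) for g in matvec(self.w_gate, x)]
        up = matvec(self.w_up, x)
        return matvec(self.w_down, [g * u for g, u in zip(gate, up)])


def select_experts(router_logits, k=TOP_K):
    """Pick the k highest-scoring routed experts and softmax their scores."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    peak = router_logits[top[0]]
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]


def moe_forward(x, router, routed, shared):
    """Normalize, route to TOP_K experts, add shared experts, add residual."""
    h = rms_norm(x)
    chosen, weights = select_experts(matvec(router, h))
    out = [0.0] * D_MODEL
    for i, w in zip(chosen, weights):       # only the selected experts run
        for d, v in enumerate(routed[i](h)):
            out[d] += w * v
    for expert in shared:                   # shared experts run for every token
        for d, v in enumerate(expert(h)):
            out[d] += v
    return [xi + oi for xi, oi in zip(x, out)], chosen


rng = random.Random(0)
router = rand_matrix(NUM_ROUTED, D_MODEL, rng)
routed = [SwiGLUExpert(rng) for _ in range(NUM_ROUTED)]
shared = [SwiGLUExpert(rng) for _ in range(NUM_SHARED)]
token = [rng.gauss(0.0, 1.0) for _ in range(D_MODEL)]
output, chosen = moe_forward(token, router, routed, shared)
print(len(chosen), "routed experts used, plus", NUM_SHARED, "shared")
```

Only 8 of the 130 experts do any work for a given token, which is where the gap between total and active parameter counts comes from. Load balancing is left out of the sketch because the article does not say which strategy the model uses.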

Training used the AdamW optimizer, whose decoupled weight decay helps limit overfitting, along with measures to keep gradient explosions in check. Xiaohongshu has also taken the unusual step of open-sourcing intermediate checkpoints at every 1-trillion-token milestone, giving researchers a rare view into how a large model evolves during training.
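
For readers unfamiliar with the optimizer, here is a minimal sketch of a single AdamW update in plain Python. It pairs the update with global-norm gradient clipping, a common guard against gradient explosions; the article does not say whether dots.llm1 uses clipping, and all hyperparameter values and function names below are assumptions chosen for illustration.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their overall L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm > max_norm:
        grads = [g * max_norm / norm for g in grads]
    return grads


def adamw_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.95,
               eps=1e-8, weight_decay=0.1, max_norm=1.0):
    """One AdamW update. t is the 1-based step count. Returns (params, m, v)."""
    grads = clip_by_global_norm(grads, max_norm)
    new_params, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(params, grads, m, v):
        mi = beta1 * mi + (1 - beta1) * g          # first-moment estimate
        vi = beta2 * vi + (1 - beta2) * g * g      # second-moment estimate
        m_hat = mi / (1 - beta1 ** t)              # bias correction
        v_hat = vi / (1 - beta2 ** t)
        # Weight decay is applied to the parameter directly (decoupled),
        # not folded into the gradient as in L2-regularized Adam.
        p = p - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * p)
        new_params.append(p)
        new_m.append(mi)
        new_v.append(vi)
    return new_params, new_m, new_v


# Usage: an oversized gradient is clipped before it reaches the update.
params, m, v = adamw_step([1.0], [100.0], [0.0], [0.0], t=1)
print(params)
```

In the usage line the raw gradient of 100 is clipped to norm 1 before the update, so the parameter moves by about one learning-rate step plus the weight-decay term instead of jumping.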

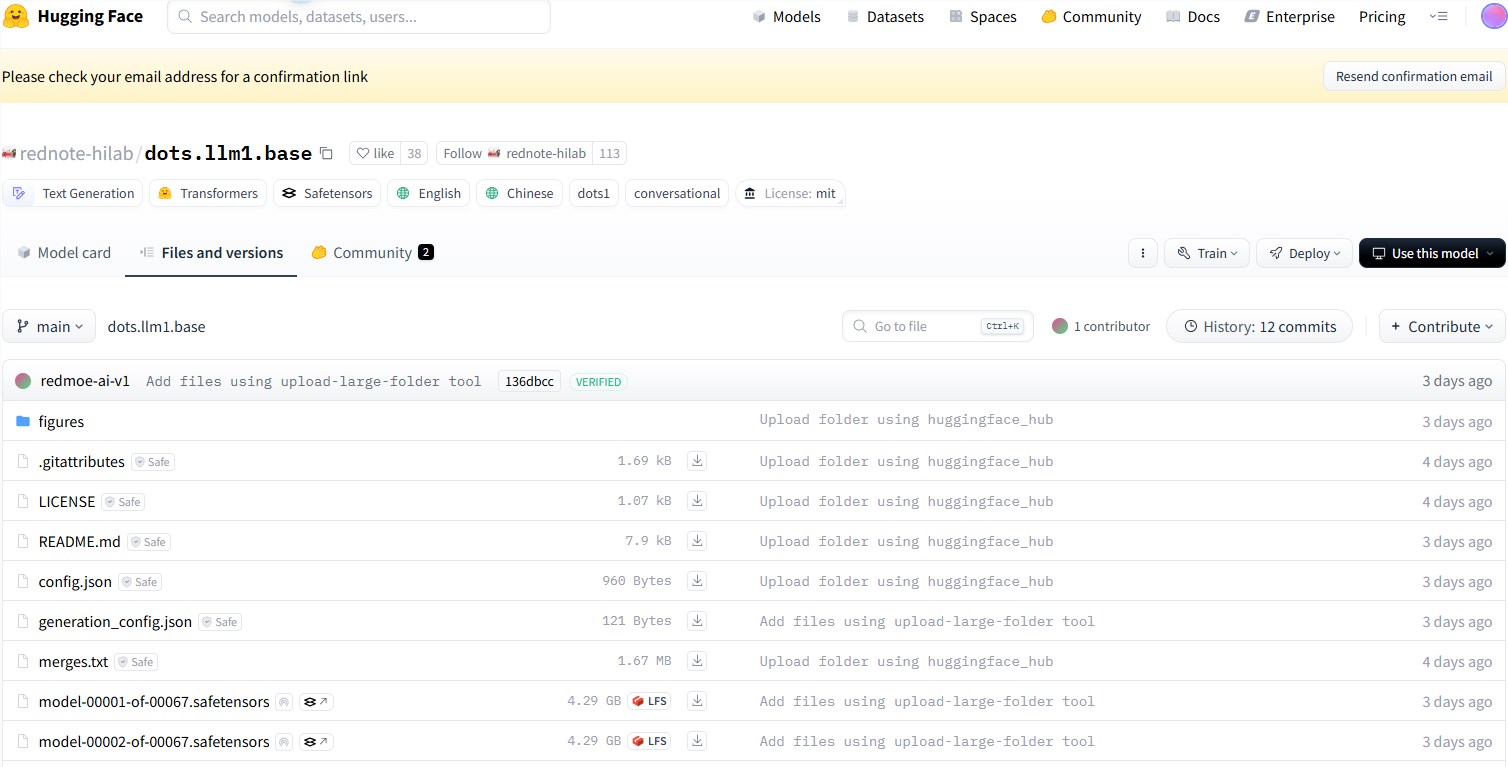

Available on Hugging Face, this release marks a significant milestone for Chinese AI development. Could this be the beginning of more open-source contributions from major tech players in the region?

Key Points

- Xiaohongshu's dots.llm1 is a 142B-parameter MoE model that activates only 14B parameters during inference

- Trained on a record 11.2 trillion non-synthetic tokens filtered through a three-stage quality pipeline

- Outperforms competitors on Chinese-language tasks with an average score of 91.3

- Innovative expert routing and load balancing ensure computational efficiency

- Full model and intermediate training checkpoints released as open source