Xiaohongshu's New AI Tool Lets You Compose Images Like a Pro

Xiaohongshu and Fudan Break New Ground in AI Image Generation

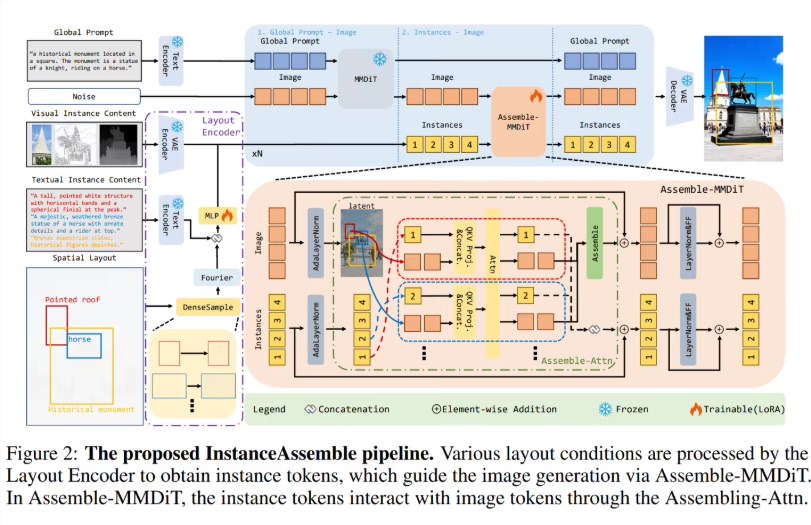

The collaboration between social media platform Xiaohongshu and Fudan University has produced InstanceAssemble, a layout-control technique that lets users specify where objects appear in AI-generated images. The work was accepted at NeurIPS 2025 and targets a long-standing complaint among creators: AI's tendency to misplace objects or misread the spatial relationships between them.

Solving the Placement Puzzle

While current text-to-image systems can produce stunning visuals, they often stumble when asked to position objects precisely. Ask for a cat sitting on a specific chair, and the model might place it beside the chair instead or, worse, merge the two into a feline-chair hybrid. InstanceAssemble addresses this with a mechanism it calls "instance assembly attention."
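The article does not describe how instance assembly attention works internally. As a rough illustration of the general idea behind box-conditioned attention (a common building block in layout-to-image methods, not InstanceAssemble's published implementation), the sketch below marks which latent image patches fall inside an instance's bounding box and then restricts an attention distribution to those patches. The function names, the row-major patch grid, and the normalized (x0, y0, x1, y1) box convention are all assumptions made for illustration.

```python
import math


def box_to_patch_mask(box, grid_w, grid_h):
    """Hypothetical helper: return one boolean per latent patch (row-major order),
    True where the patch center lies inside the normalized box (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    mask = []
    for row in range(grid_h):
        for col in range(grid_w):
            # Patch centers in normalized [0, 1] image coordinates.
            cx = (col + 0.5) / grid_w
            cy = (row + 0.5) / grid_h
            mask.append(x0 <= cx <= x1 and y0 <= cy <= y1)
    return mask


def masked_softmax(scores, mask):
    """Softmax over attention scores in which masked-out patches get zero weight,
    so an instance's query can only attend to patches inside its own box."""
    kept = [s for s, m in zip(scores, mask) if m]
    if not kept:
        return [0.0] * len(scores)
    peak = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]


# Usage: a 4x4 patch grid and an instance occupying the left half of the image.
mask = box_to_patch_mask((0.0, 0.0, 0.5, 1.0), grid_w=4, grid_h=4)
weights = masked_softmax([0.0] * 16, mask)
```

With uniform scores, all of the attention weight is spread evenly over the eight patches inside the box, and patches outside it receive none. Real systems apply this kind of masking to learned query/key scores inside a transformer; the hard mask here is the simplest possible variant.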

"It's like giving the AI spatial awareness," explains Dr. Li Wen from Fudan's Computer Science department. "Users simply define bounding boxes and descriptions for each element, and the system generates content exactly where it should be."
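The quote describes the input as a set of elements, each with a bounding box and a text description. The article does not specify InstanceAssemble's actual input format, so the following is a hypothetical layout specification in Python: the `Instance` class, its field names, the overall-caption key, and the top-left-origin normalized coordinates are assumptions chosen only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """Hypothetical layout element: a text description plus a normalized box."""
    description: str
    box: tuple  # (x0, y0, x1, y1) in [0, 1], origin at top-left (assumed convention)

    def __post_init__(self):
        x0, y0, x1, y1 = self.box
        # Reject boxes that are empty, inverted, or fall outside the image.
        if not (0.0 <= x0 < x1 <= 1.0 and 0.0 <= y0 < y1 <= 1.0):
            raise ValueError(f"invalid box for {self.description!r}: {self.box}")

    def to_pixels(self, width, height):
        """Convert the normalized box to integer pixel coordinates."""
        x0, y0, x1, y1 = self.box
        return (round(x0 * width), round(y0 * height),
                round(x1 * width), round(y1 * height))


# The "cat on a chair" example expressed as a layout (hypothetical structure).
layout = {
    "caption": "a cat sitting on a wooden chair in a sunlit room",
    "instances": [
        Instance("a wooden chair", (0.30, 0.40, 0.70, 0.95)),
        Instance("a gray tabby cat", (0.35, 0.30, 0.65, 0.65)),
    ],
}

for inst in layout["instances"]:
    print(inst.description, inst.to_pixels(1024, 1024))
```

Storing boxes in normalized coordinates keeps the layout independent of output resolution; it is converted to pixels only when a specific image size is known.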

Lightweight Yet Powerful

The approach is also efficient. Rather than retraining the full model, InstanceAssemble adds only a small fraction of extra parameters:

- 3.46% more parameters for Stable Diffusion 3 Medium

- Just 0.84% more for FLUX.1 models

This lightweight approach makes adoption practical for developers working with existing systems.
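Percentages are easier to judge as absolute numbers. The article does not state which base sizes the percentages refer to; the sketch below assumes the publicly reported sizes of roughly 2 billion parameters for Stable Diffusion 3 Medium and 12 billion for FLUX.1, and the `added_params` helper is purely illustrative.

```python
def added_params(base_params, overhead_percent):
    """Extra parameters implied by an overhead given as a percentage of the base model."""
    return base_params * overhead_percent / 100


# Assumed base sizes (publicly reported figures, not stated in the article).
BASES = {
    "Stable Diffusion 3 Medium": (2e9, 3.46),
    "FLUX.1": (12e9, 0.84),
}

for name, (base, pct) in BASES.items():
    extra = added_params(base, pct)
    print(f"{name}: ~{extra / 1e6:.1f}M extra parameters ({pct}% of {base / 1e9:.0f}B)")
```

Under these assumptions, the smaller percentage for FLUX.1 still corresponds to more absolute parameters (roughly 101M versus 69M), because the base model is about six times larger. Either way, the addition is small relative to the model being adapted.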

Benchmarking Progress

Beyond the method itself, the team released DenseLayout, a benchmark dataset containing 90,000 instances, along with new evaluation metrics intended to standardize how layout-controlled image generation is measured.
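The article does not say what the new metrics compute. For context, a widely used way to check whether an object landed where it was requested is intersection-over-union (IoU) between the requested box and a box detected in the generated image. The sketch below shows that generic check; it is not the DenseLayout metric. The `placement_accuracy` function, its 0.5 threshold, and the assumption that detected boxes come from a separate object detector run on the output image are all illustrative choices.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def placement_accuracy(requested, detected, threshold=0.5):
    """Hypothetical score: fraction of requested instances whose detected box
    overlaps the requested box with IoU >= threshold. `detected` holds one box
    per requested instance, or None if the detector found nothing."""
    if not requested:
        return 0.0
    hits = sum(1 for r, d in zip(requested, detected)
               if d is not None and iou(r, d) >= threshold)
    return hits / len(requested)


# Usage: the first object is placed exactly; the second was never detected.
score = placement_accuracy([(0, 0, 1, 1), (0, 0, 1, 1)], [(0, 0, 1, 1), None])
```

A benchmark with many densely packed instances makes a check like this more demanding, since small or overlapping objects are where placement errors tend to show up.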

With all code and pretrained models available on GitHub, InstanceAssemble could benefit fields from graphic design to advertising by giving creators much finer control over the composition of AI-generated images.

Key Points:

- 🎯 Precise placement through the new "instance assembly attention" mechanism

- ⚡ Minimal overhead: adds less than 4% extra parameters to existing models

- 🔓 Fully open-source, including pretrained models and benchmarking tools