Waymo's AI-Powered Taxis Get a Gemini Boost! 🚗💥

Move over, human drivers: Waymo’s back in the driver’s seat, and this time it’s bringing some serious AI firepower. The Alphabet-owned autonomous driving company just unveiled research on using Google’s Gemini model to train its self-driving taxis. Fasten your seatbelts, because EMMA (yes, that’s the new model’s name) is here to navigate the future of driving.

The Gemini Revolution

Waymo has always had a bit of a secret weapon in its back pocket: Google DeepMind. But now things are getting real spicy with Gemini, Google’s multimodal large language model (MLLM). Instead of cranking out chatbots or dabbling in image generation, Waymo wants to use this tech to train its futuristic taxis. The goal? A smarter, more adaptable autonomous vehicle that can predict where it should drive next, no crystal ball required.

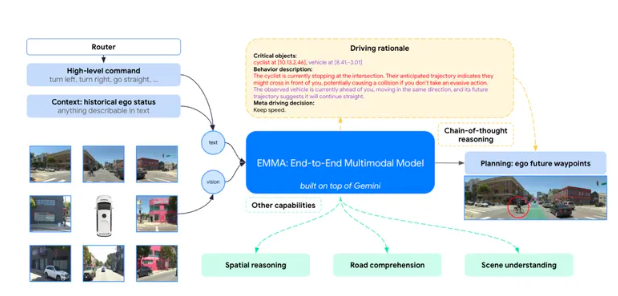

Meet EMMA: The End-to-End Multimodal Model

Introducing EMMA, Waymo’s shiny new toy in the autonomous arena. This “end-to-end multimodal model” takes raw camera images of the road and turns them directly into future driving trajectories, without a chain of separately engineered components in between. Translation? Waymo is betting this approach can make its self-driving vehicles better at dodging obstacles, navigating complex environments, and making decisions that just might put some human drivers to shame.
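To make the “sensor data in, trajectory out” idea a bit more concrete, here is a minimal Python sketch of what an end-to-end interface could look like. It assumes, purely for illustration, that the model writes its planned path as text waypoints; `fake_model`, `Waypoint`, `plan_end_to_end`, and the `(x, y)` text format are hypothetical names, not Waymo’s code or API.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical sketch: the "(x, y) (x, y) ..." text format and the fixed
# model answer below are illustrative assumptions, not Waymo's real format.


@dataclass
class Waypoint:
    x: float  # metres ahead of the car (assumed convention)
    y: float  # metres to the left of the car (assumed convention)


def fake_model(camera_frames: List[bytes], instruction: str) -> str:
    """Stand-in for a multimodal model call; ignores its inputs and
    returns a fixed trajectory written as plain text."""
    return "(1.0, 0.0) (2.1, 0.2) (3.3, 0.5)"


# Matches "(number, number)" pairs, allowing negative and decimal values.
_PAIR = re.compile(r"\(\s*(-?\d+(?:\.\d+)?)\s*,\s*(-?\d+(?:\.\d+)?)\s*\)")


def parse_trajectory(text: str) -> List[Waypoint]:
    """Convert the model's text answer back into numeric waypoints."""
    return [Waypoint(float(x), float(y)) for x, y in _PAIR.findall(text)]


def plan_end_to_end(camera_frames: List[bytes], instruction: str) -> List[Waypoint]:
    # A single model call goes straight from camera input to a trajectory;
    # there are no separate perception, mapping, or prediction stages.
    return parse_trajectory(fake_model(camera_frames, instruction))
```

Calling `plan_end_to_end([b"frame0"], "turn left at the next light")` returns three waypoints, starting with `Waypoint(x=1.0, y=0.0)`. In this sketch, the only hand-written code outside the model is the thin parser that turns its text answer back into numbers.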

It’s Not Just About Avoiding Obstacles

But here’s where things get even juicier: Waymo’s not just using Gemini to avoid the odd pothole or pedestrian. Nope, it wants to treat MLLMs as “first-class citizens” in autonomous driving. That means the future of self-driving cars could look a lot more like a sci-fi flick, with vehicles that reason through what’s happening around them rather than just following pre-programmed rules.

Traditional Systems? Yeah, They’re Outdated

Let’s get real for a second. Until now, most autonomous driving systems have been built from separate, hand-engineered parts. Sure, these traditional modular setups have made real progress (you know, where each function like perception, mapping, prediction, and planning gets its own little piece of the pie). But when things get complex, like driving through a construction zone or encountering a stray dog, these systems can hit a wall, because each module only handles the kinds of situations its designers anticipated.
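Here is a deliberately oversimplified Python sketch of a modular pipeline, showing how a gap in one stage can quietly affect everything after it. The object classes, speed limit, function names, and rules are all hypothetical; real driving stacks are far more sophisticated than this.

```python
from typing import Dict, List

# Toy modular pipeline. Object classes, the speed limit, and every rule here
# are hypothetical; real driving stacks are far more sophisticated.

KNOWN_CLASSES = {"car", "pedestrian", "cyclist"}


def perceive(raw_detections: List[str]) -> List[str]:
    # Perception: keep only the object types this detector was built to label.
    return [d for d in raw_detections if d in KNOWN_CLASSES]


def lookup_map(lane: str) -> Dict[str, float]:
    # Mapping: read the lane's speed limit (m/s) from a hard-coded "map".
    speed_limits = {"lane_1": 13.0}
    return {"speed_limit_mps": speed_limits.get(lane, 10.0)}


def predict(objects: List[str]) -> List[str]:
    # Prediction: assume every recognised object might cross our path.
    return [f"{obj} may cross" for obj in objects]


def plan_modular(world: Dict[str, float], predictions: List[str]) -> str:
    # Planning: one fixed rule, slow down if anything might cross.
    if predictions:
        return "slow down"
    return f"cruise at {world['speed_limit_mps']} m/s"


def drive_modular(raw_detections: List[str], lane: str) -> str:
    # Each stage only sees what the previous stage chose to pass along.
    objects = perceive(raw_detections)
    return plan_modular(lookup_map(lane), predict(objects))
```

With this toy setup, `drive_modular(["pedestrian"], "lane_1")` returns `"slow down"`, but `drive_modular(["dog"], "lane_1")` returns `"cruise at 13.0 m/s"`: the dog is not a class the perception stage knows about, so the planner never hears about it.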

That’s where Gemini and EMMA come in. Gemini brings what Waymo calls “world knowledge”, and EMMA can use “chain-of-thought reasoning”, spelling out its reasoning step by step before settling on a driving decision. In other words, it can work through a situation a bit like you would, minus the road rage.
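For the curious, here is a rough Python sketch of the general chain-of-thought prompting idea: ask a model to write out intermediate reasoning, then pull out only its final answer. The step wording, `build_prompt`, `extract_answer`, and the `Trajectory:` marker are all hypothetical. This illustrates the technique in general, not Waymo’s actual prompt or setup.

```python
from typing import Optional

# Hypothetical chain-of-thought prompt. The step wording and the
# "Trajectory:" marker are illustrative assumptions, not Waymo's prompt.

REASONING_STEPS = [
    "Describe the scene.",
    "List the objects that matter for the next few seconds.",
    "Say how each of those objects is likely to move.",
    "Pick a high-level action (for example: yield, keep lane, stop).",
]

ANSWER_MARKER = "Trajectory:"


def build_prompt(instruction: str) -> str:
    """Ask the model to reason step by step before giving its answer."""
    lines = [f"Navigation instruction: {instruction}", "Think step by step:"]
    lines += [f"{i}. {step}" for i, step in enumerate(REASONING_STEPS, start=1)]
    lines.append(f"Finally, write '{ANSWER_MARKER}' followed by the waypoints.")
    return "\n".join(lines)


def extract_answer(model_output: str) -> Optional[str]:
    """Return the text after the last answer marker, or None if missing."""
    idx = model_output.rfind(ANSWER_MARKER)
    if idx == -1:
        return None
    return model_output[idx + len(ANSWER_MARKER):].strip()
```

Given a reply such as `"1. Cones close the right lane.\nTrajectory: (1.0, 0.0)"`, `extract_answer` returns `"(1.0, 0.0)"`. Keeping the reasoning separate from a clearly marked final answer means people can read the explanation while code still gets one unambiguous string to parse.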

EMMA’s Real-World Smarts (And a Few Quirks)

So what can EMMA do? The pitch: navigating complex environments like a boss. Whether it’s a construction site, a family of ducks crossing the road, or a random detour, EMMA is designed to reason its way through. It processes camera data to figure out the safest and smartest driving path at any given moment. It’s like having a hyper-aware co-pilot, except this one doesn’t need caffeine to stay alert.

But wait, there’s a catch: EMMA’s not perfect (yet). Right now, it can’t take in 3D sensor data from lidar or radar, the sensors that directly measure the 3D world around the car. So while EMMA’s pretty sharp, it’s not ready to take on every challenge the road throws its way. The good news: Waymo’s on it, pushing its research forward so that EMMA can eventually handle all the data it needs to take autonomous driving to the next level.

The Road Ahead

Waymo’s work with Gemini and EMMA is promising, but it’s just the beginning. The company hopes this research will inspire others in the industry to step up and tackle the big challenges that still exist in autonomous driving. Sure, EMMA can’t do it all just yet, but progress is progress. And with companies like Waymo leading the charge, the future of driving is looking like something straight out of a tech geek’s dream.

Key Points to Remember:

- 🚗 Waymo is using Google’s Gemini model to build EMMA, an experimental end-to-end model for autonomous driving.

- 🌍 EMMA processes sensor data to help self-driving taxis avoid obstacles and make smarter decisions.

- 🔍 There’s still work to be done — EMMA can’t process all types of sensor data yet, but Waymo’s on the case.

Summary

Waymo is harnessing the power of Google’s Gemini AI model to enhance autonomous driving technology.

The new EMMA model processes sensor data to help self-driving taxis make smarter decisions and avoid obstacles.

Waymo aims to treat MLLMs like Gemini as central to the future of autonomous driving, going beyond traditional systems.

While promising, EMMA still has limitations, particularly in handling 3D sensor data from lidar or radar.

Further research is needed to fully unlock the potential of this tech and drive the autonomous vehicle industry forward.