Tongyi Qianwen Unveils Qwen VLo Multimodal AI Model

Tongyi Qianwen Launches Qwen VLo Multimodal AI Model

Tongyi Qianwen has officially released Qwen VLo, a groundbreaking multimodal large language model that marks significant progress in visual content understanding and generation. This new model builds upon the strengths of the previous Qwen-VL series while introducing innovative features for creative applications.

Enhanced Visual Understanding and Generation

The model's standout feature is its progressive generation method, which constructs images systematically from left to right and top to bottom. This approach allows for continuous optimization during the creation process, ensuring harmonious and consistent final results.

Key Capabilities

- Semantic consistency: Maintains accuracy in image transformations (e.g., changing car colors while preserving original structures)

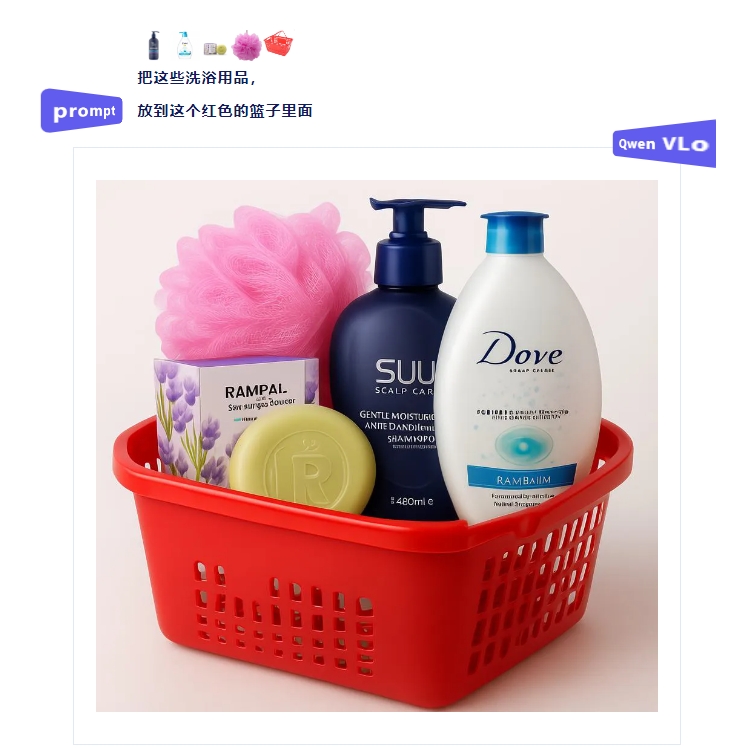

- Open instruction editing: Supports natural language commands for style changes, element additions, and background modifications

- Multilingual support: Processes instructions in Chinese, English, and other languages

- Flexible resolution: Generates images at any aspect ratio through dynamic resolution training

Practical Applications

Qwen VLo demonstrates versatile functionality including:

- Direct image generation from text (including posters)

- Background replacement and style transfers

- Multi-image processing and annotation

- Large-scale modifications based on open instructions

The model is currently accessible through the Qwen Chat platform, though developers note it remains in preview with some limitations to be addressed in future iterations.

Key Points

- Progressive generation method ensures high-quality visual output

- Superior semantic consistency compared to previous models

- Supports complex editing through natural language instructions

- Multilingual capability expands global accessibility

- Dynamic resolution accommodates diverse creative needs