Tongyi Qianwen Launches Open Source Qwen2.5-Coder

The Tongyi Qianwen (Qwen) team has officially announced the open-source release of its latest model series, Qwen2.5-Coder, with the aim of advancing open code large language models (LLMs). The team highlights three qualities of the release: strength, diversity, and practicality for real programming work.

Key Features of Qwen2.5-Coder

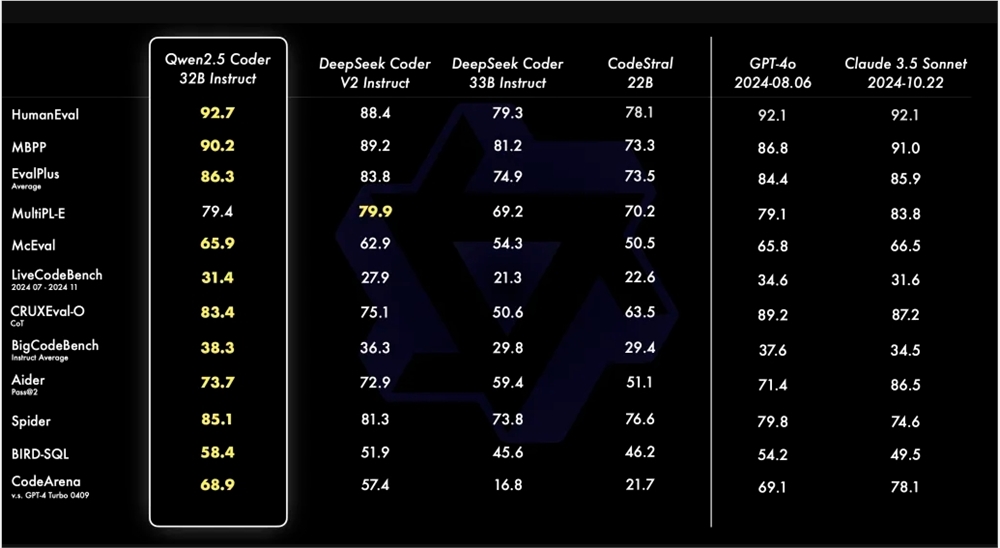

The Qwen2.5-Coder-32B-Instruct model has achieved state-of-the-art (SOTA) coding performance among open-source models, rivaling GPT-4o. It shows broad strength in code generation, code repair, and code reasoning, and reaches top scores on several code generation benchmarks. Notably, it scores 73.7 on the Aider benchmark, on par with GPT-4o.

Qwen2.5-Coder supports more than 40 programming languages and scores 65.9 on McEval, with particularly strong results in languages such as Haskell and Racket. The team attributes this to its data cleaning methods and the data mixing ratios used during pre-training. For code repair, Qwen2.5-Coder-32B-Instruct performs well across many programming languages, scoring 75.2 on the MdEval benchmark and ranking first among open-source models.

Alignment with Human Preferences

To evaluate how well Qwen2.5-Coder-32B-Instruct aligns with human preferences, the team built Code Arena, an internally annotated code preference benchmark. The results show a clear advantage for Qwen2.5-Coder-32B-Instruct in preference alignment, which makes it more useful to developers in practice.

Model Sizes and Licensing

This open-source release adds four model sizes (0.5B, 3B, 14B, and 32B), which join the previously released 1.5B and 7B models to give six sizes in total for different developer needs. Each size comes in a Base and an Instruct version: the Base models serve as a foundation that developers can fine-tune for their own applications, while the Instruct models are the officially aligned chat models. Performance increases with model size, and the team reports SOTA results at every size.

The 0.5B, 1.5B, 7B, 14B, and 32B models are licensed under Apache 2.0, while the 3B model is released under a Research Only license. By evaluating every Qwen2.5-Coder size on all benchmark datasets, the team confirmed that scaling is effective for code LLMs.
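Because the size and licensing details are easy to mix up, the following Python sketch encodes them as a small lookup. The six sizes and their licenses come from this article. The checkpoint names are an assumption: they follow the Hugging Face pattern `Qwen/Qwen2.5-Coder-<size>` for Base and `Qwen/Qwen2.5-Coder-<size>-Instruct` for Instruct, and the helpers `license_for`, `repo_id`, and `apache_checkpoints` are hypothetical names chosen for illustration.

```python
# Release matrix as described in the article: six sizes, each with Base and Instruct variants.
# Assumption: checkpoint names follow "Qwen/Qwen2.5-Coder-<size>[-Instruct]" (Hugging Face style).
SIZES = ["0.5B", "1.5B", "3B", "7B", "14B", "32B"]
RESEARCH_ONLY = {"3B"}  # per the article, only the 3B model is not under Apache 2.0


def license_for(size: str) -> str:
    """Return the license the article reports for a given model size (hypothetical helper)."""
    if size not in SIZES:
        raise ValueError(f"unknown size: {size}")
    return "Research Only" if size in RESEARCH_ONLY else "Apache 2.0"


def repo_id(size: str, instruct: bool = True) -> str:
    """Build a checkpoint name under the assumed naming pattern (hypothetical helper)."""
    if size not in SIZES:
        raise ValueError(f"unknown size: {size}")
    suffix = "-Instruct" if instruct else ""
    return f"Qwen/Qwen2.5-Coder-{size}{suffix}"


def apache_checkpoints() -> list[str]:
    """List every Base and Instruct checkpoint the article places under Apache 2.0."""
    return [
        repo_id(size, instruct)
        for size in SIZES
        if license_for(size) == "Apache 2.0"
        for instruct in (False, True)
    ]


if __name__ == "__main__":
    # Print one row per size: size, license, Instruct checkpoint name.
    for size in SIZES:
        print(f"{size:>5}  {license_for(size):<13}  {repo_id(size)}")
```

Keeping the license rule as a single exception set mirrors the article's framing: Apache 2.0 is the default, and the 3B model is the only outlier.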

Conclusion

The open-source release of Qwen2.5-Coder gives developers a powerful and versatile code model and contributes to the development and application of code LLMs. It is well placed to help developers across a wide range of coding environments.

Qwen2.5-Coder Model Links:

Key Points

- Qwen2.5-Coder-32B-Instruct matches GPT-4o in coding capabilities.

- Supports over 40 programming languages with outstanding performance in Haskell and Racket.

- Comes in six model sizes, from 0.5B to 32B, to suit different developer needs.

- Licensed under Apache 2.0 for most models, encouraging open development.