Thinking Machines Lab's Breakthrough Boosts Small Model Training Efficiency Up to 100x

Thinking Machines Lab Revolutionizes Small Model Training with On-Policy Distillation

In a significant advancement for artificial intelligence, startup Thinking Machines Lab has developed On-Policy Distillation, a training methodology that dramatically improves the efficiency of training small AI models. Early benchmarks indicate 50- to 100-fold gains in training efficiency on specialized tasks, potentially democratizing access to high-quality AI systems.

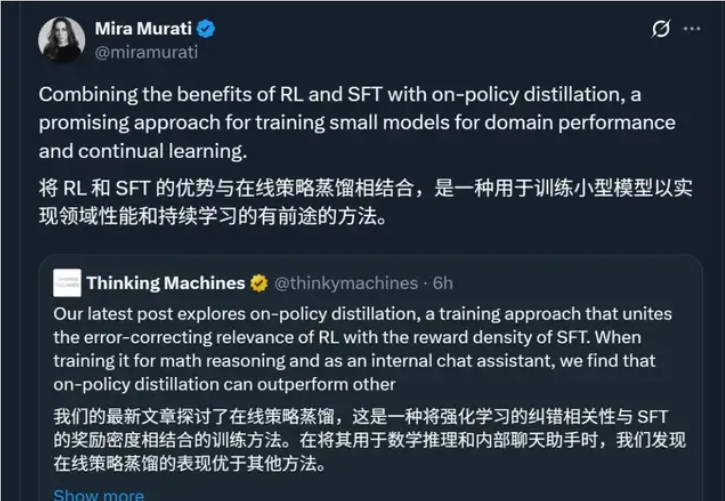

The breakthrough has garnered attention from prominent figures, including Mira Murati, the former Chief Technology Officer of OpenAI and founder of Thinking Machines Lab, who publicly promoted the research. This development comes at a crucial time as the industry shifts focus toward more efficient, specialized AI solutions.

Hybrid Approach Creates 'AI Coach' System

The innovation addresses a fundamental challenge in AI training: balancing reinforcement learning's flexibility with supervised learning's efficiency. Traditional reinforcement learning allows models to explore solutions independently but requires extensive computation. Supervised learning provides direct answers but lacks adaptability.

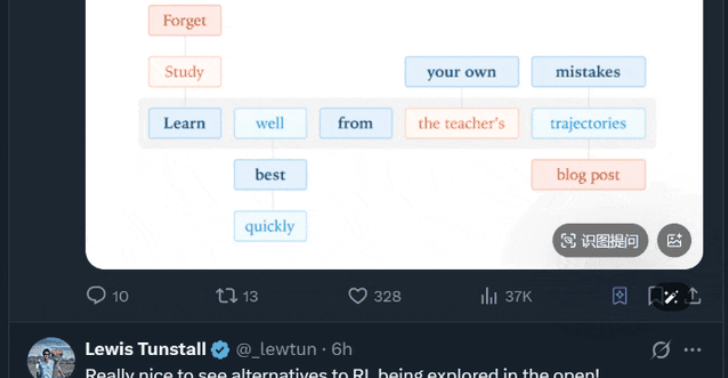

On-Policy Distillation merges these approaches by implementing what researchers describe as an "AI coach" system. A smaller "student" model generates its own responses while a more powerful "teacher" model evaluates each token the student produces. Training then minimizes the KL divergence between the student's and the teacher's next-token distributions on those student-generated sequences, so the student receives dense, step-by-step correction on the situations it actually encounters.
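The student-teacher loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Thinking Machines Lab's implementation: the toy models, the four-token vocabulary, and every function name are hypothetical, and the sketch assumes the reverse direction of the divergence, KL(student || teacher). It only computes the loss signal; a real training run would backpropagate this loss through the student's parameters.

```python
import math
import random

VOCAB_SIZE = 4  # hypothetical toy vocabulary


def softmax(logits):
    """Convert raw logits into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reverse_kl(student_probs, teacher_probs):
    """KL(student || teacher) for a single next-token distribution."""
    return sum(
        p * (math.log(p) - math.log(q))
        for p, q in zip(student_probs, teacher_probs)
        if p > 0.0
    )


def on_policy_distillation_loss(student_fn, teacher_fn, prompt, max_new_tokens, rng):
    """Sample a continuation from the student and grade every step with the teacher.

    Returns the mean per-token divergence and the sampled token sequence.
    """
    tokens = list(prompt)
    per_token_kl = []
    for _ in range(max_new_tokens):
        student_probs = softmax(student_fn(tokens))
        teacher_probs = softmax(teacher_fn(tokens))
        # Dense feedback: one divergence value per generated position.
        per_token_kl.append(reverse_kl(student_probs, teacher_probs))
        # On-policy: the next token comes from the student, not the teacher.
        next_token = rng.choices(range(len(student_probs)), weights=student_probs, k=1)[0]
        tokens.append(next_token)
    return sum(per_token_kl) / len(per_token_kl), tokens


def toy_teacher(tokens):
    """Hypothetical teacher: strongly prefers the token after the last one."""
    preferred = (tokens[-1] + 1) % VOCAB_SIZE
    return [3.0 if i == preferred else 0.0 for i in range(VOCAB_SIZE)]


def toy_student(tokens):
    """Hypothetical untrained student: uniform over the vocabulary."""
    return [0.0] * VOCAB_SIZE


if __name__ == "__main__":
    loss, sampled = on_policy_distillation_loss(
        toy_student, toy_teacher, prompt=[0], max_new_tokens=5, rng=random.Random(0)
    )
    print("mean per-token reverse KL:", loss)
    print("student-sampled tokens:", sampled)
```

The key design point is that the sequence is sampled from the student, so the teacher's feedback covers the states the student actually reaches. If the student matched the teacher exactly, the loss would be zero at every position.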

"This isn't just about transferring knowledge—it's about transferring understanding," explained Kevin Lu, former OpenAI researcher and current Thinking Machines Lab team lead. "The teacher doesn't simply provide answers; it guides the reasoning process."

The method offers several advantages:

- Prevents shortcut learning behaviors

- Reduces overfitting risks

- Enhances generalization capabilities

- Maintains model stability during training

Remarkable Efficiency Gains Demonstrated

Initial testing reveals extraordinary performance improvements:

- Achieved results comparable to 32B-parameter models using only one-seventh to one-tenth of the training steps

- Reduced computational costs by two orders of magnitude

- Solved persistent "catastrophic forgetting" issues during enterprise deployment

The implications are particularly significant for resource-constrained organizations. Small businesses and academic teams can now develop specialized models competitive with those from major tech firms.

In one enterprise application test, an AI assistant successfully learned new business knowledge while retaining its original conversational abilities—a longstanding challenge in commercial AI implementation.

Industry Implications and Future Outlook

The research team brings substantial credentials to this development. Kevin Lu previously led key projects at OpenAI before joining Thinking Machines Lab to focus on efficient small-model ecosystems.

The timing aligns with broader industry trends:

- Growing emphasis on vertical-specific AI solutions

- Increasing awareness of computing power limitations

- Demand for cost-effective deployment options

The paper detailing the On-Policy Distillation methodology is available on the Thinking Machines Lab website.

Key Points:

- Up to 100x efficiency gains: Reduces training compute for specialized tasks by as much as two orders of magnitude

- Hybrid approach: Combines reinforcement learning exploration with supervised learning precision

- Commercial viability: Enables smaller organizations to develop competitive AI systems

- Continuous learning: Solves catastrophic forgetting problem for enterprise applications