SmolLM2: The Pocket-Sized AI Revolution You Didn’t See Coming

Move over, colossal language models! Hugging Face has just released SmolLM2, a set of compact and efficient language models that are here to shake things up. While everyone else is busy building skyscrapers in the AI world, Hugging Face is showing us that maybe a cozy, well-designed tiny house can get the job done just as well.

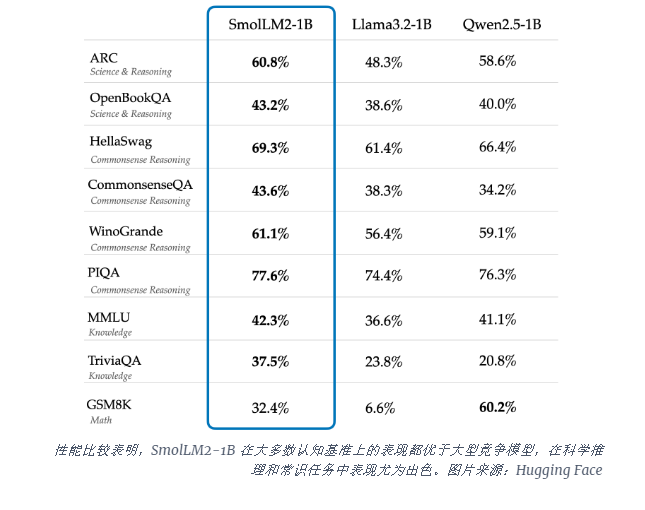

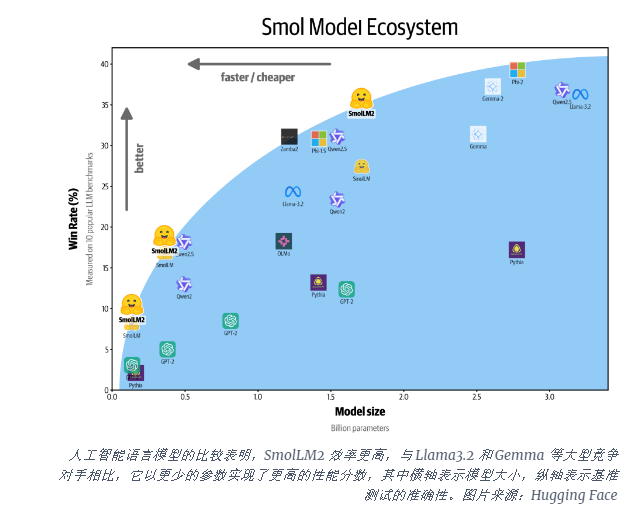

The SmolLM2 family consists of three sizes: 135M, 360M, and 1.7B parameters. And before you start yawning at those smaller numbers, let me tell you: these models pack a serious punch. The largest, SmolLM2-1.7B, outperforms Meta’s Llama 3.2 1B on several key benchmarks, particularly when it comes to scientific reasoning and common sense tasks. Who knew you could get so much power in such a small package?

Why Should You Care?

Well, for starters, while OpenAI and Anthropic are out here flexing their massive models, pushing the boundaries of what's possible (and what your electricity bill can handle), SmolLM2 is all about efficiency. These models are designed to run locally—that’s right, on your device—so you don’t need a supercomputer or a data center the size of a football field to harness their power.

This is a huge deal for those of us who don’t have access to endless computational resources. SmolLM2 can be deployed on smartphones and edge devices with limited processing power and memory. So instead of relying on the cloud for everything, you can have AI magic right in the palm of your hand.
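To put "limited memory" into numbers, here is a rough back-of-the-envelope sketch. It assumes the weights dominate memory use and that each parameter takes a fixed number of bytes at a given precision; the function name `weight_memory_gb` and the precision table are illustrative, not part of any SmolLM2 tooling. Real usage will be higher once activations, the KV cache, and runtime overhead are counted.

```python
# Rough estimate of weight memory for each SmolLM2 size.
# Assumption: memory is approximately parameter count x bytes per parameter.
# Parameter counts are the nominal sizes from the model names.

PARAM_COUNTS = {
    "SmolLM2-135M": 135_000_000,
    "SmolLM2-360M": 360_000_000,
    "SmolLM2-1.7B": 1_700_000_000,
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return the approximate weight size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, count in PARAM_COUNTS.items():
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(count, dtype):.2f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name:>13} -> {row}")
```

By this estimate the 1.7B model needs about 3.4 GB for its weights at 16-bit precision and roughly 0.85 GB at 4-bit, which is why quantization matters so much on phones.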

Smol But Mighty

The SmolLM2-1.7B model absolutely crushes it in several cognitive benchmarks, outperforming competitors in areas like math and coding. That's right: this mini-beast is smarter than it looks. Hugging Face has trained these models on a diverse set of datasets, including FineWeb-Edu and specialized math and coding datasets. So when it comes to brains, SmolLM2 isn't cutting any corners.

The Perfect Timing

SmolLM2’s release couldn’t have come at a more perfect time. The AI world is currently obsessed with big models, but those come with some serious downsides: they’re slow, expensive, and resource-hungry. And let’s face it, not everyone has the luxury of a data center. This is where SmolLM2 shines—by offering a more sustainable and accessible approach to AI.

With SmolLM2, small is the new big. It offers companies and individuals a way to access advanced AI tools without needing to rent out half a server farm. Whether it’s text summarization, rewriting, or function calling, these little models can handle it.
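For a taste of what local summarization might look like, here is a minimal sketch using the Hugging Face `transformers` library. It assumes the instruction-tuned checkpoint is published on the Hub as `HuggingFaceTB/SmolLM2-1.7B-Instruct` and that `transformers` and PyTorch are installed; the helper names `build_summary_messages` and `summarize` are made up for illustration, and the prompt wording is just one reasonable choice.

```python
# Minimal local summarization sketch (not an official recipe).
# Assumption: the instruct checkpoint lives at this Hub ID.
MODEL_ID = "HuggingFaceTB/SmolLM2-1.7B-Instruct"


def build_summary_messages(text: str) -> list:
    """Wrap the input text in a single-turn chat asking for a summary."""
    prompt = f"Summarize the following text in one sentence:\n\n{text}"
    return [{"role": "user", "content": prompt}]


def summarize(text: str, max_new_tokens: int = 60) -> str:
    """Generate a short summary on the local machine."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Render the chat into the prompt format the model was trained on.
    prompt = tokenizer.apply_chat_template(
        build_summary_messages(text),
        tokenize=False,
        add_generation_prompt=True,
    )
    # The template already contains the special tokens, so do not add more.
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False)

    # Greedy decoding keeps the output deterministic.
    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, do_sample=False
    )

    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `summarize(article_text)` downloads the weights on first use and then runs entirely on your own hardware. Swapping `MODEL_ID` for one of the smaller sizes trades quality for speed and memory.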

A Glimpse Into the Future

It’s clear that SmolLM2 is part of a much larger trend in AI: a shift toward efficiency. While big models will always have their place, there’s increasing recognition that bigger isn’t always better. Sometimes, you need something that’s lean, mean, and capable of getting the job done without breaking the bank, or your computer.

SmolLM2 is pointing the way to a future where efficient AI is king, and where we don’t all need to be at the mercy of tech giants and their enormous data centers. It’s a future where everyone can have access to innovative AI tools. Sounds pretty great, right?

Final Thoughts

The release of SmolLM2 is a game-changer in an industry that’s been entirely too focused on scale for too long. These tiny models are proving that you don’t need to be a giant to stand tall. Hugging Face’s SmolLM2 is a bold step toward a future where accessibility, efficiency, and performance are the name of the game. And honestly, we’re here for it.

Summary

- Hugging Face launched SmolLM2, a set of compact language models designed to be efficient and powerful.

- The models come in three sizes: 135M, 360M, and 1.7B parameters.

- SmolLM2-1.7B outperforms Meta’s Llama 3.2 1B on key benchmarks, especially in scientific reasoning and common sense.

- These models are perfect for smartphones and edge devices, not just large data centers.

- SmolLM2 signals a shift toward efficient AI that’s accessible to more users and companies.